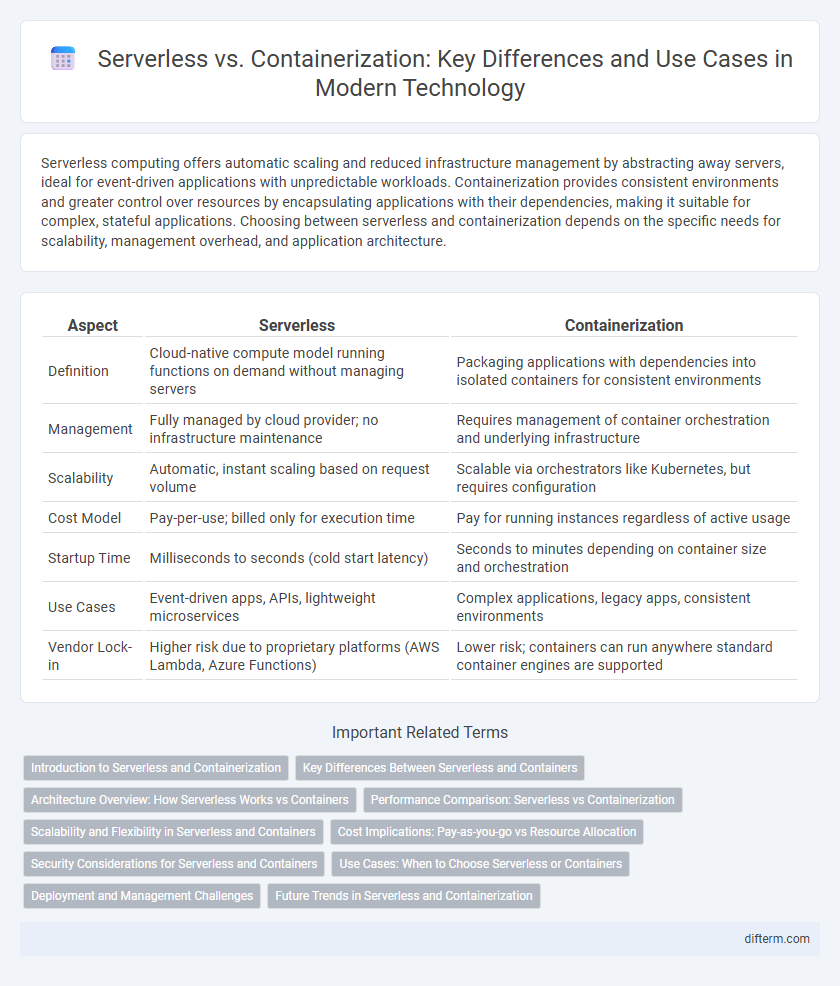

Serverless computing offers automatic scaling and reduced infrastructure management by abstracting away servers, which makes it well suited to event-driven applications with unpredictable workloads. Containerization provides consistent environments and greater control over resources by encapsulating applications with their dependencies, making it suitable for complex, stateful applications. Choosing between serverless and containerization depends on the application's requirements for scalability, management overhead, and architecture.

Table of Comparison

| Aspect | Serverless | Containerization |
|---|---|---|
| Definition | Cloud-native compute model that runs functions on demand without server management | Packaging applications and their dependencies into isolated containers for consistent environments |
| Management | Fully managed by the cloud provider; no infrastructure maintenance | Requires managing container orchestration and the underlying infrastructure |
| Scalability | Automatic scaling based on request volume, within provider concurrency limits | Scalable via orchestrators such as Kubernetes, but requires configuration |
| Cost Model | Pay-per-use; billed only for requests and execution time | Pay for running instances regardless of active usage |
| Startup Time | Milliseconds to seconds (cold start latency) | Seconds to minutes, depending on container size and orchestration |
| Use Cases | Event-driven apps, APIs, lightweight microservices | Complex applications, legacy apps, consistent environments |
| Vendor Lock-in | Higher risk due to proprietary platforms (AWS Lambda, Azure Functions) | Lower risk; containers can run anywhere a standard container engine is supported |

Introduction to Serverless and Containerization

Serverless computing allows developers to deploy code without managing underlying infrastructure, enabling automatic scaling and billing based on execution time. Containerization packages applications with their dependencies into lightweight, portable containers, ensuring consistent environments across development and production. Both technologies enhance agility, but serverless abstracts infrastructure management while containers provide more control over runtime environments.

Key Differences Between Serverless and Containers

Serverless computing abstracts server management by automatically scaling functions in response to demand, letting developers focus on code without provisioning infrastructure. Containerization packages applications and their dependencies into isolated environments, providing greater control over deployment, scaling, and runtime consistency. The key differences lie in operational complexity and billing. Serverless offers simplified management, whereas containers typically require an orchestration tool such as Kubernetes. Serverless charges per execution, whereas containers incur costs based on allocated resources.

Architecture Overview: How Serverless Works vs Containers

Serverless architecture operates by abstracting infrastructure management, automatically scaling functions in response to events without requiring server provisioning or maintenance. Containers encapsulate applications and their dependencies into isolated environments, enabling consistent deployment across various platforms with explicit control over resource allocation and lifecycle. While serverless emphasizes event-driven execution and managed scaling, containers offer greater customization and persistent runtimes within orchestrated clusters like Kubernetes.
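
To make the architectural contrast concrete, here is a minimal Python sketch that is not tied to any provider's deployment tooling. The serverless half is a stateless function that a platform would invoke once per event; its `handler(event, context)` signature follows the AWS Lambda Python runtime convention, while the `name` field is a hypothetical payload. The container half is a long-lived process that binds a port and keeps state between requests, built only on the standard library's `http.server`; `GreetingHandler`, `start_container_app`, and `fetch_json` are illustrative names.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


# --- Serverless style: the platform owns the process ---------------------
# Invoked once per event; no port, no server loop, no state kept on purpose.
def handler(event, context):
    name = event.get("name", "world")  # "name" is a hypothetical payload field
    return {"statusCode": 200,
            "body": json.dumps({"greeting": f"hello, {name}"})}


# --- Container style: the application owns the process ------------------
class GreetingHandler(BaseHTTPRequestHandler):
    # Lives as long as the container process does (unsynchronized; a real
    # service would protect shared state with a lock).
    requests_served = 0

    def do_GET(self):
        GreetingHandler.requests_served += 1
        body = json.dumps({"greeting": "hello, world",
                           "served": GreetingHandler.requests_served}).encode()
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep the demo output quiet


def start_container_app(port=0):
    """Bind a port and serve in a background thread (port 0 = any free port)."""
    server = ThreadingHTTPServer(("127.0.0.1", port), GreetingHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def fetch_json(url):
    # Bypass any configured HTTP proxy for this local request.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    with opener.open(url) as resp:
        return json.loads(resp.read())


if __name__ == "__main__":
    # Serverless: simulate the platform delivering one event.
    print(handler({"name": "Ada"}, None))

    # Container: one long-running process answers repeated requests.
    server = start_container_app()
    url = f"http://127.0.0.1:{server.server_address[1]}/"
    print(fetch_json(url))
    print(fetch_json(url))
    server.shutdown()
    server.server_close()
```

In the serverless version the platform decides when a process exists, so the function should not rely on anything surviving between calls; in the container version the `served` counter keeps growing because the process itself persists.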

Performance Comparison: Serverless vs Containerization

Serverless architecture offers dynamic scaling and rapid deployment, absorbing variable workloads without manual resource management, although cold starts can add latency to requests that arrive after a period of inactivity. Containerization provides consistent performance with predictable resource allocation, which suits high-demand applications that need fine-grained control over environment configuration. In general, serverless functions excel at event-driven, intermittent tasks, while containers tend to perform better in sustained, compute-intensive operations.
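
The table above lists cold-start latency as the startup cost of serverless, and a toy latency model shows why traffic shape matters. The sketch assumes a single function instance that is reclaimed after a fixed idle timeout and that pays a fixed cold-start penalty whenever a request arrives after that timeout; `ColdStartModel` and all of its numbers are hypothetical, not measurements of any platform. An always-on container is modeled as paying only `warm_ms` per request.

```python
from dataclasses import dataclass


@dataclass
class ColdStartModel:
    """Toy latency model of one serverless instance; all defaults are hypothetical."""
    warm_ms: float = 20.0           # handler execution time on a warm instance
    cold_penalty_ms: float = 800.0  # extra runtime/init time on a cold start
    idle_timeout_s: float = 600.0   # assumed idle time before the instance is reclaimed

    def latencies(self, arrival_times_s):
        """Per-request latency (ms) for sequential, non-overlapping requests.

        Idle time is measured between arrivals; execution time is ignored.
        """
        result = []
        last_arrival = None
        for t in sorted(arrival_times_s):
            # Cold if this is the first request or the instance sat idle too long.
            cold = last_arrival is None or (t - last_arrival) > self.idle_timeout_s
            result.append(self.warm_ms + (self.cold_penalty_ms if cold else 0.0))
            last_arrival = t
        return result


def mean(values):
    return sum(values) / len(values)


if __name__ == "__main__":
    model = ColdStartModel()
    steady = model.latencies([i * 10 for i in range(100)])    # every 10 s
    sparse = model.latencies([i * 1800 for i in range(100)])  # every 30 min
    print(f"serverless, steady traffic: {mean(steady):.1f} ms")
    print(f"serverless, sparse traffic: {mean(sparse):.1f} ms")
    print(f"always-on container:        {model.warm_ms:.1f} ms")
```

With these assumed numbers, steady traffic averages 28 ms because only the first request is cold, while requests spaced 30 minutes apart pay the full penalty every time. Intermittent workloads still favor serverless on cost, but latency-sensitive ones may need warm capacity.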

Scalability and Flexibility in Serverless and Containers

Serverless computing offers automatic scalability by dynamically allocating resources based on demand, eliminating the need for manual intervention. Containers provide flexibility through consistent environments and support for microservices, allowing developers to scale applications horizontally with precise control. While serverless excels in handling unpredictable workloads, containers enable fine-tuned scalability and customization for complex applications.
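
The difference between automatic and configured scaling shows up in the arithmetic each platform performs. The sketch below implements the core ratio described in the Kubernetes Horizontal Pod Autoscaler documentation, desiredReplicas = ceil(currentReplicas × currentMetric / targetMetric), together with its default 0.1 tolerance. It also uses the common rule of thumb for estimating serverless concurrency: request rate × average duration. It deliberately omits the real controller's stabilization windows, readiness handling, and missing-metric logic, and the `min_replicas`/`max_replicas` defaults are hypothetical placeholders for values you would set on an autoscaler.

```python
import math


def hpa_desired_replicas(current_replicas, current_metric, target_metric,
                         tolerance=0.1, min_replicas=1, max_replicas=10):
    """Simplified Kubernetes HPA calculation (no stabilization window)."""
    ratio = current_metric / target_metric
    # Skip scaling when the observed metric is already close to the target.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Clamp to the bounds configured on the autoscaler.
    return max(min_replicas, min(max_replicas, desired))


def serverless_concurrency(requests_per_second, avg_duration_s):
    """Estimate concurrent executions a provider must run (rate x duration)."""
    # Round first so tiny floating-point error in the product
    # does not round up to an extra instance.
    return math.ceil(round(requests_per_second * avg_duration_s, 9))


if __name__ == "__main__":
    # Containers: 4 pods at 90% CPU against a 60% target scale out to 6 pods.
    print(hpa_desired_replicas(4, 90, 60))
    # Serverless: 200 req/s at 250 ms each needs about 50 concurrent executions.
    print(serverless_concurrency(200, 0.25))
```

The container side only scales because someone chose a metric, a target, and replica bounds; the serverless side performs the equivalent calculation inside the provider, which is what "no manual intervention" amounts to in practice.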

Cost Implications: Pay-as-you-go vs Resource Allocation

Serverless computing uses a pay-as-you-go pricing model that charges only for actual execution time and resource usage, which can reduce costs for variable workloads with unpredictable demand. Containerization requires resources to be allocated in advance, producing largely fixed costs regardless of usage, but it allows better cost control for steady, predictable workloads through optimized infrastructure utilization. The most cost-effective option therefore depends on workload patterns, scalability needs, and budget constraints.
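
The trade-off between the two billing models is easiest to see as a break-even calculation. This is a minimal sketch, assuming a simplified pricing structure (a per-request fee plus a per-GB-second compute charge for serverless, a flat hourly rate per always-on instance for containers). Every price in it is a hypothetical placeholder, not any provider's actual rate, and it ignores free tiers, data transfer, and container autoscaling.

```python
from dataclasses import dataclass

HOURS_PER_MONTH = 730  # common approximation (24 * 365 / 12)


@dataclass
class ServerlessPricing:
    per_million_requests: float  # $ per 1M invocations (hypothetical)
    per_gb_second: float         # $ per GB-second of execution (hypothetical)


@dataclass
class ContainerPricing:
    per_instance_hour: float     # $ per always-on instance-hour (hypothetical)


def serverless_cost_per_request(duration_s, memory_gb, p):
    compute = duration_s * memory_gb * p.per_gb_second
    invocation = p.per_million_requests / 1_000_000
    return compute + invocation


def serverless_monthly_cost(requests, duration_s, memory_gb, p):
    # Pay-as-you-go: cost scales linearly with request volume.
    return requests * serverless_cost_per_request(duration_s, memory_gb, p)


def container_monthly_cost(instances, p):
    # Allocated capacity: billed for every hour the instances run, busy or idle.
    return instances * HOURS_PER_MONTH * p.per_instance_hour


def break_even_requests(duration_s, memory_gb, instances, sp, cp):
    """Monthly request volume at which both models cost the same."""
    return container_monthly_cost(instances, cp) / serverless_cost_per_request(
        duration_s, memory_gb, sp)


if __name__ == "__main__":
    sp = ServerlessPricing(per_million_requests=0.20, per_gb_second=0.00002)
    cp = ContainerPricing(per_instance_hour=0.05)
    for requests in (1_000_000, 10_000_000, 100_000_000):
        s = serverless_monthly_cost(requests, 0.1, 0.5, sp)
        c = container_monthly_cost(2, cp)
        print(f"{requests:>11,} req/month  serverless ${s:8.2f}  containers ${c:8.2f}")
    print(f"break-even: {break_even_requests(0.1, 0.5, 2, sp, cp):,.0f} requests/month")
```

With these placeholder inputs (100 ms per request, 0.5 GB of memory, two always-on instances), the break-even point is roughly 61 million requests per month. Below it, pay-per-use is cheaper; above it, the allocated containers win. Plugging in real prices and measured durations is what turns this into an actual decision.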

Security Considerations for Serverless and Containers

Serverless architectures reduce the attack surface by abstracting infrastructure management, but they introduce risks such as function event-data injection and inadequate identity and permission management. Containerization offers isolated environments that enhance security through namespace separation and resource limits, yet vulnerabilities arise from misconfigured container runtimes and insecure images. Effective security for both models requires rigorous access controls, regular patching, and comprehensive monitoring to detect and mitigate threats in dynamic cloud environments.
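
One common defense against event injection is to treat every field of the triggering event as untrusted. Each field is validated against an allowlist pattern and passed downstream only through parameterized APIs. The Python sketch below assumes a hypothetical `order_id` format and uses an in-memory SQLite table as a stand-in for a real datastore; the `handler(event, context)` shape follows the AWS Lambda Python convention. It complements, rather than replaces, least-privilege permissions for the function.

```python
import json
import re
import sqlite3

# Hypothetical format: exactly 8 uppercase letters or digits.
ORDER_ID = re.compile(r"[A-Z0-9]{8}")


def make_db():
    """In-memory stand-in for a real datastore, seeded with one row."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (id TEXT PRIMARY KEY, status TEXT)")
    conn.execute("INSERT INTO orders VALUES (?, ?)", ("AB12CD34", "shipped"))
    return conn


DB = make_db()


def response(status, payload):
    return {"statusCode": status, "body": json.dumps(payload)}


def handler(event, context):
    # Treat the whole event as untrusted input, whatever service emitted it.
    order_id = event.get("order_id") if isinstance(event, dict) else None
    if not isinstance(order_id, str) or not ORDER_ID.fullmatch(order_id):
        return response(400, {"error": "invalid order_id"})
    # Parameterized query: the value is bound, never spliced into the SQL text.
    row = DB.execute("SELECT status FROM orders WHERE id = ?", (order_id,)).fetchone()
    if row is None:
        return response(404, {"error": "order not found"})
    return response(200, {"order_id": order_id, "status": row[0]})


if __name__ == "__main__":
    print(handler({"order_id": "AB12CD34"}, None))
    print(handler({"order_id": "x' OR '1'='1"}, None))  # rejected before any query runs
```

The same pattern applies when the event comes from a queue, a storage notification, or an API gateway: the event source does not make the payload trustworthy.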

Use Cases: When to Choose Serverless or Containers

Serverless architecture excels in event-driven applications, microservices with unpredictable workloads, and rapid development environments that require automatic scaling without infrastructure management. Containers are ideal for complex, stateful applications needing consistent runtime environments, hybrid cloud deployments, and microservices requiring fine-grained control over resource allocation. Choosing serverless or containers depends on factors like workload variability, management overhead, and operational complexity specific to the application's deployment and scaling needs.

Deployment and Management Challenges

Serverless deployment eliminates server management by abstracting infrastructure, enabling rapid scaling and simplified operations, but it can introduce challenges such as cold-start latency and limited control over the execution environment. Containerization offers greater control and consistency across development, testing, and production environments, yet demands complex orchestration and resource management with tools like Kubernetes. Balancing these approaches requires weighing deployment speed, operational overhead, and the scalability needs of each workload.

Future Trends in Serverless and Containerization

Future trends in serverless computing emphasize increased integration with artificial intelligence and machine learning, enabling more intelligent, event-driven applications with reduced operational complexity. Containerization is evolving toward more capable orchestration (notably in the Kubernetes ecosystem), improved multi-cloud support, and stronger security features to support scalable, portable microservices architectures. The convergence of serverless and containerization technologies points to hybrid models that optimize resource efficiency and application deployment speed in cloud-native environments.

Serverless vs Containerization Infographic

difterm.com