Tokenization replaces sensitive pet security data with non-sensitive equivalents called tokens, ensuring that the original information is stored securely in a separate system. Masking conceals sensitive data by obscuring it with characters or symbols, allowing limited data visibility without exposing the actual content. Both methods enhance pet security by reducing exposure risks, but tokenization offers stronger protection for data at rest, while masking is ideal for maintaining usability in real-time access scenarios.

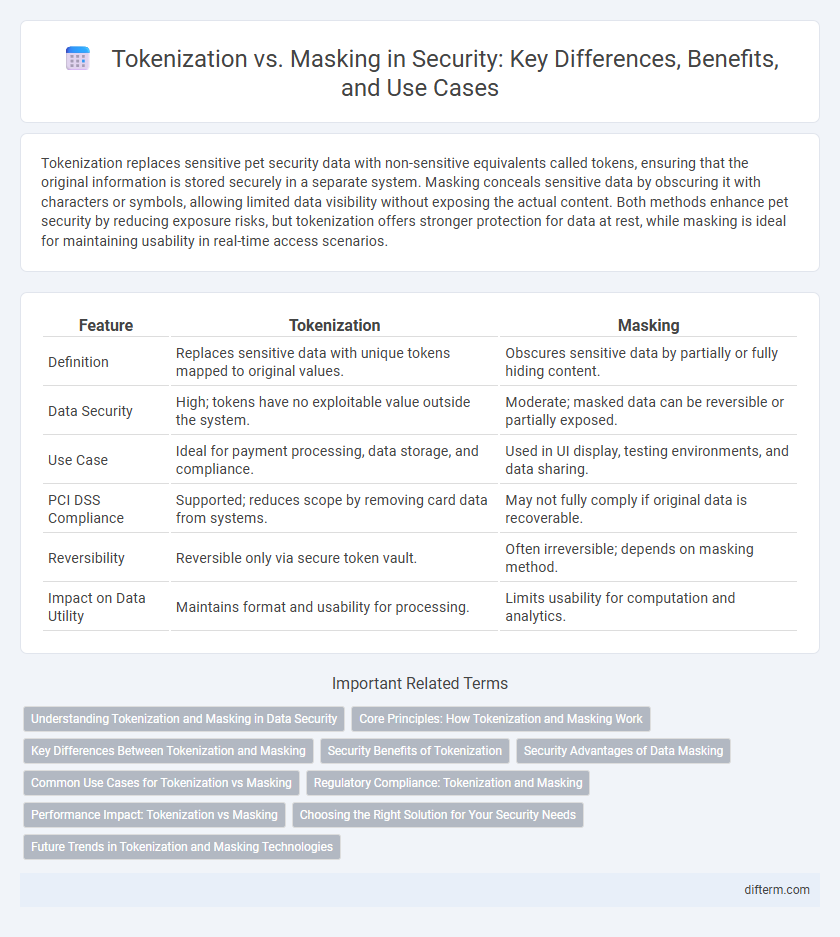

Table of Comparison

| Feature | Tokenization | Masking |

|---|---|---|

| Definition | Replaces sensitive data with unique tokens mapped to original values. | Obscures sensitive data by partially or fully hiding content. |

| Data Security | High; tokens have no exploitable value outside the system. | Moderate; masked data can be reversible or partially exposed. |

| Use Case | Ideal for payment processing, data storage, and compliance. | Used in UI display, testing environments, and data sharing. |

| PCI DSS Compliance | Supported; reduces scope by removing card data from systems. | May not fully comply if original data is recoverable. |

| Reversibility | Reversible only via secure token vault. | Often irreversible; depends on masking method. |

| Impact on Data Utility | Maintains format and usability for processing. | Limits usability for computation and analytics. |

Understanding Tokenization and Masking in Data Security

Tokenization replaces sensitive data with unique identification symbols that retain essential information without compromising security, ensuring data is protected both at rest and in transit. Masking obscures data by altering or redacting specific characters to prevent unauthorized access while maintaining usability for testing or analysis. Understanding the distinct applications and benefits of tokenization and masking is crucial for implementing effective data security strategies that comply with industry regulations.

Core Principles: How Tokenization and Masking Work

Tokenization replaces sensitive data with unique, non-sensitive tokens that preserve the original data format without exposing actual values, enhancing data security in databases and transactions. Masking conceals data by obscuring or redacting portions of the information, ensuring sensitive details remain hidden during processing or display. Both methods operate on the core principle of protecting sensitive data from unauthorized access while maintaining usability for authorized operations.

Key Differences Between Tokenization and Masking

Tokenization replaces sensitive data with unique identification symbols that retain essential information without exposing actual values, while masking obscures data by altering its appearance to prevent unauthorized access. Tokenization creates reversible mappings stored securely, enabling data restoration, whereas masking is often irreversible and used primarily for data protection during display or testing. The key differences include tokenization's utility in secure data storage and transmission, contrasted with masking's focus on privacy during data use and sharing.

Security Benefits of Tokenization

Tokenization enhances security by replacing sensitive data with non-sensitive tokens, eliminating the risk of exposure if intercepted. Unlike masking, which hides data but retains its structure, tokenization ensures that the original information is irretrievable without the tokenization system's secret mapping. This method dramatically reduces the attack surface and helps businesses comply with stringent data protection regulations such as PCI DSS and GDPR.

Security Advantages of Data Masking

Data masking enhances security by transforming sensitive information into realistic but fictitious data, thereby reducing the risk of exposure during testing and development without compromising data integrity. Unlike tokenization, data masking allows for safe data usage across multiple environments while maintaining format and usability, minimizing the attack surface for unauthorized access. This approach ensures compliance with privacy regulations like GDPR and HIPAA by protecting personally identifiable information (PII) and sensitive financial data from insider threats and external breaches.

Common Use Cases for Tokenization vs Masking

Tokenization is commonly used in payment processing systems to replace sensitive cardholder data with non-sensitive tokens, ensuring secure transactions and compliance with PCI DSS standards. Masking is typically applied in data analytics, software testing, and customer support environments to obscure sensitive information like Social Security numbers or personal identifiers while maintaining data usability. Both techniques enhance data privacy but are chosen based on specific security needs and operational contexts.

Regulatory Compliance: Tokenization and Masking

Tokenization replaces sensitive data with non-sensitive tokens, ensuring compliance with PCI DSS and GDPR by minimizing exposure of protected information. Masking obscures data within systems to prevent unauthorized access but may still retain original data in secure environments, requiring strict access controls to meet HIPAA standards. Effective regulatory compliance often involves integrating tokenization for data-at-rest protection and masking for controlled data usage in development or testing environments.

Performance Impact: Tokenization vs Masking

Tokenization enhances security by replacing sensitive data with non-sensitive tokens, minimizing performance overhead due to its stateless processing nature, which allows faster data retrieval and less impact on system resources. Masking, on the other hand, can introduce higher latency as it requires real-time data modification to obfuscate sensitive information, potentially slowing down data access and query operations. Organizations prioritizing performance should consider tokenization for large-scale environments requiring quick data throughput with robust privacy protection.

Choosing the Right Solution for Your Security Needs

Tokenization replaces sensitive data with unique identifiers, ensuring original data is stored securely offsite, making it ideal for compliance-heavy industries like finance and healthcare. Masking conceals data by altering its appearance while retaining usability, suitable for non-production environments such as development and testing. Selecting between tokenization and masking depends on regulatory requirements, data usage patterns, and the balance between security and operational needs.

Future Trends in Tokenization and Masking Technologies

Future trends in tokenization emphasize integration with AI-driven analytics to enhance real-time data protection and threat detection, while masking technologies are evolving towards dynamic, context-aware solutions that adapt based on user roles and data sensitivity. Advances in homomorphic encryption and secure multi-party computation are expected to complement tokenization by enabling secure data processing without exposure. The convergence of these technologies will drive more robust, scalable, and compliant data security frameworks in cloud-native and hybrid environments.

Tokenization vs Masking Infographic

difterm.com

difterm.com