AI training involves teaching models to recognize patterns by processing vast datasets and adjusting neural network weights, requiring significant computational power and time. AI inference uses these trained models to make predictions or decisions in real-time, demanding faster processing but less computational intensity than training. Optimizing hardware and software for inference enhances responsiveness and energy efficiency in AI applications.

Table of Comparison

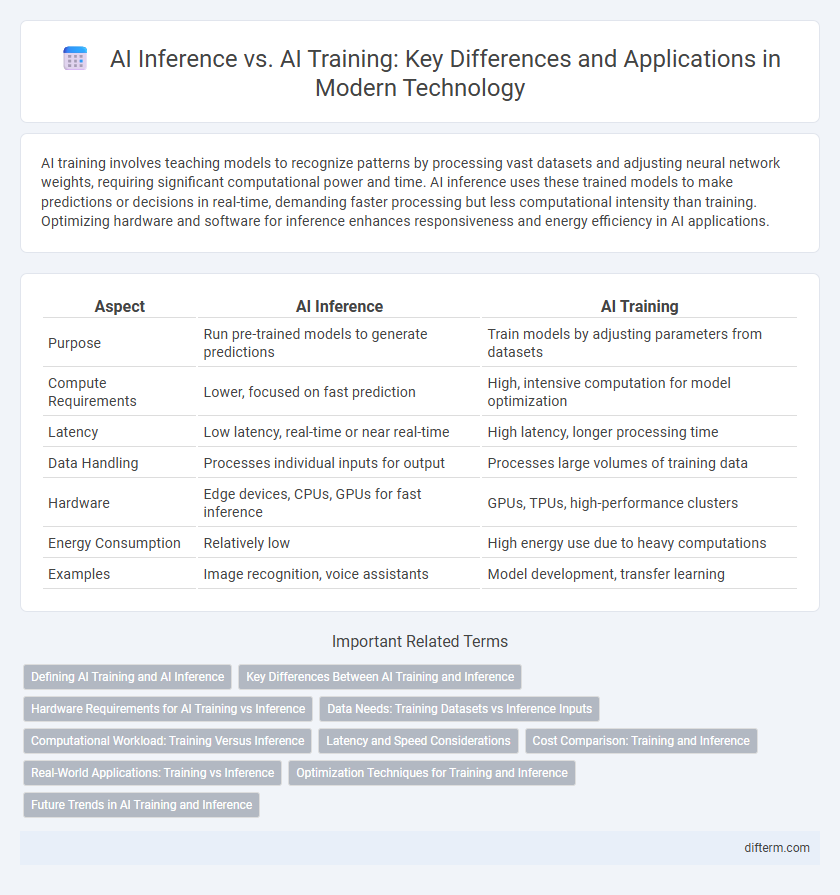

| Aspect | AI Inference | AI Training |

|---|---|---|

| Purpose | Run pre-trained models to generate predictions | Train models by adjusting parameters from datasets |

| Compute Requirements | Lower, focused on fast prediction | High, intensive computation for model optimization |

| Latency | Low latency, real-time or near real-time | High latency, longer processing time |

| Data Handling | Processes individual inputs for output | Processes large volumes of training data |

| Hardware | Edge devices, CPUs, GPUs for fast inference | GPUs, TPUs, high-performance clusters |

| Energy Consumption | Relatively low | High energy use due to heavy computations |

| Examples | Image recognition, voice assistants | Model development, transfer learning |

Defining AI Training and AI Inference

AI training involves feeding large datasets into machine learning models to adjust their parameters for accurate pattern recognition, while AI inference utilizes the trained model to analyze new data and generate predictions or decisions in real-time environments. Training requires substantial computational resources and time, typically performed on specialized hardware like GPUs or TPUs, whereas inference emphasizes low latency and efficiency for deployment in applications such as voice assistants and autonomous systems. Understanding the distinction between training and inference is critical for optimizing AI workflows and hardware selection.

Key Differences Between AI Training and Inference

AI training involves building and optimizing models by processing large datasets through complex algorithms to adjust internal parameters, requiring significant computational power and time. Inference uses the trained model to make predictions or decisions on new, unseen data rapidly with lower resource demands. The key differences lie in their purpose--training focuses on learning and model creation, while inference emphasizes real-time application and scalability.

Hardware Requirements for AI Training vs Inference

AI training demands high-performance GPUs or TPUs with extensive memory bandwidth and parallel processing capabilities to handle massive datasets and complex model computations. Inference hardware prioritizes lower latency and energy efficiency, often utilizing specialized accelerators like edge TPUs or FPGAs to deploy pre-trained models in real-time applications. Training infrastructure requires robust cooling and power management systems due to intensive computational loads, whereas inference setups focus on scalability and integration within diverse device environments.

Data Needs: Training Datasets vs Inference Inputs

AI training requires massive, diverse datasets to enable models to learn patterns and generalize across tasks, often involving billions of labeled examples. In contrast, AI inference relies on real-time or batch input data that is significantly smaller and processed to generate predictions or decisions quickly. Optimizing data quality and relevance during training enhances model accuracy, while efficient handling of inference inputs reduces latency and computational cost.

Computational Workload: Training Versus Inference

AI training demands extensive computational resources, often involving high-performance GPUs and TPUs to process massive datasets and optimize model parameters through iterative learning. Inference generally requires significantly less computation, focusing on real-time or near-real-time processing to generate predictions based on the trained model. Efficient inference solutions prioritize low latency and energy consumption, making them suitable for deployment in edge devices and production environments.

Latency and Speed Considerations

AI inference requires low latency to deliver real-time responses, often demanding optimized hardware like GPUs or specialized AI accelerators to speed up data processing. AI training involves extensive computational resources and longer processing time due to large datasets and complex model updates, prioritizing throughput over immediate speed. Balancing latency and speed considerations is crucial for deploying efficient AI systems tailored to specific application needs.

Cost Comparison: Training and Inference

AI training demands extensive computational resources and energy consumption, resulting in significantly higher costs compared to inference. Inference operates with lower latency and reduced power requirements, making it more cost-effective for deployment in real-time applications. Cost optimization strategies prioritize allocating budget toward efficient inference hardware while leveraging cloud solutions for intensive training workloads.

Real-World Applications: Training vs Inference

AI training involves processing large datasets to develop models capable of recognizing patterns and making decisions, which requires significant computational power and time. Inference applies these trained models to real-world scenarios, enabling instant decision-making and predictions on edge devices or cloud services with lower latency and resource requirements. Real-world applications prioritize efficient inference for tasks like autonomous driving, natural language processing, and personalized recommendations, while training remains a backend process essential for continuous model improvement.

Optimization Techniques for Training and Inference

AI training optimization techniques prioritize data parallelism, mixed precision, and adaptive learning rates to accelerate model convergence and enhance computational efficiency. Inference optimization focuses on model quantization, pruning, and hardware-specific acceleration to reduce latency and energy consumption while maintaining accuracy. Techniques like knowledge distillation bridge training and inference by transferring learned representations to lightweight models optimized for real-time deployment.

Future Trends in AI Training and Inference

Future trends in AI training emphasize the integration of more efficient algorithms and specialized hardware accelerators, enabling faster model convergence and reduced energy consumption. In AI inference, developments focus on edge computing and real-time processing capabilities to support low-latency applications across IoT devices and autonomous systems. Advances in federated learning and transfer learning are set to enhance both training and inference by enabling privacy-preserving, scalable, and adaptive AI deployments.

AI inference vs AI training Infographic

difterm.com

difterm.com