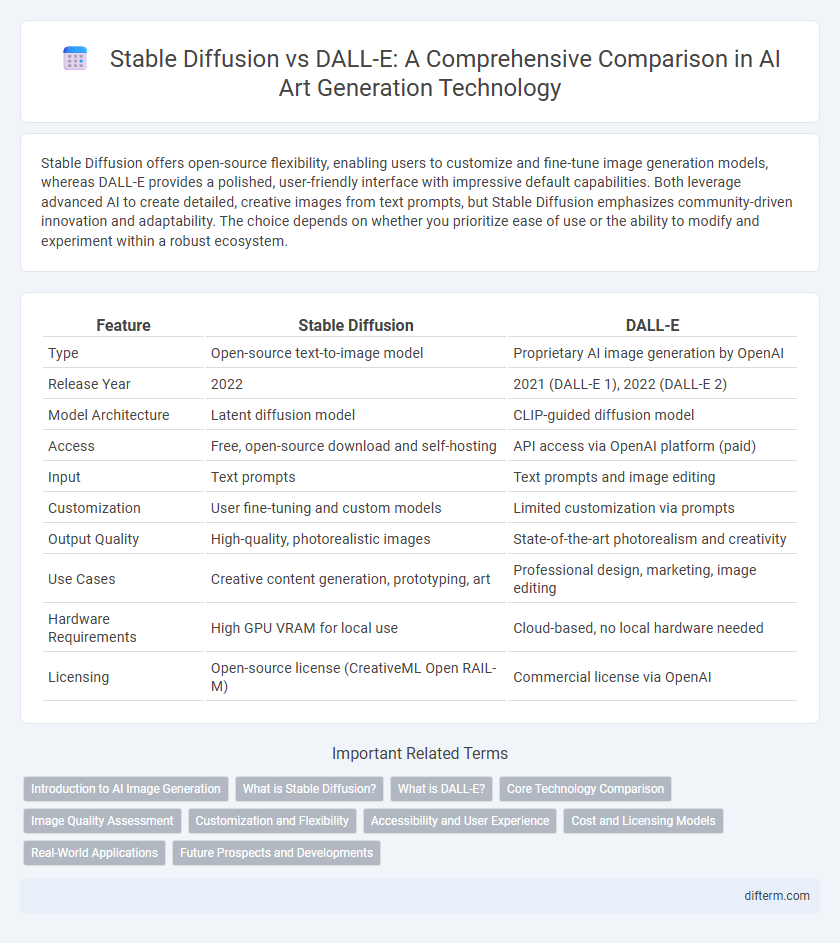

Stable Diffusion offers open-source flexibility, enabling users to customize and fine-tune image generation models, whereas DALL-E provides a polished, user-friendly interface with impressive default capabilities. Both leverage advanced AI to create detailed, creative images from text prompts, but Stable Diffusion emphasizes community-driven innovation and adaptability. The choice depends on whether you prioritize ease of use or the ability to modify and experiment within a robust ecosystem.

Table of Comparison

| Feature | Stable Diffusion | DALL-E |
|---|---|---|
| Type | Open-source text-to-image model | Proprietary AI image generation by OpenAI |
| Release Year | 2022 | 2021 (DALL-E 1), 2022 (DALL-E 2) |
| Model Architecture | Latent diffusion model | Autoregressive transformer over image tokens (DALL-E 1); CLIP-guided diffusion model (DALL-E 2) |
| Access | Free download and self-hosting | API access via OpenAI platform (paid) |
| Input | Text prompts | Text prompts and image editing |
| Customization | User fine-tuning and custom models | Limited customization via prompts |
| Output Quality | High-quality, photorealistic images | High-quality photorealism and creativity |
| Use Cases | Creative content generation, prototyping, art | Professional design, marketing, image editing |
| Hardware Requirements | Dedicated GPU with sufficient VRAM for local use | Cloud-based, no local hardware needed |
| Licensing | CreativeML Open RAIL-M (open license with use-based restrictions) | Commercial license via OpenAI |

Introduction to AI Image Generation

AI image generation employs advanced neural networks to create visuals from textual descriptions, with Stable Diffusion and DALL-E as leading models. Stable Diffusion utilizes latent diffusion techniques to generate high-quality, diverse images efficiently and is open-source, enabling widespread customization and integration. DALL-E, developed by OpenAI, leverages transformer models trained on extensive datasets to produce imaginative and coherent images, excelling in understanding complex prompts and fine details.

What is Stable Diffusion?

Stable Diffusion is an open-source deep learning model that generates high-quality, photorealistic images from textual descriptions using latent diffusion. Rather than denoising full-resolution pixels, it progressively refines a compressed latent representation and then decodes the result into an image, which makes synthesis faster and more memory-efficient than pixel-space diffusion. This approach lets Stable Diffusion produce diverse, detailed visuals with far less computation than pixel-space diffusion models, enough to run on a single consumer GPU.
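To make the efficiency argument concrete, the sketch below counts how many values the denoiser has to handle in pixel space versus latent space. The figures are assumptions taken from the Stable Diffusion v1 configuration (512×512 RGB output, an autoencoder that downsamples each side by a factor of 8 into 4 latent channels); other checkpoints may use different values, and the helper names are illustrative only.

```python
def tensor_size(height: int, width: int, channels: int) -> int:
    """Number of scalar values in an image-like tensor."""
    return height * width * channels


def latent_shape(height: int, width: int, downsample: int = 8, latent_channels: int = 4):
    """Shape of the latent tensor for a given image size.

    The defaults (factor 8, 4 channels) are assumed from Stable Diffusion v1.
    """
    if height % downsample or width % downsample:
        raise ValueError("image sides must be divisible by the downsampling factor")
    return height // downsample, width // downsample, latent_channels


def compression_ratio(height: int, width: int) -> float:
    """How many times fewer values the latent holds than the RGB image."""
    pixel_values = tensor_size(height, width, 3)
    latent_values = tensor_size(*latent_shape(height, width))
    return pixel_values / latent_values


if __name__ == "__main__":
    print(tensor_size(512, 512, 3))                 # 786432 values in pixel space
    print(tensor_size(*latent_shape(512, 512)))     # 16384 values in latent space
    print(compression_ratio(512, 512))              # 48.0
```

Under these assumptions the denoising network touches 48 times fewer values per step, which is where most of the speed and memory savings come from.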

What is DALL-E?

DALL-E is an artificial intelligence model developed by OpenAI that generates images from textual descriptions. The original DALL-E (2021) is a 12-billion-parameter transformer built on the GPT-3 architecture that produces an image as a sequence of discrete tokens, while DALL-E 2 (2022) pairs CLIP embeddings with a diffusion decoder. It combines natural language processing and computer vision to create novel and diverse visuals, enabling applications in design, advertising, and creative industries, and it handles complex prompts with notable detail and coherence.

Core Technology Comparison

Stable Diffusion uses a latent diffusion model: an autoencoder compresses images into a lower-dimensional latent space, and the denoising network operates there, which makes generation faster and more memory-efficient than working on full-resolution pixels. The original DALL-E instead uses an autoregressive transformer that generates an image one discrete token at a time, where each token encodes an image patch rather than a single pixel; DALL-E 2 switched to a diffusion decoder guided by CLIP embeddings. Both models rely on deep learning and large-scale training datasets, but Stable Diffusion's latent-space design, together with its openly available weights, makes it more scalable and accessible for open-source image generation applications.
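The structural difference between the two approaches shows up in how many sequential model calls each one needs. The toy sketch below replaces both neural networks with stubs and only counts calls, so it illustrates the control flow, not the actual models. The sizes are assumptions: a 32×32 grid of image tokens with an 8192-entry codebook for the original DALL-E, and 50 denoising steps over a 64×64×4 latent for Stable Diffusion. All function names are hypothetical.

```python
import random


def autoregressive_generate(num_tokens: int, vocab_size: int, seed: int = 0):
    """Emit image tokens one at a time; each token costs one model call."""
    rng = random.Random(seed)
    tokens = []
    calls = 0
    for _ in range(num_tokens):
        # A real transformer would condition on the prompt and on `tokens`;
        # this stub just draws a random codebook index.
        tokens.append(rng.randrange(vocab_size))
        calls += 1
    return tokens, calls


def diffusion_generate(latent_size: int, num_steps: int, seed: int = 0):
    """Start from noise and update the whole latent once per step."""
    rng = random.Random(seed)
    latent = [rng.gauss(0.0, 1.0) for _ in range(latent_size)]
    calls = 0
    for _ in range(num_steps):
        # A real denoiser would predict and subtract noise; this stub only
        # shrinks every value to mimic one whole-latent update.
        latent = [0.9 * value for value in latent]
        calls += 1
    return latent, calls


if __name__ == "__main__":
    _, ar_calls = autoregressive_generate(num_tokens=32 * 32, vocab_size=8192)
    _, diff_calls = diffusion_generate(latent_size=64 * 64 * 4, num_steps=50)
    print("autoregressive model calls:", ar_calls)
    print("diffusion model calls:", diff_calls)
```

The autoregressive loop scales with the number of image tokens, while the diffusion loop scales with the chosen step count, which the user can lower to trade quality for speed.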

Image Quality Assessment

Stable Diffusion can deliver detailed images with strong texture and color rendering, although results vary with the checkpoint, sampler settings, and prompt. DALL-E excels in creative image synthesis with coherent object structures but may produce slightly lower sharpness and subtle artifacts. Reference-based metrics such as PSNR (Peak Signal-to-Noise Ratio) and SSIM (Structural Similarity Index) measure how closely an output matches a known target image, so they suit reconstruction tasks rather than open-ended text-to-image output; comparisons between generators more often rely on distribution-level scores such as FID, CLIP-based text-image similarity, and human preference studies.
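For readers unfamiliar with PSNR, the following minimal sketch computes it for two images given as flat lists of 8-bit pixel values. Note that the function needs a reference image as input, which is why the metric only makes sense when a ground-truth target exists. The function name and the flat-list representation are choices made for this illustration.

```python
import math


def psnr(reference, candidate, max_value: float = 255.0) -> float:
    """Peak Signal-to-Noise Ratio in decibels between two equal-sized images.

    Both images are flat sequences of pixel intensities in [0, max_value].
    """
    if len(reference) != len(candidate) or len(reference) == 0:
        raise ValueError("images must be non-empty and the same size")
    # Mean squared error between corresponding pixels.
    mse = sum((r - c) ** 2 for r, c in zip(reference, candidate)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


if __name__ == "__main__":
    target = [0, 128, 255, 64]
    reconstruction = [0, 130, 250, 64]
    print(f"PSNR: {psnr(target, reconstruction):.2f} dB")
```

A higher value means the candidate is closer to the reference; identical images give infinity.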

Customization and Flexibility

Stable Diffusion offers advanced customization through open-source access, enabling users to fine-tune models and adjust parameters for tailored image generation. DALL-E provides a user-friendly interface with fixed model capabilities, limiting flexibility but ensuring consistent output quality. The open architecture of Stable Diffusion supports extensive modifications, making it preferable for developers seeking adaptable AI creativity tools.
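As an illustration of the parameters a self-hosted setup exposes, here is a minimal sketch using the Hugging Face `diffusers` library. It assumes `diffusers` and `torch` are installed, a CUDA GPU is available, and the caller supplies a model identifier they have access to (the one in the usage comment is only an example). The helper names `build_generation_kwargs` and `generate_image` are hypothetical.

```python
def build_generation_kwargs(prompt, steps=30, guidance_scale=7.5, negative_prompt=None):
    """Collect the sampling parameters passed to the pipeline call."""
    if not prompt:
        raise ValueError("prompt must not be empty")
    if steps < 1:
        raise ValueError("steps must be at least 1")
    kwargs = {
        "prompt": prompt,
        "num_inference_steps": steps,      # more steps: slower, often cleaner
        "guidance_scale": guidance_scale,  # higher: follows the prompt more strictly
    }
    if negative_prompt:
        kwargs["negative_prompt"] = negative_prompt
    return kwargs


def generate_image(model_id, kwargs, seed=0, device="cuda"):
    """Load a checkpoint and generate one image (returns a PIL image)."""
    # Imported lazily so the parameter helper above works without a GPU stack.
    import torch
    from diffusers import StableDiffusionPipeline

    pipe = StableDiffusionPipeline.from_pretrained(model_id, torch_dtype=torch.float16)
    pipe = pipe.to(device)
    # A seeded generator makes the result repeatable for the same settings.
    generator = torch.Generator(device=device).manual_seed(seed)
    return pipe(generator=generator, **kwargs).images[0]


# Example usage (model ID is an assumption; substitute any compatible checkpoint):
# image = generate_image("CompVis/stable-diffusion-v1-4",
#                        build_generation_kwargs("a watercolor fox", steps=25))
# image.save("fox.png")
```

Swapping the model identifier for a fine-tuned checkpoint is the simplest form of the customization described above; hosted services such as DALL-E do not expose the sampler-level controls shown here.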

Accessibility and User Experience

Stable Diffusion offers an open-source model that enables wider accessibility for developers and artists seeking customizable AI-generated images, while DALL-E provides a polished, user-friendly platform with streamlined interfaces for casual users. Stable Diffusion requires more technical knowledge to deploy but grants flexibility in fine-tuning and integration, contrasting with DALL-E's cloud-based service designed for immediate use without coding. User experience in DALL-E emphasizes simplicity and convenience, whereas Stable Diffusion caters to advanced users prioritizing control and adaptability.
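For developers, the hosted route looks roughly like the sketch below, which uses the official `openai` Python package (version 1.x). It assumes the package is installed, an `OPENAI_API_KEY` environment variable is set, and the `dall-e-2` model is still offered on the account; model availability and accepted sizes may change, so treat the constants as assumptions. The helper names are hypothetical.

```python
# Sizes assumed to be accepted by the dall-e-2 image endpoint.
ALLOWED_SIZES = {"256x256", "512x512", "1024x1024"}


def build_image_request(prompt, size="1024x1024", n=1, model="dall-e-2"):
    """Validate inputs and build the keyword arguments for the API call."""
    if not prompt:
        raise ValueError("prompt must not be empty")
    if size not in ALLOWED_SIZES:
        raise ValueError(f"unsupported size: {size}")
    if n < 1:
        raise ValueError("n must be at least 1")
    return {"model": model, "prompt": prompt, "size": size, "n": n}


def request_image_url(request):
    """Send the request and return the URL of the first generated image."""
    # Imported lazily so the request builder works without the SDK installed.
    from openai import OpenAI

    client = OpenAI()  # reads OPENAI_API_KEY from the environment
    response = client.images.generate(**request)
    return response.data[0].url


# Example usage (performs a billable API call):
# url = request_image_url(build_image_request("a lighthouse at dusk", size="512x512"))
# print(url)
```

No model download or GPU is involved; the trade-off is that every call is metered and the model itself cannot be modified.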

Cost and Licensing Models

Stable Diffusion's weights are released under the CreativeML Open RAIL-M license, which lets developers and artists use and modify the model free of charge subject to its use-based restrictions; the main cost is the hardware or cloud compute needed to run it. In contrast, DALL-E operates under a proprietary licensing model with usage-based pricing, requiring users to pay for API access or credits, which may limit accessibility for small projects or individual users. For budget-conscious creators and organizations that generate images at volume or need customization, this makes Stable Diffusion the more cost-flexible option.
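Whether self-hosting actually saves money depends on volume. The sketch below estimates the break-even point between a one-time hardware purchase and per-image API pricing. Every number in the usage example is a hypothetical placeholder, not a quoted price; substitute current figures before drawing conclusions.

```python
import math


def break_even_images(hardware_cost, api_price_per_image, local_cost_per_image=0.0):
    """Number of images after which self-hosting becomes cheaper than the API.

    Returns None when the local per-image cost (electricity, cloud GPU time)
    is not lower than the API price, so the hardware never pays for itself.
    """
    saving_per_image = api_price_per_image - local_cost_per_image
    if saving_per_image <= 0:
        return None
    return math.ceil(hardware_cost / saving_per_image)


if __name__ == "__main__":
    # Hypothetical placeholder figures, for illustration only.
    images = break_even_images(
        hardware_cost=800.0,
        api_price_per_image=0.02,
        local_cost_per_image=0.002,
    )
    print("break-even after", images, "images")
```

Below the break-even volume, pay-per-use pricing is the cheaper route even though the model weights themselves are free.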

Real-World Applications

Stable Diffusion excels in flexible image generation, enabling customized content creation for graphic design, fashion, and advertising industries with open-source accessibility. DALL-E offers highly detailed and imaginative visuals ideal for marketing, entertainment, and concept art, leveraging advanced AI to create lifelike and inventive imagery. Both technologies drive innovation in real-world applications, enhancing creative workflows and expanding the potential of automated visual content generation.

Future Prospects and Developments

Stable Diffusion's open-source architecture fosters rapid innovation and customization, making it a versatile tool for future AI-driven creativity across industries. DALL-E's integration within the broader OpenAI ecosystem leverages advancements in multimodal learning and natural language understanding to enhance image generation capabilities. Both platforms are poised to benefit from ongoing research in efficiency, realism, and user accessibility, driving the next wave of AI-generated visual content.

Stable Diffusion vs DALL-E Infographic

difterm.com
