Serverless architecture removes the need to manage servers directly: the cloud provider provisions and scales resources on demand, which reduces operational overhead and, for variable workloads, often cost. Traditional servers require manual configuration, maintenance, and capacity planning, which can lead to idle capacity and higher expenses. The serverless model enables faster deployment and easier scaling while making better use of resources in modern cloud environments.

Table of Comparison

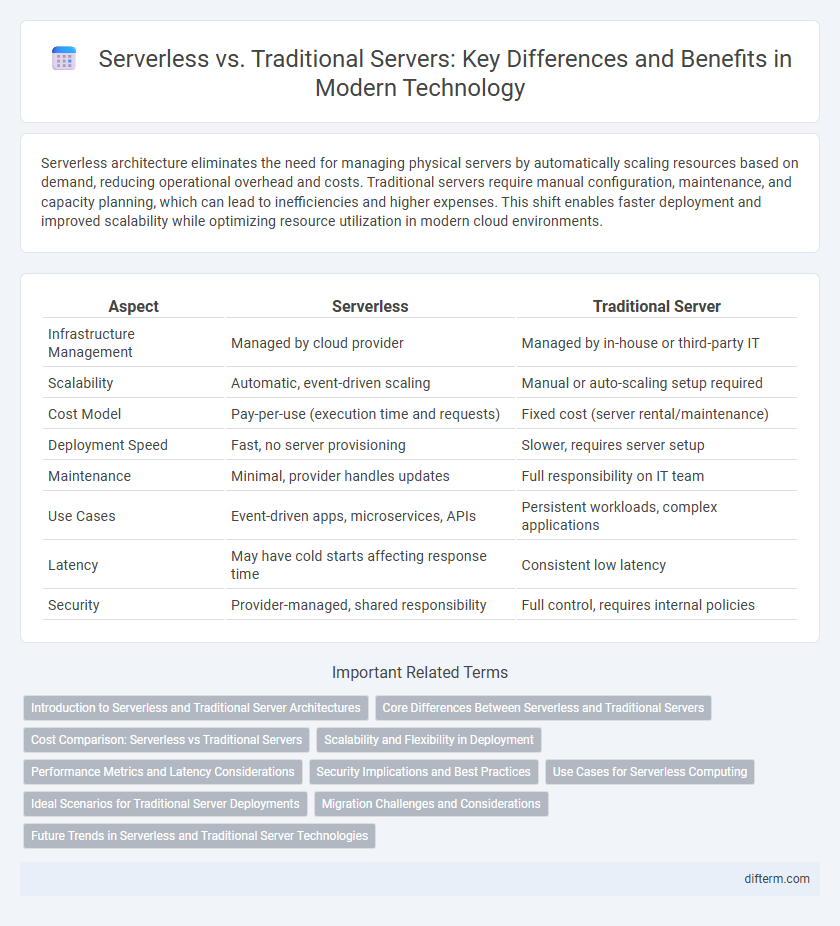

| Aspect | Serverless | Traditional Server |
|---|---|---|
| Infrastructure Management | Managed by cloud provider | Managed by in-house or third-party IT |
| Scalability | Automatic, event-driven scaling | Manual or auto-scaling setup required |
| Cost Model | Pay-per-use (execution time and requests) | Fixed cost (server rental/maintenance) |
| Deployment Speed | Fast, no server provisioning | Slower, requires server setup |
| Maintenance | Minimal, provider handles updates | Full responsibility on IT team |
| Use Cases | Event-driven apps, microservices, APIs | Persistent workloads, complex applications |
| Latency | May have cold starts affecting response time | Consistent low latency |
| Security | Provider-managed, shared responsibility | Full control, requires internal policies |

Introduction to Serverless and Traditional Server Architectures

Serverless architecture eliminates the need for managing physical servers by automatically allocating resources and scaling based on demand, offering flexibility and cost efficiency for developers. Traditional server architecture relies on dedicated physical or virtual servers that require manual configuration, maintenance, and fixed resource allocation. Serverless platforms like AWS Lambda, Azure Functions, and Google Cloud Functions enable event-driven execution, while traditional servers remain foundational for applications demanding consistent resource availability and control.
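To make event-driven execution concrete, here is a minimal sketch of a function written in the `handler(event, context)` style used by AWS Lambda's Python runtime. The `name` field in the event and the response shape are assumptions chosen for illustration; real triggers define their own payloads.

```python
import json


def handler(event, context):
    """Entry point the platform would call once per incoming event."""
    # `event` carries the trigger payload; `name` is a hypothetical field.
    name = event.get("name", "world")
    return {
        "statusCode": 200,
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


if __name__ == "__main__":
    # Local invocation with a fake event; no cloud account is needed.
    print(handler({"name": "serverless"}, None))
```

The function holds no server setup code at all: the platform decides when to start an instance, passes in the event, and tears the instance down when it is idle.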

Core Differences Between Serverless and Traditional Servers

Serverless architecture abstracts server management by automatically provisioning and scaling resources, enabling developers to focus solely on code execution without handling infrastructure. Traditional servers require manual setup, maintenance, and capacity planning, often leading to fixed resource allocation regardless of actual usage. Serverless models operate on a pay-per-use basis, optimizing cost and scalability, whereas traditional servers incur constant expenses regardless of workload fluctuations.

Cost Comparison: Serverless vs Traditional Servers

Serverless computing charges for actual usage, typically execution time and request count, so idle resources cost nothing. Traditional servers incur costs whether or not they are busy: upfront hardware investment for on-premises machines or a fixed rental fee for hosted ones, plus ongoing maintenance. For variable or bursty workloads, serverless platforms avoid the financial burden of over-provisioning and underutilized capacity, although a steady, high-volume workload can be cheaper on a fixed-price server.
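The pay-per-use versus fixed-cost trade-off can be estimated with simple arithmetic. The sketch below assumes a billing model based on GB-seconds of execution plus a per-request fee; all prices and workload figures are placeholders, not quotes from any provider.

```python
def serverless_monthly_cost(requests, avg_seconds, memory_gb,
                            price_per_gb_second, price_per_million_requests):
    """Estimate a monthly pay-per-use bill (compute time plus request fee)."""
    compute = requests * avg_seconds * memory_gb * price_per_gb_second
    request_fee = requests / 1_000_000 * price_per_million_requests
    return compute + request_fee


def break_even_requests(fixed_monthly_cost, avg_seconds, memory_gb,
                        price_per_gb_second, price_per_million_requests):
    """Requests per month at which pay-per-use equals the fixed server cost."""
    per_request = (avg_seconds * memory_gb * price_per_gb_second
                   + price_per_million_requests / 1_000_000)
    return fixed_monthly_cost / per_request


if __name__ == "__main__":
    # Placeholder figures for illustration only.
    pricing = dict(avg_seconds=0.2, memory_gb=0.5,
                   price_per_gb_second=0.00002,
                   price_per_million_requests=0.20)
    print(serverless_monthly_cost(1_000_000, **pricing))
    print(break_even_requests(50.0, **pricing))
```

Below the break-even volume the pay-per-use model is cheaper under these assumptions; above it, the fixed-price server wins.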

Scalability and Flexibility in Deployment

Serverless architectures scale automatically by allocating resources dynamically based on demand, eliminating the need for manual intervention. Traditional servers require pre-provisioning and capacity planning, which can lead to underutilization during quiet periods or insufficient capacity during traffic spikes. Serverless solutions provide greater flexibility in deployment, enabling rapid updates and integration with cloud-native services, while traditional servers often rely on fixed infrastructure setups.
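The effect of fixed capacity on a fluctuating workload can be sketched with a few lines of arithmetic. The demand figures and the capacity of 100 requests per interval are made-up values; an idealized serverless platform is assumed to match allocation to demand exactly, ignoring concurrency limits.

```python
def fixed_capacity_report(demand, capacity):
    """Return (idle, unserved) request totals for a fixed-capacity server."""
    idle = sum(max(capacity - d, 0) for d in demand)      # paid for but unused
    unserved = sum(max(d - capacity, 0) for d in demand)  # demand above capacity
    return idle, unserved


if __name__ == "__main__":
    # Hypothetical requests per interval, with one traffic spike.
    demand = [20, 35, 50, 180, 40, 25]
    idle, unserved = fixed_capacity_report(demand, capacity=100)
    print(f"idle capacity: {idle}, unserved requests: {unserved}")
```

Raising the fixed capacity removes the unserved requests but increases the idle total, which is the capacity-planning dilemma that on-demand allocation avoids.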

Performance Metrics and Latency Considerations

Serverless computing allocates resources dynamically, which helps it absorb traffic spikes that can push response times up on fixed traditional infrastructure. The trade-off is cold start latency: a function that has been idle must be initialized before it can handle a request, whereas an already-running traditional server responds with more predictable latency. Serverless architectures typically compare well on scalability and cost-efficiency, while traditional servers offer more control over hardware configuration and over metrics such as throughput, CPU utilization, and memory consumption. Latency planning must therefore account for function initialization delays in serverless models and network overhead in both setups, especially for applications with real-time performance requirements.
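The impact of function initialization delays on average response time can be estimated with a weighted sum. This is a simplified model with assumed figures (50 ms warm latency, 800 ms cold start penalty, 2% of requests hitting a cold instance), not a measurement of any platform.

```python
def mean_latency_ms(warm_ms, cold_start_ms, cold_fraction):
    """Average latency when a fraction of requests pays a cold start penalty."""
    if not 0.0 <= cold_fraction <= 1.0:
        raise ValueError("cold_fraction must be between 0 and 1")
    # Every request pays the warm latency; cold requests pay the extra delay.
    return warm_ms + cold_fraction * cold_start_ms


if __name__ == "__main__":
    # Assumed figures for illustration only.
    print(mean_latency_ms(warm_ms=50, cold_start_ms=800, cold_fraction=0.02))
```

The mean hides the tail: even when the average rises only slightly, the small share of requests that hit a cold instance sees the full initialization delay, which matters for real-time applications.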

Security Implications and Best Practices

Serverless architecture reduces the infrastructure attack surface by abstracting server management and limiting direct access to the underlying hosts, but under the shared responsibility model the application code, its dependencies, and its permissions remain the customer's responsibility. Traditional servers require comprehensive security measures such as patch management, firewall configuration, and intrusion detection to protect against threats. Best practices for serverless security include enforcing strict IAM policies, securing API gateways, and implementing continuous monitoring to detect anomalies in real time.
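As an illustration of a strict IAM policy, the sketch below builds a least-privilege policy document in the AWS IAM JSON format and checks it for wildcards. The account ID, region, and table name in the ARN are hypothetical, and `has_wildcards` is an illustrative helper, not part of any AWS tool.

```python
import json

# Grants only the two actions the function needs, on a single table.
# The ARN (region, account ID, table name) is a hypothetical example.
policy = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Action": ["dynamodb:GetItem", "dynamodb:PutItem"],
            "Resource": "arn:aws:dynamodb:us-east-1:123456789012:table/Orders",
        }
    ],
}


def _as_list(value):
    """IAM allows a single string or a list; normalize to a list."""
    return [value] if isinstance(value, str) else list(value)


def has_wildcards(policy_doc):
    """Return True if any statement uses '*' in its actions or resources."""
    for statement in policy_doc["Statement"]:
        values = _as_list(statement["Action"]) + _as_list(statement["Resource"])
        if any("*" in v for v in values):
            return True
    return False


if __name__ == "__main__":
    print(json.dumps(policy, indent=2))
    print("contains wildcards:", has_wildcards(policy))
```

A check like this is deliberately crude; it only flags the broadest grants, which are the ones most likely to turn a single compromised function into wider access.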

Use Cases for Serverless Computing

Serverless computing excels in event-driven applications, real-time data processing, and microservices architectures by automatically scaling resources based on demand, reducing operational overhead. It is ideal for web and mobile backends, IoT device management, and batch processing tasks where unpredictable workloads require rapid scaling. Enterprises leverage serverless for cost efficiency, faster development cycles, and seamless integration with cloud-native services compared to traditional server infrastructure.

Ideal Scenarios for Traditional Server Deployments

Traditional server deployments excel in scenarios requiring full control over server configurations, specialized hardware integration, and predictable, high-performance workloads. They are ideal for legacy applications not optimized for cloud environments or organizations with strict compliance and security demands needing dedicated infrastructure. Enterprises benefiting from steady, consistent traffic patterns also find traditional servers cost-effective due to their fixed resource allocation.

Migration Challenges and Considerations

Migrating from traditional servers to serverless architecture presents challenges including application refactoring for stateless design, managing cold start latency, and ensuring secure, scalable function deployment. Key considerations involve evaluating vendor lock-in risks, adapting monitoring tools for ephemeral functions, and reconfiguring legacy workflows to align with event-driven models. Proper planning around data migration, compliance requirements, and cost optimization strategies is essential for a successful transition.
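Refactoring for stateless design usually means moving any state that a long-running server kept in memory into an external store. The sketch below shows the idea with a visit counter; `InMemoryStore` is a hypothetical stand-in for a real database or cache, and the `user_id` event field is an assumption.

```python
class InMemoryStore:
    """Stand-in for an external store (database or cache); hypothetical."""

    def __init__(self):
        self._data = {}

    def increment(self, key):
        self._data[key] = self._data.get(key, 0) + 1
        return self._data[key]


def make_handler(store):
    """Build a handler that keeps no state of its own between invocations."""
    def handler(event, context):
        user_id = event["user_id"]
        # All state lives in the store, so any instance can serve any request.
        return {"user_id": user_id, "visits": store.increment(user_id)}
    return handler


if __name__ == "__main__":
    shared_store = InMemoryStore()
    # Two handlers simulate two separate function instances.
    instance_a = make_handler(shared_store)
    instance_b = make_handler(shared_store)
    print(instance_a({"user_id": "u1"}, None))
    print(instance_b({"user_id": "u1"}, None))
```

Because the count lives in the store rather than in a module-level variable, it survives when the platform discards one instance and routes the next request to another.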

Future Trends in Serverless and Traditional Server Technologies

Future trends in serverless technology emphasize enhanced scalability, reduced latency, and deeper integration with AI-driven automation, enabling developers to deploy applications without managing underlying infrastructure. Traditional server technologies are evolving with advances in edge computing, hybrid cloud architectures, and improved hardware efficiency to support complex workloads and maintain greater control over data security. The convergence of serverless and traditional models fosters flexible, cost-effective solutions that optimize performance while adapting to dynamic business requirements.

difterm.com