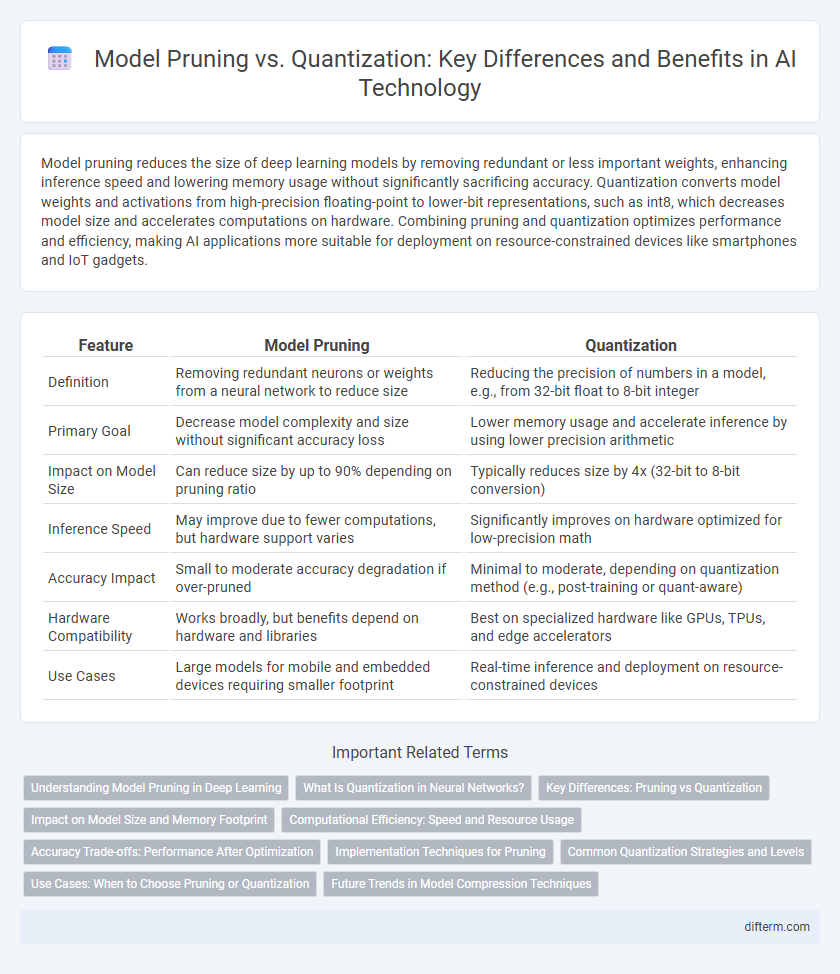

Model pruning reduces the size of deep learning models by removing redundant or less important weights, enhancing inference speed and lowering memory usage without significantly sacrificing accuracy. Quantization converts model weights and activations from high-precision floating-point to lower-bit representations, such as int8, which decreases model size and accelerates computations on hardware. Combining pruning and quantization optimizes performance and efficiency, making AI applications more suitable for deployment on resource-constrained devices like smartphones and IoT gadgets.

Table of Comparison

| Feature | Model Pruning | Quantization |

|---|---|---|

| Definition | Removing redundant neurons or weights from a neural network to reduce size | Reducing the precision of numbers in a model, e.g., from 32-bit float to 8-bit integer |

| Primary Goal | Decrease model complexity and size without significant accuracy loss | Lower memory usage and accelerate inference by using lower precision arithmetic |

| Impact on Model Size | Can reduce size by up to 90% depending on pruning ratio | Typically reduces size by 4x (32-bit to 8-bit conversion) |

| Inference Speed | May improve due to fewer computations, but hardware support varies | Significantly improves on hardware optimized for low-precision math |

| Accuracy Impact | Small to moderate accuracy degradation if over-pruned | Minimal to moderate, depending on quantization method (e.g., post-training or quant-aware) |

| Hardware Compatibility | Works broadly, but benefits depend on hardware and libraries | Best on specialized hardware like GPUs, TPUs, and edge accelerators |

| Use Cases | Large models for mobile and embedded devices requiring smaller footprint | Real-time inference and deployment on resource-constrained devices |

Understanding Model Pruning in Deep Learning

Model pruning in deep learning involves reducing the number of parameters in neural networks by removing less important weights, which enhances model efficiency and decreases memory usage without significantly sacrificing accuracy. Techniques such as magnitude-based pruning eliminate connections with minimal impact on output, enabling faster inference and lower computational costs. This method is essential for deploying deep learning models on resource-constrained devices while maintaining robust performance.

What Is Quantization in Neural Networks?

Quantization in neural networks reduces model size and inference latency by converting high-precision weights and activations into lower-precision formats such as INT8 or FP16 without significantly compromising accuracy. This technique enables deployment of deep learning models on resource-constrained devices like mobile phones and embedded systems by optimizing memory usage and computational efficiency. Quantization methods include post-training quantization and quantization-aware training, both designed to maintain model performance while enhancing execution speed.

Key Differences: Pruning vs Quantization

Model pruning reduces the size of neural networks by removing redundant or less important weights, effectively creating sparsity and improving computational efficiency. Quantization, on the other hand, compresses models by lowering the precision of weights and activations, such as converting 32-bit floating point numbers to 8-bit integers, which decreases memory usage and accelerates inference. While pruning targets model structure and connectivity, quantization focuses on numerical representation, both aiming to optimize deep learning models for deployment on resource-constrained devices.

Impact on Model Size and Memory Footprint

Model pruning reduces model size by removing redundant or less important parameters, leading to a sparser network that occupies less memory and accelerates inference. Quantization decreases memory footprint by representing weights and activations with lower precision data types, such as int8 instead of float32, which significantly compresses the model size and improves runtime efficiency. Combining pruning and quantization can yield a compact model with minimal accuracy loss, optimizing both storage requirements and execution speed on resource-constrained devices.

Computational Efficiency: Speed and Resource Usage

Model pruning reduces computational overhead by eliminating redundant neurons or weights, directly decreasing the number of operations and memory usage, thus speeding up inference on limited hardware. Quantization compresses model parameters by lowering numerical precision, which not only reduces the model size but also allows faster arithmetic computations on specialized processors like GPUs and TPUs. Both techniques enhance computational efficiency, but pruning primarily cuts down the compute load by reducing model complexity, while quantization optimizes resource utilization through smaller data representations and accelerated calculation.

Accuracy Trade-offs: Performance After Optimization

Model pruning reduces network size by eliminating redundant parameters, often preserving accuracy with a modest drop of 1-3%, while quantization compresses models by lowering precision, sometimes causing a larger accuracy reduction up to 5%. Pruning maintains performance in tasks with high parameter redundancy, whereas quantization excels in deploying models on edge devices with limited computation and memory. Balancing pruning's minimal accuracy loss against quantization's efficiency gains is critical for optimizing model performance after deployment.

Implementation Techniques for Pruning

Model pruning implementation techniques focus on structured pruning, which removes entire neurons or channels, and unstructured pruning, targeting individual weights to create sparse networks. Iterative pruning combined with retraining helps maintain model accuracy by gradually eliminating less significant parameters based on magnitude or importance scores. Hardware-aware pruning considers the deployment platform's constraints to optimize speed and efficiency without compromising performance.

Common Quantization Strategies and Levels

Common quantization strategies in technology include uniform and non-uniform quantization, which differ in step size allocation to represent model weights more efficiently. Quantization levels often range from 8-bit to lower bit-widths like 4-bit or even binary, significantly reducing model size and computational requirements without heavily impacting accuracy. These approaches optimize neural network performance in edge devices by balancing precision and resource constraints.

Use Cases: When to Choose Pruning or Quantization

Model pruning is ideal for use cases requiring reduced model size with minimal accuracy loss, such as deploying deep neural networks on edge devices with limited memory. Quantization suits scenarios demanding faster inference and lower power consumption, especially in real-time applications like mobile AI and IoT devices. Combining pruning and quantization can optimize model efficiency in complex environments needing both compact storage and high-speed execution.

Future Trends in Model Compression Techniques

Future trends in model compression techniques emphasize hybrid approaches combining pruning and quantization to maximize efficiency without sacrificing accuracy. Advances in neural architecture search and automated machine learning are driving more adaptive, context-aware compression strategies. Research is also exploring dynamic, layer-wise compression tailored to specific hardware constraints, enabling deployment of larger models on edge devices.

Model pruning vs Quantization Infographic

difterm.com

difterm.com