CPU pinning and NUMA balancing are key techniques for optimizing the performance of demanding applications by managing processor affinity and memory placement. CPU pinning binds processes to specific CPU cores, which stops the scheduler from migrating them and reduces the cache misses that migrations cause. NUMA balancing moves memory pages and tasks between nodes so that memory accesses stay local, lowering latency and saving interconnect bandwidth. Used together carefully, the two can improve resource allocation, reducing latency and increasing throughput in high-demand computing environments.

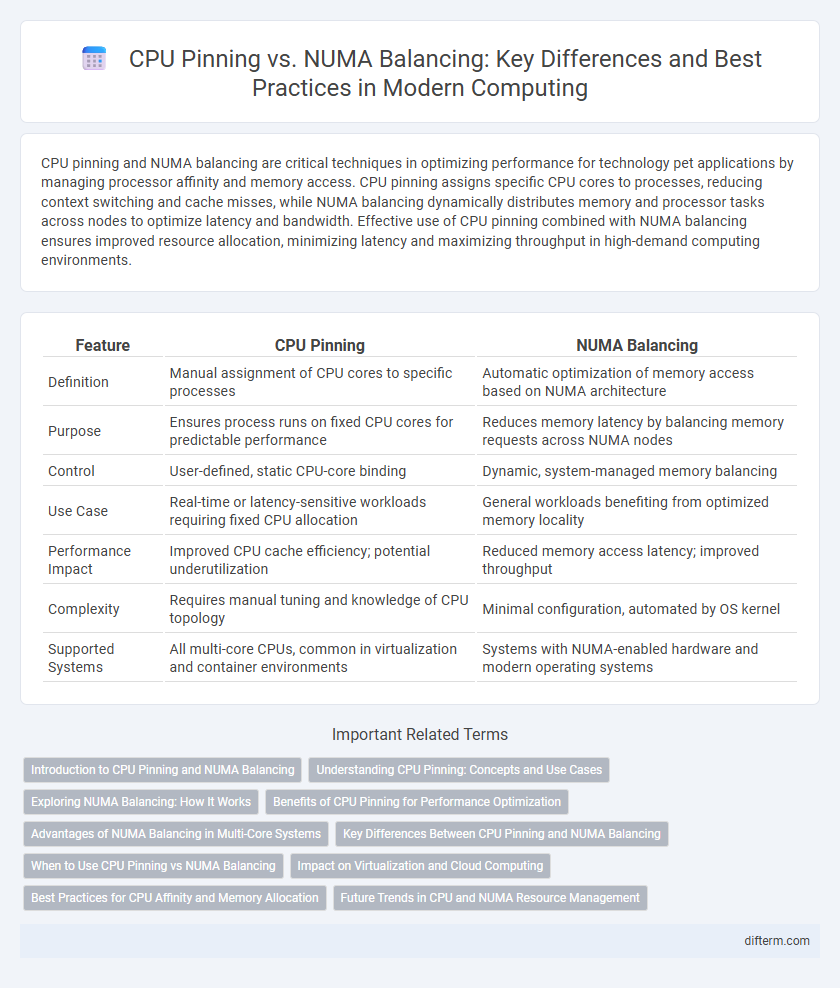

Table of Comparison

| Feature | CPU Pinning | NUMA Balancing |
|---|---|---|
| Definition | Binding a process or thread to a fixed set of CPU cores | Automatic kernel optimization of memory placement on NUMA hardware |
| Purpose | Keeps a process on fixed CPU cores for predictable performance | Reduces memory latency by migrating pages and tasks so that accesses stay on the local NUMA node |
| Control | User-defined, static CPU-core binding | Dynamic, system-managed page and task migration |
| Use Case | Real-time or latency-sensitive workloads requiring fixed CPU allocation | General workloads benefiting from optimized memory locality |
| Performance Impact | Better CPU cache reuse; possible underutilization of pinned cores | Lower memory access latency; improved throughput |
| Complexity | Requires manual tuning and knowledge of CPU topology | Minimal configuration; handled automatically by the OS kernel |
| Supported Systems | All multi-core CPUs; common in virtualization and container environments | Systems with NUMA hardware and an operating system that supports automatic balancing |

Introduction to CPU Pinning and NUMA Balancing

CPU pinning assigns specific processor cores to individual processes, improving cache utilization and reducing latency on multicore systems. NUMA balancing migrates memory pages and tasks across Non-Uniform Memory Access (NUMA) nodes at runtime so that a process's memory tends to reside on the node where it runs, shortening memory access times. Both techniques improve multicore efficiency, but CPU pinning is a static, explicit control, while NUMA balancing adapts to the workload as it runs.

Understanding CPU Pinning: Concepts and Use Cases

CPU pinning binds specific processes or threads to designated CPU cores so that the scheduler cannot migrate them, which keeps their caches warm and makes their performance more predictable on multi-core systems. It is particularly effective for latency-sensitive applications such as real-time data processing, high-frequency trading, and virtualization. Understanding the trade-offs between CPU pinning and NUMA balancing is crucial for allocating resources well and achieving low-latency execution on NUMA architectures.
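As a minimal sketch of pinning on Linux, the Python snippet below uses the standard library's `os.sched_setaffinity` and `os.sched_getaffinity`, which wrap the Linux `sched_setaffinity(2)` system call and are not available on every platform. The helper name `pin_current_process` is hypothetical, chosen only for illustration.

```python
import os
from typing import Iterable, Set

def pin_current_process(cores: Iterable[int]) -> Set[int]:
    """Restrict the calling process to the given CPU cores (Linux only).

    Returns the affinity mask the kernel reports after the change.
    """
    # pid 0 means "the caller". The call raises OSError if none of the
    # requested CPUs is usable (for example, under a cgroup cpuset).
    os.sched_setaffinity(0, set(cores))
    return os.sched_getaffinity(0)

if __name__ == "__main__":
    original = os.sched_getaffinity(0)
    first_cpu = min(original)  # a CPU this process is already allowed to use
    print("pinned to:", pin_current_process({first_cpu}))
    os.sched_setaffinity(0, original)  # restore the original mask
    print("restored to", len(original), "CPUs")
```

From the shell, `taskset -c 2 ./app` (from util-linux) starts `./app` pinned to core 2 in a similar way.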

Exploring NUMA Balancing: How It Works

NUMA balancing improves memory locality by migrating memory pages toward the node where the accessing task runs, and tasks toward the node that holds their memory, which reduces latency on multi-node systems. On Linux, the kernel samples access patterns by periodically marking portions of a task's address space inaccessible, so that the next access raises a NUMA hinting fault that reveals which node is using each page. This keeps cross-node memory traffic low. Unlike CPU pinning, which statically binds processes to specific CPUs, NUMA balancing adapts to changing workloads and system states.
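Whether the kernel is performing this automatic balancing can be checked from user space. The sketch below assumes a Linux kernel built with `CONFIG_NUMA_BALANCING`, which exposes the `kernel.numa_balancing` sysctl at `/proc/sys/kernel/numa_balancing`. The function names are hypothetical, and treating every nonzero value as "enabled" is a simplification, since newer kernels use additional nonzero values to select balancing modes.

```python
from pathlib import Path
from typing import Optional

# Location of the kernel.numa_balancing sysctl on Linux.
SYSCTL_PATH = Path("/proc/sys/kernel/numa_balancing")

def parse_numa_balancing(raw: str) -> bool:
    """Interpret the sysctl text: 0 means disabled, any nonzero value enabled."""
    return int(raw.strip()) != 0

def numa_balancing_enabled(path: Path = SYSCTL_PATH) -> Optional[bool]:
    """Return True/False, or None when the sysctl file does not exist."""
    try:
        return parse_numa_balancing(path.read_text())
    except FileNotFoundError:
        # Not Linux, or a kernel built without CONFIG_NUMA_BALANCING.
        return None

if __name__ == "__main__":
    state = numa_balancing_enabled()
    print("NUMA balancing:", {True: "on", False: "off", None: "unavailable"}[state])
```

An administrator with root privileges can switch the feature off with `sysctl -w kernel.numa_balancing=0`.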

Benefits of CPU Pinning for Performance Optimization

CPU pinning supports performance optimization by assigning specific processes to designated CPU cores, which avoids cross-core migrations and the cache misses they cause. Keeping a process on the same cores improves data locality and reduces latency on multi-core systems. On NUMA architectures, where memory access times depend on which node holds the data, pinning a process to the cores of one node also keeps its locally allocated memory close at hand. The trade-off is that pinned cores may sit idle while other cores are busy.

Advantages of NUMA Balancing in Multi-Core Systems

NUMA balancing improves memory access on multi-core systems by migrating tasks and memory pages between NUMA nodes at runtime, which reduces latency and eases pressure on the inter-node interconnect. Because it reduces cross-node memory access penalties automatically, it does not require the manual topology knowledge that static CPU pinning does. This runtime adjustment helps keep performance consistent as workloads change on NUMA-enabled architectures.
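One rough way to observe this effect is through the NUMA counters in `/proc/vmstat`, which kernels with automatic NUMA balancing populate with entries such as `numa_hint_faults`, `numa_hint_faults_local`, and `numa_pages_migrated`. The sketch below computes the share of hinting faults whose page was already on the local node. The helper names are hypothetical, and reading a higher local ratio as "better locality" is a heuristic, not an official kernel metric.

```python
from pathlib import Path
from typing import Dict

def parse_vmstat(text: str) -> Dict[str, int]:
    """Turn '/proc/vmstat'-style 'name value' lines into a dict of counters."""
    counters: Dict[str, int] = {}
    for line in text.splitlines():
        name, _, value = line.partition(" ")
        value = value.strip()
        if value.isdigit():  # keep only non-negative integer values
            counters[name] = int(value)
    return counters

def local_fault_ratio(counters: Dict[str, int]) -> float:
    """Fraction of NUMA hinting faults whose page was already on the local node."""
    total = counters.get("numa_hint_faults", 0)
    local = counters.get("numa_hint_faults_local", 0)
    return local / total if total else 0.0

if __name__ == "__main__":
    vmstat = Path("/proc/vmstat")
    if vmstat.exists():
        stats = parse_vmstat(vmstat.read_text())
        print("local hinting-fault ratio:", round(local_fault_ratio(stats), 3))
        print("pages migrated:", stats.get("numa_pages_migrated", 0))
```

These counters are cumulative since boot, so comparing two snapshots taken a few seconds apart gives a better picture of current behavior than a single reading.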

Key Differences Between CPU Pinning and NUMA Balancing

CPU pinning assigns specific tasks or processes to designated CPU cores, reducing migrations and cache misses, while NUMA balancing dynamically optimizes memory access by moving processes and memory pages so that each process runs close to the memory it uses most. CPU pinning offers predictable performance for real-time and latency-sensitive applications, whereas NUMA balancing improves overall system efficiency by minimizing cross-node memory access latency. The key difference is that CPU pinning fixes core assignments, while NUMA balancing adapts memory locality at runtime.

When to Use CPU Pinning vs NUMA Balancing

CPU pinning is ideal for latency-sensitive applications requiring dedicated core access, such as real-time computing or high-frequency trading, ensuring predictable CPU resource allocation. NUMA balancing optimizes memory affinity across CPU sockets dynamically, improving performance for workloads with variable memory access patterns like large-scale database processing or virtualization. Choose CPU pinning when precise CPU control outweighs flexibility, while NUMA balancing suits environments demanding adaptive memory locality and load distribution.

Impact on Virtualization and Cloud Computing

CPU pinning improves virtualization performance by binding virtual CPUs (vCPUs) to specific physical cores, reducing latency and improving cache utilization in cloud computing environments. NUMA balancing dynamically migrates memory pages and tasks, such as the host threads that run vCPUs, across NUMA nodes, improving efficiency on multi-socket servers. Both techniques shape resource allocation strategies: CPU pinning favors predictable performance, while NUMA balancing supports flexible, adaptive workload management in virtualized cloud infrastructure.
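In KVM guests managed by libvirt, vCPU pinning and guest memory placement are usually expressed in the domain XML through the `<cputune>`/`<vcpupin>` and `<numatune>`/`<memory>` elements. The Python sketch below generates those fragments with the standard library's `xml.etree.ElementTree`. The helper names and the example CPU and node numbers are hypothetical, and a real domain definition would also need a matching `<vcpu>` count and host CPUs that actually belong to the chosen node.

```python
import xml.etree.ElementTree as ET
from typing import Dict

def cputune_xml(pins: Dict[int, int]) -> str:
    """Return a libvirt <cputune> element pinning each guest vCPU to one host CPU."""
    cputune = ET.Element("cputune")
    for vcpu, host_cpu in sorted(pins.items()):
        # One <vcpupin> per guest vCPU; cpuset names the host CPU it may run on.
        ET.SubElement(cputune, "vcpupin", vcpu=str(vcpu), cpuset=str(host_cpu))
    return ET.tostring(cputune, encoding="unicode")

def numatune_xml(node: int) -> str:
    """Return a libvirt <numatune> element that allocates guest memory on one node."""
    numatune = ET.Element("numatune")
    # mode="strict": allocations must come from the listed node(s).
    ET.SubElement(numatune, "memory", mode="strict", nodeset=str(node))
    return ET.tostring(numatune, encoding="unicode")

if __name__ == "__main__":
    print(cputune_xml({0: 2, 1: 3}))
    print(numatune_xml(0))
```

Here `cputune_xml({0: 2, 1: 3})` pins guest vCPU 0 to host CPU 2 and vCPU 1 to host CPU 3, and `numatune_xml(0)` asks libvirt to allocate guest memory strictly from node 0.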

Best Practices for CPU Affinity and Memory Allocation

CPU pinning provides consistent processor affinity by binding processes to specific cores, reducing latency and improving cache utilization on NUMA systems. NUMA balancing migrates memory pages toward the CPUs that access them, reducing the bottlenecks caused by remote memory access. Combining the two, for example by pinning latency-critical processes to the cores of a single node while letting NUMA balancing manage the remaining workloads, can yield strong performance in latency-sensitive and high-throughput applications.
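A common way to combine pinning with memory locality is to restrict a process to the CPUs of one NUMA node, so that under Linux's default local-allocation policy its new memory is also allocated on that node (pages touched before pinning stay where they are). The sketch below assumes Linux sysfs, where `/sys/devices/system/node/node<N>/cpulist` lists a node's CPUs in a range format such as `0-3,8-11`. The helper names are hypothetical, and the parser handles only plain comma-separated ranges.

```python
import os
from pathlib import Path
from typing import Set

def parse_cpulist(text: str) -> Set[int]:
    """Parse a Linux CPU list such as '0-3,8-11' into a set of CPU ids."""
    cpus: Set[int] = set()
    for part in text.strip().split(","):
        if not part:
            continue  # empty list, e.g. a memory-only node
        if "-" in part:
            start, end = part.split("-")
            cpus.update(range(int(start), int(end) + 1))
        else:
            cpus.add(int(part))
    return cpus

def pin_to_node(node: int) -> Set[int]:
    """Pin the calling process to the CPUs of one NUMA node (Linux only)."""
    cpulist = Path(f"/sys/devices/system/node/node{node}/cpulist").read_text()
    # Intersect with the current mask so cgroup or container limits still apply.
    cpus = parse_cpulist(cpulist) & os.sched_getaffinity(0)
    if not cpus:
        raise ValueError(f"no usable CPUs on NUMA node {node}")
    os.sched_setaffinity(0, cpus)
    return cpus
```

From the shell, `numactl --cpunodebind=0 --membind=0 ./app` achieves a similar effect and additionally binds memory allocation to node 0 explicitly.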

Future Trends in CPU and NUMA Resource Management

Emerging work on CPU pinning and NUMA balancing emphasizes adaptive resource management for multi-core and multi-socket architectures. Machine-learning techniques are being explored for dynamically tuning CPU allocation and memory locality, with the goal of reducing latency while maximizing throughput. Future systems may combine predictive NUMA scheduling with real-time workload analysis to improve efficiency in heterogeneous computing environments.

difterm.com