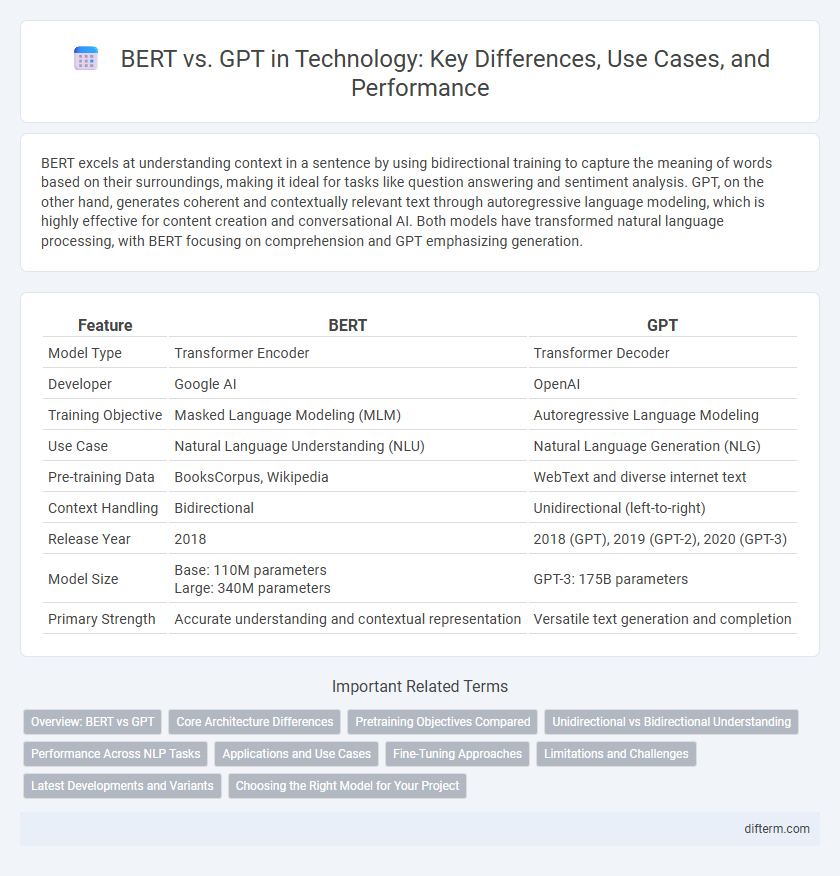

BERT excels at understanding context in a sentence by using bidirectional training to capture the meaning of words based on their surroundings, making it ideal for tasks like question answering and sentiment analysis. GPT, on the other hand, generates coherent and contextually relevant text through autoregressive language modeling, which is highly effective for content creation and conversational AI. Both models have transformed natural language processing, with BERT focusing on comprehension and GPT emphasizing generation.

Table of Comparison

| Feature | BERT | GPT |
|---|---|---|
| Model Type | Transformer Encoder | Transformer Decoder |
| Developer | Google AI | OpenAI |
| Training Objective | Masked Language Modeling (MLM) | Autoregressive Language Modeling |
| Use Case | Natural Language Understanding (NLU) | Natural Language Generation (NLG) |
| Pre-training Data | BooksCorpus, Wikipedia | WebText and diverse internet text |
| Context Handling | Bidirectional | Unidirectional (left-to-right) |
| Release Year | 2018 | 2018 (GPT), 2019 (GPT-2), 2020 (GPT-3) |
| Model Size | Base: 110M parameters; Large: 340M parameters | GPT-3: 175B parameters |
| Primary Strength | Accurate understanding and contextual representation | Versatile text generation and completion |

Overview: BERT vs GPT

BERT (Bidirectional Encoder Representations from Transformers) excels in understanding context within text by analyzing words bidirectionally, making it highly effective for tasks like question answering and named entity recognition. GPT (Generative Pre-trained Transformer), designed as a unidirectional decoder, specializes in generating coherent and contextually relevant text, powering applications such as chatbots and creative writing. BERT's strength lies in comprehension and interpretation, while GPT's architecture is optimized for natural language generation and predictive text.

Core Architecture Differences

BERT uses a bidirectional transformer encoder: every token attends to the tokens on both its left and its right, so each representation reflects the full sentence. It is pretrained with masked language modeling and next sentence prediction. In contrast, GPT uses a unidirectional transformer decoder in which each token attends only to the tokens before it, which suits generative tasks such as text completion. The core difference is BERT's bidirectional attention versus GPT's causal, autoregressive attention, and it drives their respective strengths in comprehension and generation.
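The difference in attention can be made concrete with a small sketch. The function names `attention_mask` and `visible_context` are hypothetical and chosen for illustration; neither model's real implementation is shown here. The sketch only builds the two mask shapes and lists which tokens a given position is allowed to see.

```python
def attention_mask(seq_len: int, causal: bool) -> list[list[int]]:
    """Build a seq_len x seq_len mask; mask[i][j] == 1 means position i may attend to j."""
    return [
        [1 if (not causal or j <= i) else 0 for j in range(seq_len)]
        for i in range(seq_len)
    ]


def visible_context(mask: list[list[int]], tokens: list[str], position: int) -> list[str]:
    """List the tokens that the given position is allowed to attend to."""
    return [tok for tok, allowed in zip(tokens, mask[position]) if allowed]


tokens = ["the", "bank", "of", "the", "river"]
full_mask = attention_mask(len(tokens), causal=False)   # encoder-style, as in BERT
causal_mask = attention_mask(len(tokens), causal=True)  # decoder-style, as in GPT

# Context available when representing the ambiguous word "bank" (position 1).
print(visible_context(full_mask, tokens, 1))    # ['the', 'bank', 'of', 'the', 'river']
print(visible_context(causal_mask, tokens, 1))  # ['the', 'bank']
```

With the full mask, the representation of "bank" can use "river" to resolve the ambiguity; with the causal mask it cannot, because "river" has not been produced yet.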

Pretraining Objectives Compared

BERT is pretrained with a Masked Language Model (MLM) objective: a random subset of input tokens (about 15% in the original setup) is hidden, and the model predicts them from the context on both sides. GPT is pretrained with an autoregressive objective: it predicts each next token from the tokens that precede it. As a result, BERT's representations suit tasks like classification, while GPT is optimized for text generation and continuation.
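A minimal sketch of how training examples differ under the two objectives is given below. It is a simplification: the helper names are hypothetical, tokenization is plain whitespace splitting, and every selected token is replaced by `[MASK]`, whereas the original BERT recipe replaces only most selected tokens with `[MASK]` and leaves some unchanged or swaps in random tokens.

```python
import random

MASK = "[MASK]"


def make_mlm_example(tokens, mask_prob=0.15, rng=None):
    """BERT-style: hide some tokens; targets map each hidden position to its original token."""
    rng = rng or random.Random(0)
    inputs, targets = [], {}
    for i, tok in enumerate(tokens):
        if rng.random() < mask_prob:
            inputs.append(MASK)
            targets[i] = tok
        else:
            inputs.append(tok)
    return inputs, targets


def make_autoregressive_examples(tokens):
    """GPT-style: each prefix is paired with the single token that follows it."""
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]


sentence = "the cat sat on the mat".split()

# A higher mask_prob than 0.15 is used here only so a short sentence shows some masks.
masked_inputs, masked_targets = make_mlm_example(sentence, mask_prob=0.3)
print(masked_inputs, masked_targets)

for prefix, next_token in make_autoregressive_examples(sentence):
    print(prefix, "->", next_token)
```

Note that the masked example keeps the words on both sides of each hidden token as input, while every autoregressive example contains only the words to the left of its target.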

Unidirectional vs Bidirectional Understanding

BERT reads context from both the left and the right of each word at once, which helps it resolve ambiguous language. GPT processes text from left to right, so the representation of a word cannot draw on the words that follow it; this matches how text is generated but gives each token less context than BERT has. The difference determines how well each model handles tasks that need deep contextual understanding versus tasks that need coherent text production.

Performance Across NLP Tasks

BERT's bidirectional encoding makes it highly effective for tasks like question answering and named entity recognition; at release it set state-of-the-art results on understanding benchmarks such as GLUE and SQuAD. GPT's strength lies in generative language modeling, which enables fluent text generation and strong performance in tasks such as summarization and conversational AI. In general, BERT-style models are favored for tasks requiring contextual comprehension, while GPT-style models are favored for coherence and creativity in generative applications.

Applications and Use Cases

BERT excels in natural language understanding tasks such as sentiment analysis, question answering, and named entity recognition due to its bidirectional context processing. GPT, with its generative capabilities, is widely applied in content creation, language translation, and conversational AI, enabling coherent and contextually rich text generation. Both models enhance applications in virtual assistants, search engines, and automated customer support by leveraging their strengths in comprehension and generation respectively.

Fine-Tuning Approaches

BERT fine-tuning adapts the model to a specific task by adding a small task-specific output layer on top of the pretrained encoder and training on a labeled dataset, which optimizes performance in natural language understanding tasks like question answering or sentiment analysis. GPT fine-tuning continues training the generative model on task-specific text, improving text completion and generation for that domain. Both rely on transfer learning but differ in what is transferred: BERT contributes bidirectional context representations, while GPT contributes autoregressive language generation.

Limitations and Challenges

BERT's encoder-only architecture excels at understanding context, but it is not trained to generate text token by token, so it is a poor fit for long-form or creative generation. GPT, while powerful at text generation, can produce factually incorrect, biased, or nonsensical output, because it is trained to predict plausible next tokens rather than to verify facts, and because it can reproduce biases present in its training data. Both models demand substantial computational resources and large datasets, which is a barrier for real-time applications and resource-constrained environments.
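To give a rough sense of the resource gap, the sketch below estimates the memory needed just to hold each model's weights, using the parameter counts from the comparison table. The helper `weight_memory_gb` is hypothetical, and the estimate assumes 4 bytes per parameter for 32-bit floats and 2 bytes for 16-bit floats; it ignores activations, optimizer state, and any framework overhead, so real requirements are higher.

```python
# Bytes used to store one parameter at each numeric precision.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2}


def weight_memory_gb(num_params: float, dtype: str = "fp32") -> float:
    """Estimate memory for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


# Parameter counts as listed in the comparison table.
models = {"BERT-Base": 110e6, "BERT-Large": 340e6, "GPT-3": 175e9}

for name, num_params in models.items():
    fp32 = weight_memory_gb(num_params, "fp32")
    fp16 = weight_memory_gb(num_params, "fp16")
    print(f"{name}: {fp32:.2f} GB (fp32), {fp16:.2f} GB (fp16)")
```

Under these assumptions the BERT weights fit comfortably in the memory of a single consumer GPU, while the GPT-3 weights alone run to hundreds of gigabytes and have to be split across many accelerators.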

Latest Developments and Variants

Variants of BERT include RoBERTa, which refines the pre-training recipe with more data and longer training, and ALBERT, which shares parameters across layers to reduce model size; both aim at better or more efficient natural language understanding. On the GPT side, GPT-4 adds multimodal capabilities and a larger context window, enabling more coherent and context-aware text generation. These models continue to push the boundaries of transformer architectures in natural language processing applications.

Choosing the Right Model for Your Project

BERT excels in understanding context within sentences, making it ideal for tasks like sentiment analysis, question answering, and named entity recognition that require deep comprehension of language nuances. GPT models are designed for generating coherent and contextually relevant text, suitable for content creation, chatbots, and language translation requiring fluid and creative output. Selecting between BERT and GPT depends on whether your project prioritizes interpretative understanding or generative capabilities, with factors like dataset size, computational resources, and application goals guiding the optimal choice.
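As a rough illustration of this rule of thumb, the sketch below maps the tasks named in this article to a model family. The lookup table and the function `suggest_model_family` are hypothetical; they encode only the article's own pairing of tasks and models, and a real decision would also weigh dataset size, compute budget, and evaluation results.

```python
# Task-to-family pairs taken from the tasks named in this article.
TASK_TO_FAMILY = {
    "sentiment analysis": "BERT",
    "question answering": "BERT",
    "named entity recognition": "BERT",
    "content creation": "GPT",
    "chatbots": "GPT",
    "language translation": "GPT",
}


def suggest_model_family(task: str) -> str:
    """Return the family this article associates with a task, or a fallback if unknown."""
    return TASK_TO_FAMILY.get(task.strip().lower(), "unclear: evaluate both")


print(suggest_model_family("Sentiment Analysis"))  # BERT
print(suggest_model_family("chatbots"))            # GPT
print(suggest_model_family("speech recognition"))  # unclear: evaluate both
```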

BERT vs GPT Infographic

difterm.com