Bandwidth determines the amount of data that can be transmitted over a network in a given time, while latency measures the delay data experiences on its way from source to destination. Low latency is crucial for real-time applications such as video calls and online gaming, where quick response times improve the user experience. High bandwidth supports larger data volumes and faster downloads but does not compensate for high latency in time-sensitive tasks.

Table of Comparison

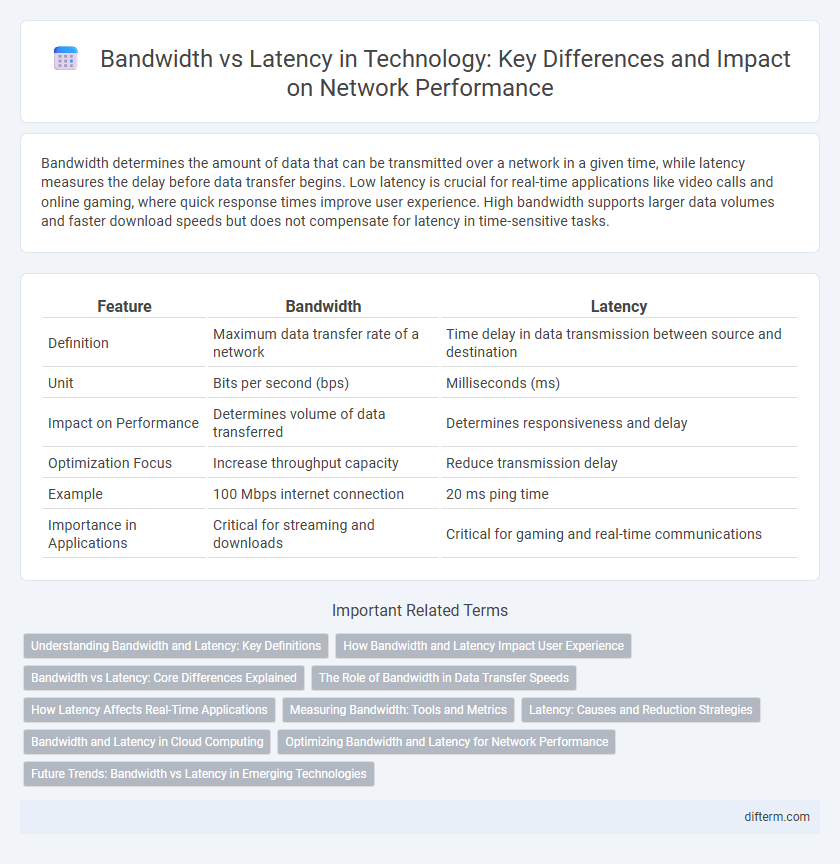

| Feature | Bandwidth | Latency |
|---|---|---|
| Definition | Maximum data transfer rate of a network | Time delay in data transmission between source and destination |
| Unit | Bits per second (bps) | Milliseconds (ms) |
| Impact on Performance | Determines volume of data transferred | Determines responsiveness and delay |
| Optimization Focus | Increase throughput capacity | Reduce transmission delay |
| Example | 100 Mbps internet connection | 20 ms ping time |
| Importance in Applications | Critical for streaming and downloads | Critical for gaming and real-time communications |

Understanding Bandwidth and Latency: Key Definitions

Bandwidth refers to the maximum data transfer rate of a network or internet connection, typically measured in megabits per second (Mbps), and indicates how much data the connection can carry at once. Latency measures the delay before a transfer of data begins following an instruction, usually expressed in milliseconds (ms), and it affects the responsiveness of applications such as gaming and video conferencing. Understanding the distinction between bandwidth and latency is crucial for optimizing network performance and ensuring a seamless user experience.

How Bandwidth and Latency Impact User Experience

Higher bandwidth enables faster data transfer rates, allowing users to stream high-definition videos and download large files smoothly. Low latency reduces the delay between a user's action and the system's response, which is critical for real-time applications like online gaming and video conferencing. Both sufficient bandwidth and minimal latency are essential to ensure seamless, responsive, and high-quality digital experiences.

Bandwidth vs Latency: Core Differences Explained

Bandwidth measures the maximum data transfer rate of a network, indicating how much information can be sent over a connection per second, typically expressed in Mbps or Gbps. Latency refers to the time delay between a data packet being sent and received, measured in milliseconds, and it governs how quickly real-time applications respond. The two are largely independent: high bandwidth supports large data volumes, while low latency ensures fast response times in gaming, video calls, and interactive cloud applications, so optimizing a network requires attention to both.
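To make the difference concrete, here is a minimal Python sketch of an idealized transfer: total time is the one-way latency plus the time needed to push the bits onto the link (size divided by bandwidth). The function name `transfer_time_ms` is hypothetical, and the model deliberately ignores protocol overhead, handshakes, congestion control, and packet loss.

```python
def transfer_time_ms(size_bytes: int, bandwidth_mbps: float, latency_ms: float) -> float:
    """Idealized delivery time: one-way latency plus serialization time.

    Assumption: no protocol overhead, no slow start, no packet loss.
    """
    bits = size_bytes * 8
    # Time to put every bit on the wire at the link's bandwidth, in ms.
    serialization_ms = bits / (bandwidth_mbps * 1_000_000) * 1000
    return latency_ms + serialization_ms


if __name__ == "__main__":
    for label, size in [("1 KB chat message", 1_000), ("100 MB download", 100_000_000)]:
        for mbps in (10, 1000):
            t = transfer_time_ms(size, mbps, latency_ms=20)
            print(f"{label} at {mbps} Mbps, 20 ms latency: {t:,.1f} ms")
```

With 20 ms of latency, the 1 KB message takes about 20.8 ms at 10 Mbps and about 20.0 ms at 1000 Mbps, so a 100x bandwidth upgrade barely helps. The 100 MB download, by contrast, drops from roughly 80 s to roughly 0.8 s, because its time is dominated by the size/bandwidth term.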

The Role of Bandwidth in Data Transfer Speeds

Bandwidth determines the maximum amount of data that can be transmitted over a network connection in a given time, directly influencing data transfer speeds. High bandwidth allows larger volumes of data to move simultaneously, which is essential for streaming high-definition video, downloading large files, and moving data to and from cloud services. While latency determines how long data takes to reach its destination, bandwidth governs how much data can flow per second; the rate actually achieved (throughput) may be lower than this maximum.

How Latency Affects Real-Time Applications

Latency critically impacts real-time applications such as video conferencing, online gaming, and VoIP, because delays disrupt synchronization and the user experience. Even with high bandwidth, high latency results in lag, and large or fluctuating delays can cause audio dropouts and frozen video frames, undermining the effectiveness of these interactive technologies. Minimizing latency is essential for maintaining seamless communication and responsiveness in real-time digital environments.
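Lag in interactive applications is easier to reason about when a round-trip time is expressed in rendered frames. The sketch below, with a hypothetical function name, assumes a client that waits for the server's response before showing the result (no client-side prediction). Bandwidth does not appear in the calculation at all.

```python
def frames_of_delay(rtt_ms: float, fps: float = 60.0) -> float:
    """Number of frames rendered while waiting for one network round trip.

    Assumption: the client shows the result only after the server replies.
    """
    frame_time_ms = 1000.0 / fps  # duration of a single frame
    return rtt_ms / frame_time_ms


if __name__ == "__main__":
    for rtt in (20, 50, 150):
        print(f"{rtt} ms round trip ~ {frames_of_delay(rtt):.1f} frames at 60 fps")
```

At 60 fps, a 50 ms round trip already corresponds to about 3 frames of delay, and 150 ms to about 9, however large the connection's bandwidth.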

Measuring Bandwidth: Tools and Metrics

Bandwidth measurement relies on tools such as iPerf and Speedtest, which measure achievable data rates in Mbps or Gbps, and NetFlow, which records traffic flows to show how much of a network's capacity is in use. Metrics such as throughput (the data rate actually achieved, as opposed to the nominal bandwidth) and packet loss rate help evaluate how efficiently bandwidth is used under varying conditions. Accurate bandwidth measurement enables optimization of network performance and informs decisions about infrastructure upgrades.
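Whatever tool is used, a throughput figure boils down to bits moved divided by elapsed time, and a common pitfall is mixing bytes (file sizes) with bits (link rates). The sketch below uses hypothetical helper names: `throughput_mbps` converts a byte count and duration into Mbps, and `measure_loopback_mbps` times a one-way stream over a local socket pair, loosely similar to how a tool like iPerf times data sent between two endpoints. Because the data never leaves the machine, the loopback number reflects local CPU and kernel speed, not the bandwidth of a real network.

```python
import socket
import threading
import time


def throughput_mbps(num_bytes: int, seconds: float) -> float:
    """Convert bytes moved in `seconds` into megabits per second (1 Mb = 10**6 bits)."""
    if seconds <= 0:
        raise ValueError("seconds must be positive")
    return num_bytes * 8 / seconds / 1_000_000


def measure_loopback_mbps(total_bytes: int = 50_000_000, chunk: int = 65_536) -> float:
    """Time a one-way transfer over a local socket pair (hypothetical helper).

    Measures local kernel/CPU speed, not the bandwidth of any real network link.
    """
    sender, receiver = socket.socketpair()
    received = 0

    def drain() -> None:
        # Read concurrently so the sender never blocks on a full socket buffer.
        nonlocal received
        while received < total_bytes:
            data = receiver.recv(chunk)
            if not data:
                break
            received += len(data)

    reader = threading.Thread(target=drain)
    payload = b"\x00" * chunk
    try:
        start = time.perf_counter()
        reader.start()
        sent = 0
        while sent < total_bytes:
            n = min(chunk, total_bytes - sent)
            sender.sendall(payload[:n])
            sent += n
        reader.join()
        elapsed = time.perf_counter() - start
    finally:
        sender.close()
        receiver.close()
    return throughput_mbps(received, elapsed)


if __name__ == "__main__":
    print(f"{measure_loopback_mbps():,.0f} Mbps over local loopback")
```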

Latency: Causes and Reduction Strategies

Latency, the time delay in data transmission, is primarily caused by factors such as signal propagation distance, network congestion, and processing delays at routers and switches. Strategies to reduce latency include using edge computing to process data closer to the source, optimizing routing paths, and deploying faster hardware like low-latency switches and fiber-optic cables. Minimizing latency is critical for real-time applications such as online gaming, video conferencing, and autonomous vehicle communication.
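The causes listed above map onto the usual breakdown of one-way delay into propagation (distance), transmission (packet size over bandwidth), queuing (congestion), and processing (routers and switches). The sketch below adds these components up; the helper names are hypothetical, and the signal speed in fiber is an assumed round figure of 2 × 10^8 m/s, roughly two-thirds of the speed of light in a vacuum.

```python
# Assumed signal speed in optical fiber: about 2e8 m/s (roughly 2/3 of c).
SPEED_IN_FIBER_M_PER_S = 2.0e8


def propagation_ms(distance_km: float) -> float:
    """Time for the signal to travel the physical path, in ms."""
    return distance_km * 1000 / SPEED_IN_FIBER_M_PER_S * 1000


def transmission_ms(packet_bytes: int, bandwidth_mbps: float) -> float:
    """Time to push every bit of the packet onto the link, in ms."""
    return packet_bytes * 8 / (bandwidth_mbps * 1_000_000) * 1000


def one_way_latency_ms(
    distance_km: float,
    packet_bytes: int,
    bandwidth_mbps: float,
    queuing_ms: float = 0.0,     # waiting in buffers, grows with congestion
    processing_ms: float = 0.0,  # header inspection and forwarding in devices
) -> float:
    """Sum of the four classic delay components along a path."""
    return (
        propagation_ms(distance_km)
        + transmission_ms(packet_bytes, bandwidth_mbps)
        + queuing_ms
        + processing_ms
    )


if __name__ == "__main__":
    for mbps in (100, 10_000):
        total = one_way_latency_ms(1000, 1500, mbps, queuing_ms=2.0, processing_ms=0.5)
        print(f"1,000 km, 1,500-byte packet at {mbps} Mbps: {total:.3f} ms")
```

For 1,000 km of fiber and a 1,500-byte packet on a 100 Mbps link, propagation contributes 5 ms while transmission contributes only 0.12 ms. A faster link shrinks the second term but not the first, which is why shortening the path with edge computing or better routing is often the bigger lever.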

Bandwidth and Latency in Cloud Computing

Bandwidth in cloud computing determines the volume of data that can be transferred between clients and servers within a specific time frame, directly impacting the speed of data-intensive applications. Latency measures the delay before data begins to transfer after a request is made, influencing the responsiveness of cloud services, especially in real-time applications. Optimizing both bandwidth and latency is crucial for enhancing cloud performance, ensuring efficient data synchronization, and delivering seamless user experiences.
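One place where the two quantities interact directly is window-based transport such as TCP: a sender may have at most one window of unacknowledged data in flight per round trip, so a single connection's throughput is capped at roughly window size divided by RTT, and keeping a link full requires a window of at least bandwidth × RTT (the bandwidth-delay product). The sketch below uses hypothetical function names and ignores slow start and packet loss.

```python
def bdp_bytes(bandwidth_mbps: float, rtt_ms: float) -> float:
    """Bandwidth-delay product: bytes that must be in flight to keep the link busy."""
    return bandwidth_mbps * 1_000_000 * (rtt_ms / 1000) / 8


def window_limited_mbps(window_bytes: int, rtt_ms: float) -> float:
    """Upper bound on one connection's throughput: one window per round trip."""
    return window_bytes * 8 / (rtt_ms / 1000) / 1_000_000


if __name__ == "__main__":
    print(f"64 KiB window, 50 ms RTT: at most {window_limited_mbps(65_536, 50):.1f} Mbps")
    print(f"BDP for 1 Gbps x 50 ms: {bdp_bytes(1000, 50):,.0f} bytes")
```

With a classic 64 KiB TCP window (no window scaling) and a 50 ms round trip to a distant cloud region, a single connection tops out near 10.5 Mbps no matter how fast the link is; filling a 1 Gbps path at that RTT needs about 6.25 MB in flight.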

Optimizing Bandwidth and Latency for Network Performance

Optimizing bandwidth and latency is crucial for enhancing network performance, as bandwidth determines the data transfer capacity while latency impacts the speed of data delivery. Techniques such as traffic shaping, Quality of Service (QoS) policies, and the deployment of edge computing can effectively balance bandwidth utilization and reduce latency. Implementing efficient routing protocols and upgrading infrastructure to support higher throughput also play significant roles in minimizing delays and maximizing network efficiency.
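Traffic shaping is commonly implemented with a token bucket: tokens accumulate at the configured rate up to a burst limit, and a packet may be sent only when enough tokens are available; a shaper holds back packets that arrive early rather than dropping them. The class below is a minimal illustrative sketch of that idea, not the algorithm of any particular router or QoS product. The class name, byte-denominated tokens, and explicit `now` timestamps (used to keep it deterministic) are assumptions.

```python
class TokenBucket:
    """Minimal token-bucket shaper (illustrative); tokens are measured in bytes."""

    def __init__(self, rate_bytes_per_s: float, capacity_bytes: float) -> None:
        if rate_bytes_per_s <= 0 or capacity_bytes <= 0:
            raise ValueError("rate and capacity must be positive")
        self.rate = rate_bytes_per_s
        self.capacity = capacity_bytes
        self.tokens = capacity_bytes  # start full, allowing an initial burst
        self.last = 0.0               # time of the last refill, in seconds

    def _refill(self, now: float) -> None:
        # Add tokens for the time elapsed since the last call, capped at capacity.
        elapsed = max(0.0, now - self.last)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.last = now

    def try_send(self, size: int, now: float) -> bool:
        """Consume tokens and return True if `size` bytes may be sent at `now`."""
        self._refill(now)
        if size <= self.tokens:
            self.tokens -= size
            return True
        return False

    def wait_time(self, size: int, now: float) -> float:
        """Seconds a shaper would hold a `size`-byte packet before sending it."""
        if size > self.capacity:
            raise ValueError("packet larger than the bucket can ever hold")
        self._refill(now)
        return max(0.0, (size - self.tokens) / self.rate)
```

For example, a bucket with a rate of 100 bytes/s and a capacity of 1,000 bytes lets an 800-byte burst through immediately, rejects a 300-byte packet at the same instant (only 200 tokens remain), and accepts it one second later once 100 more tokens have accrued. The capacity controls how bursty traffic may be, while the rate caps its long-term average.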

Future Trends: Bandwidth vs Latency in Emerging Technologies

Emerging technologies such as 5G, edge computing, and augmented reality emphasize reducing latency to enhance real-time responsiveness, while advances in fiber optics and satellite internet continue to push bandwidth capabilities to new heights. Quantum networking is an active research area, but it cannot transmit information faster than light, so it will not remove the propagation delay that sets a lower bound on latency. Balancing ultra-low latency with expanding bandwidth remains critical for applications such as autonomous vehicles and immersive VR experiences.

difterm.com