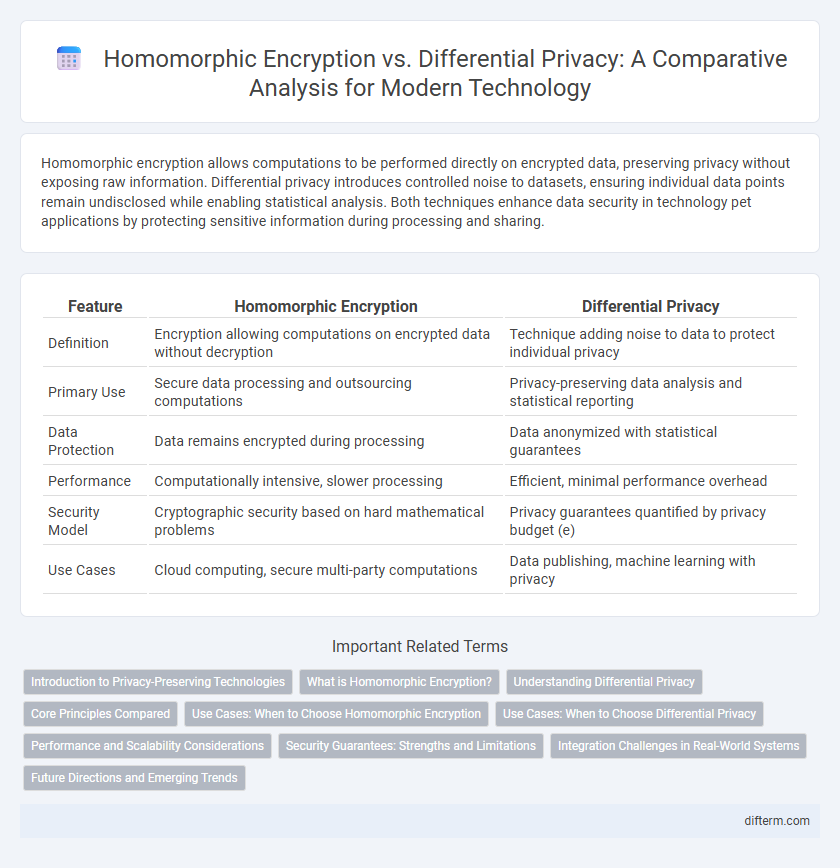

Homomorphic encryption allows computations to be performed directly on encrypted data, so raw values are never exposed to the party doing the computing. Differential privacy adds calibrated random noise to query results or released statistics, limiting what can be learned about any individual while still supporting aggregate analysis. Both are privacy-enhancing technologies (PETs) that protect sensitive information during processing and sharing.

Table of Comparison

| Feature | Homomorphic Encryption | Differential Privacy |
|---|---|---|
| Definition | Encryption allowing computations on encrypted data without decryption | Technique adding calibrated noise to outputs to protect individual privacy |
| Primary Use | Secure data processing and outsourcing computations | Privacy-preserving data analysis and statistical reporting |
| Data Protection | Data remains encrypted during processing | Released results are perturbed, with statistical guarantees |
| Performance | Computationally intensive, slower processing | Efficient, minimal performance overhead |
| Security Model | Cryptographic security based on hard mathematical problems | Privacy guarantees quantified by privacy budget (ε) |
| Use Cases | Cloud computing, secure multi-party computations | Data publishing, machine learning with privacy |

Introduction to Privacy-Preserving Technologies

Homomorphic encryption allows computations on encrypted data without revealing the underlying information, enabling secure data processing in cloud environments. Differential privacy introduces carefully calibrated noise to datasets, ensuring individual data points remain indistinguishable while providing accurate aggregate insights. Both technologies play critical roles in enhancing data privacy and security in the era of big data and AI-driven applications.

What is Homomorphic Encryption?

Homomorphic encryption is a cryptographic technique that enables computations on encrypted data without decrypting it, preserving data confidentiality throughout processing. Operations applied to ciphertexts produce encrypted results that, when decrypted, match the outcome of the same operations on the original plaintext. Schemes differ in what they support: partially homomorphic schemes allow either addition or multiplication on ciphertexts, while fully homomorphic schemes allow both, which is enough to evaluate arbitrary computations. This makes the technique valuable for secure data analysis in cloud computing, where computations can be outsourced without handing over the decryption key.
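To make the idea of computing on ciphertexts concrete, here is a minimal sketch of an additively homomorphic scheme. It uses a toy version of the Paillier cryptosystem, which the text above does not name, so treat the scheme choice as an assumption. The primes, function names, and values are illustrative only, and the key size is far too small for real use.

```python
import math
import secrets

def keygen(p: int = 1789, q: int = 1861):
    """Toy Paillier key generation. p and q are tiny demo primes, NOT secure."""
    n = p * q
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)  # lcm(p-1, q-1)
    g = n + 1                 # common simplification for the generator
    mu = pow(lam, -1, n)      # modular inverse; valid because g = n + 1
    return (n, g), (lam, mu)

def encrypt(pub, m: int) -> int:
    """Encrypt an integer 0 <= m < n with fresh randomness r."""
    n, g = pub
    n2 = n * n
    while True:
        r = secrets.randbelow(n - 1) + 1
        if math.gcd(r, n) == 1:
            break
    return (pow(g, m, n2) * pow(r, n, n2)) % n2

def decrypt(pub, priv, c: int) -> int:
    n, _ = pub
    lam, mu = priv
    l_value = (pow(c, lam, n * n) - 1) // n   # the L function: (x - 1) / n
    return (l_value * mu) % n

def add_encrypted(pub, c1: int, c2: int) -> int:
    """Multiplying ciphertexts adds the underlying plaintexts (mod n)."""
    n, _ = pub
    return (c1 * c2) % (n * n)

pub, priv = keygen()
c_sum = add_encrypted(pub, encrypt(pub, 42), encrypt(pub, 58))
print(decrypt(pub, priv, c_sum))  # 100
```

The party calling `add_encrypted` needs only the public key, so it can produce the encrypted sum without ever seeing 42, 58, or 100.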

Understanding Differential Privacy

Differential privacy protects user data by adding calibrated noise to query results or released statistics, enabling statistical analysis without revealing individual information. It provides quantifiable privacy guarantees, measured by a privacy budget parameter, epsilon (ε), which controls the trade-off between data utility and privacy: a smaller ε means more noise and stronger privacy, a larger ε means less noise and weaker privacy. Unlike homomorphic encryption, which secures data during computation, differential privacy focuses on limiting re-identification risk in released outputs.
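One common way to add calibrated noise is the Laplace mechanism, sketched below for a simple counting query. The function names and sample data are hypothetical, and the code assumes a sensitivity of 1 (adding or removing one person changes a count by at most 1). It uses Python's general-purpose `random` module and ordinary floating point, so it is an illustration rather than a hardened implementation.

```python
import random

def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    rate = 1.0 / scale
    return rng.expovariate(rate) - rng.expovariate(rate)

def private_count(records, predicate, epsilon: float, rng=None) -> float:
    """Return a noisy count of records matching predicate.

    Assumes sensitivity 1, so the Laplace noise scale is 1 / epsilon.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    rng = rng or random.Random()
    true_count = sum(1 for record in records if predicate(record))
    sensitivity = 1.0
    return true_count + laplace_noise(sensitivity / epsilon, rng)

# Hypothetical data: ages of survey respondents.
ages = [23, 35, 41, 29, 52, 67, 38, 44, 31, 58]
noisy = private_count(ages, lambda age: age >= 40, epsilon=1.0)
print(f"noisy count of respondents aged 40+: {noisy:.2f}")
```

Each call draws fresh noise, so repeated queries return different answers, and each one consumes part of the privacy budget.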

Core Principles Compared

Homomorphic encryption enables computation on encrypted data without decrypting it, preserving data confidentiality while allowing meaningful analysis. Differential privacy injects carefully calibrated noise into datasets or query results to protect individual privacy by preventing re-identification. Both techniques maintain data security but operate on fundamentally different principles: homomorphic encryption focuses on secure computation, whereas differential privacy emphasizes statistical privacy guarantees.
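The difference in principle can be stated in two standard textbook formulas. The notation is an assumption introduced here for illustration: Enc and Dec are encryption and decryption under keys pk and sk, ⊕ is an operation on ciphertexts, M is a randomized mechanism, and D and D' are datasets that differ in one individual's record.

```latex
% Additive homomorphic property: combining ciphertexts with \oplus
% yields an encryption of the sum of the plaintexts.
\mathrm{Dec}_{sk}\bigl(\mathrm{Enc}_{pk}(a) \oplus \mathrm{Enc}_{pk}(b)\bigr) = a + b

% \varepsilon-differential privacy: for all neighboring datasets D, D'
% and every set of possible outputs S,
\Pr[M(D) \in S] \le e^{\varepsilon} \, \Pr[M(D') \in S]
```

The first is a correctness statement about computing exact results under encryption. The second bounds how much any one person's data can shift the distribution of a deliberately randomized output.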

Use Cases: When to Choose Homomorphic Encryption

Homomorphic encryption is ideal for use cases requiring secure computation on encrypted data, such as privacy-preserving machine learning, secure voting systems, and confidential financial analysis. It enables data processing without revealing sensitive information, making it suitable for healthcare data sharing and cloud computing scenarios where data confidentiality is critical. Organizations should choose homomorphic encryption when the priority is to perform computations on sensitive data while maintaining end-to-end encryption.

Use Cases: When to Choose Differential Privacy

Differential privacy is ideal for scenarios requiring statistical analysis on aggregated data without exposing individual information, such as in large-scale census data or user behavior analytics. It ensures privacy by adding controlled noise to datasets, enabling secure sharing across sectors like healthcare and finance while maintaining data utility. Unlike homomorphic encryption, which secures computations on encrypted data, differential privacy excels when releasing summarized insights rather than performing complex encrypted data processing.
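For user behavior analytics, one option is to add the noise on each user's device before any data is collected. The sketch below uses randomized response, a classic local differential privacy technique that the text above does not mention, so treat it as one possible instance rather than the method the section describes. Function names and the simulated population are hypothetical.

```python
import math
import random

def truth_probability(epsilon: float) -> float:
    """Probability of reporting the true answer under epsilon-local DP."""
    return math.exp(epsilon) / (1.0 + math.exp(epsilon))

def randomized_response(truth: bool, epsilon: float, rng: random.Random) -> bool:
    """Report the true bit with probability p, otherwise report the flipped bit."""
    return truth if rng.random() < truth_probability(epsilon) else not truth

def estimate_rate(reports, epsilon: float) -> float:
    """Debias the observed 'yes' rate to estimate the true population rate."""
    p = truth_probability(epsilon)
    observed = sum(reports) / len(reports)
    return (observed - (1.0 - p)) / (2.0 * p - 1.0)

# Simulated population in which 30% of users have some sensitive attribute.
rng = random.Random(7)
population = [rng.random() < 0.30 for _ in range(100_000)]
reports = [randomized_response(bit, epsilon=1.0, rng=rng) for bit in population]
print(f"estimated rate: {estimate_rate(reports, epsilon=1.0):.3f}")
```

No single report can be trusted, which is what protects the individual, yet the aggregate estimate comes out close to the true rate when the population is large.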

Performance and Scalability Considerations

Homomorphic encryption offers strong data security by enabling computations on encrypted data but faces significant performance bottlenecks due to high computational overhead and increased resource consumption, limiting its scalability in real-time applications. Differential privacy provides scalable solutions for large datasets by injecting controlled noise to preserve privacy, achieving faster processing speeds with lower computational demands but with trade-offs in accuracy and data utility. Organizations must balance the computational intensity of homomorphic encryption against the efficiency and approximate nature of differential privacy when designing privacy-preserving technologies for scalable, high-performance environments.
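The accuracy side of that trade-off can be quantified for a simple case. Assuming the Laplace mechanism (an assumption, since the text does not fix a mechanism), the noise scale is the query sensitivity divided by ε, and the expected absolute error equals that scale. The helper names below are hypothetical.

```python
import math

def laplace_scale(sensitivity: float, epsilon: float) -> float:
    """Noise scale b for the Laplace mechanism: b = sensitivity / epsilon."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    return sensitivity / epsilon

def expected_abs_error(sensitivity: float, epsilon: float) -> float:
    """For Laplace(0, b), the mean absolute deviation E|X| equals b."""
    return laplace_scale(sensitivity, epsilon)

def noise_std(sensitivity: float, epsilon: float) -> float:
    """Standard deviation of Laplace(0, b) is b * sqrt(2)."""
    return laplace_scale(sensitivity, epsilon) * math.sqrt(2.0)

# Sensitivity 1 corresponds to a counting query.
for eps in (0.1, 0.5, 1.0, 5.0):
    print(f"epsilon={eps:<4} expected |error|={expected_abs_error(1.0, eps):6.2f} "
          f"std={noise_std(1.0, eps):6.2f}")
```

Halving ε doubles the expected error, and the error does not depend on the dataset size, which is why the relative cost of the noise shrinks as the number of records grows.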

Security Guarantees: Strengths and Limitations

Homomorphic encryption ensures data confidentiality by enabling computations on encrypted data without exposing raw inputs, so a compromise of the party performing the computation reveals only ciphertext. Differential privacy protects individual information by adding controlled noise to query results, bounding how much any single record can influence what is released and thereby limiting re-identification risk in aggregated datasets. The two also protect different things: homomorphic encryption does not limit what the decrypted result reveals about individuals, and differential privacy does not hide raw data from whoever computes the statistics. In addition, homomorphic encryption faces challenges in computational efficiency, while differential privacy's noise injection can reduce data utility.

Integration Challenges in Real-World Systems

Homomorphic encryption is hard to integrate because of its computational complexity: it requires significant processing power and can introduce latency in data workflows. Differential privacy is hard to integrate because privacy parameters must be tuned to balance data utility against privacy guarantees, which often demands extensive customization for diverse datasets. Combining both techniques complicates system design further, as developers must address interoperability issues, performance trade-offs, and compliance with regulatory standards across heterogeneous platforms.
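Part of the tuning problem is bookkeeping: every query against the same data consumes some of the privacy budget. The sketch below tracks spending under basic sequential composition, where the ε values of successive queries simply add up. The class name and the budget values are hypothetical, and real deployments may use tighter composition accounting.

```python
class PrivacyBudget:
    """Tracks cumulative epsilon under basic sequential composition."""

    def __init__(self, total_epsilon: float):
        if total_epsilon <= 0:
            raise ValueError("total_epsilon must be positive")
        self.total = total_epsilon
        self.spent = 0.0

    @property
    def remaining(self) -> float:
        return max(self.total - self.spent, 0.0)

    def spend(self, epsilon: float) -> None:
        """Reserve epsilon for one query, or refuse if the budget would be exceeded."""
        if epsilon <= 0:
            raise ValueError("epsilon must be positive")
        # Small tolerance so float rounding does not block spending the exact remainder.
        if self.spent + epsilon > self.total + 1e-12:
            raise RuntimeError("privacy budget exhausted")
        self.spent += epsilon

budget = PrivacyBudget(total_epsilon=1.0)
budget.spend(0.4)   # first query
budget.spend(0.5)   # second query
print(f"remaining budget: {budget.remaining:.2f}")
```

A third query requesting ε = 0.2 would raise `RuntimeError` here, which forces the system designer to decide up front how the budget is split across analyses.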

Future Directions and Emerging Trends

Homomorphic encryption is advancing toward more efficient schemes that enable complex computations on encrypted data with reduced latency and resource consumption, crucial for secure cloud computing and AI applications. Differential privacy is evolving through adaptive algorithms that balance data utility and privacy by dynamically tuning noise parameters based on real-time data sensitivity. The convergence of these technologies alongside federated learning and secure multi-party computation promises robust privacy-preserving frameworks for next-generation data analytics and intelligent systems.


difterm.com
