Edge computing processes data directly on devices or local edge nodes to reduce latency and bandwidth usage, enabling real-time responses. Fog computing extends this concept by creating a distributed network of intermediate nodes between edge devices and the cloud, enhancing data management and scalability. Both technologies optimize data processing closer to the source but differ in architecture and deployment, with fog computing offering a hierarchical approach for broader network integration.

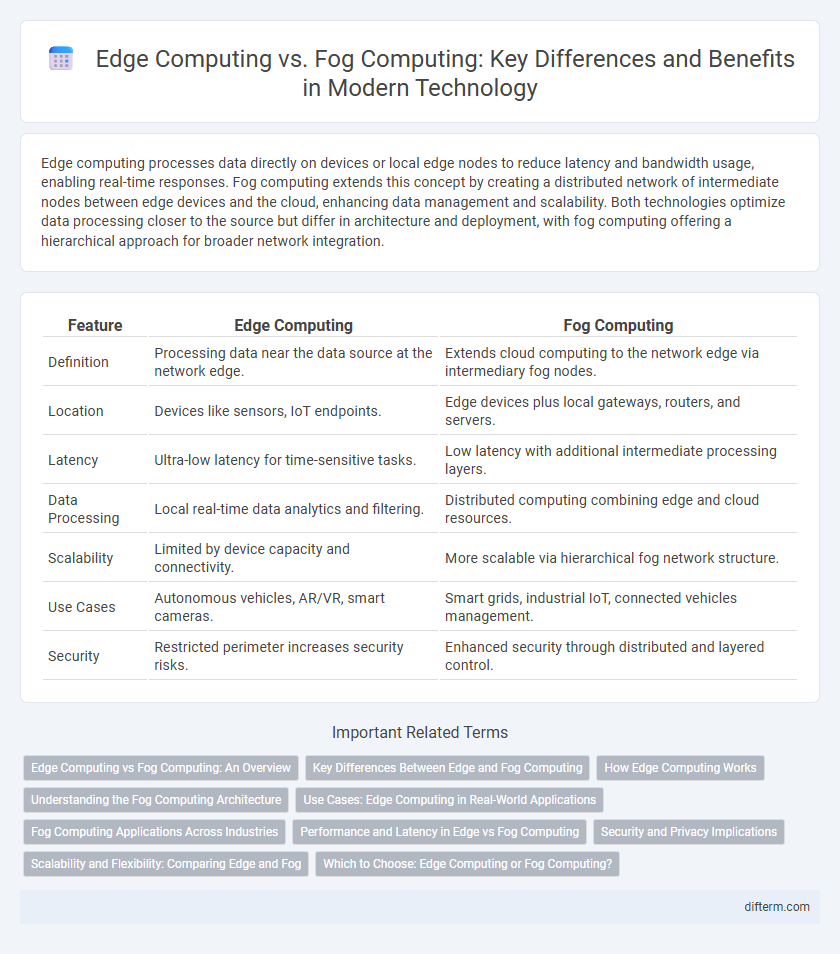

Table of Comparison

| Feature | Edge Computing | Fog Computing |

|---|---|---|

| Definition | Processing data near the data source at the network edge. | Extends cloud computing to the network edge via intermediary fog nodes. |

| Location | Devices like sensors, IoT endpoints. | Edge devices plus local gateways, routers, and servers. |

| Latency | Ultra-low latency for time-sensitive tasks. | Low latency with additional intermediate processing layers. |

| Data Processing | Local real-time data analytics and filtering. | Distributed computing combining edge and cloud resources. |

| Scalability | Limited by device capacity and connectivity. | More scalable via hierarchical fog network structure. |

| Use Cases | Autonomous vehicles, AR/VR, smart cameras. | Smart grids, industrial IoT, connected vehicles management. |

| Security | Restricted perimeter increases security risks. | Enhanced security through distributed and layered control. |

Edge Computing vs Fog Computing: An Overview

Edge computing processes data directly on or near IoT devices, reducing latency and bandwidth usage by minimizing data transmission to centralized cloud servers. Fog computing extends this concept by creating a hierarchical network of distributed nodes between the edge and cloud, enabling enhanced computing, storage, and networking services closer to end devices. Key differences include edge computing's focus on processing at the device level, while fog computing offers intermediary layers to optimize data management and real-time analytics across complex IoT environments.

Key Differences Between Edge and Fog Computing

Edge computing processes data directly on devices or local nodes near the data source, minimizing latency and bandwidth use. Fog computing extends this by creating a hierarchical network of edge devices and local data centers, enabling distributed processing and improved scalability. Key differences include deployment scope, where edge is device-centric, and fog involves multiple layers for data management and analytics.

How Edge Computing Works

Edge computing processes data near the source of generation, reducing latency by handling computation and storage tasks directly on edge devices such as sensors, smartphones, or local edge servers. This decentralized approach enables real-time data analysis by minimizing the need to transmit data to centralized cloud data centers, thereby improving response times and bandwidth efficiency. Edge devices leverage distributed computing and advanced analytics to support applications like autonomous vehicles, IoT, and industrial automation, distinguishing edge computing from fog computing, which extends cloud capabilities through intermediate nodes.

Understanding the Fog Computing Architecture

Fog computing architecture extends cloud capabilities by distributing storage, computing, and networking resources closer to data sources, enabling real-time processing and reduced latency. It consists of multiple layers, including edge devices, fog nodes, and cloud data centers, facilitating seamless data management and analytics. This hierarchical architecture supports diverse IoT applications by improving scalability, reliability, and security compared to traditional centralized cloud models.

Use Cases: Edge Computing in Real-World Applications

Edge computing powers real-time data processing in autonomous vehicles by minimizing latency and enhancing decision-making accuracy. Smart cities leverage edge infrastructure to monitor traffic, energy consumption, and public safety through localized sensor analytics. Industrial IoT systems deploy edge devices to ensure rapid fault detection and predictive maintenance, optimizing operational efficiency.

Fog Computing Applications Across Industries

Fog computing extends cloud capabilities by processing data closer to the source, enabling real-time analytics and reduced latency in industries like manufacturing, healthcare, and smart cities. It supports applications such as predictive maintenance, remote patient monitoring, and traffic management by distributing computation across decentralized nodes. This localized processing enhances data security, bandwidth efficiency, and responsiveness critical for IoT-driven environments.

Performance and Latency in Edge vs Fog Computing

Edge computing processes data closer to the data source, significantly reducing latency by minimizing the distance data travels and enabling real-time decision-making in applications such as autonomous vehicles and industrial automation. Fog computing extends this capability by distributing resources across multiple nodes between the edge and the cloud, which can introduce slightly higher latency but provides enhanced data aggregation and processing power for large-scale IoT deployments. Performance in edge computing excels in low-latency scenarios due to its proximity to devices, while fog computing balances computational load and scalability with moderate latency.

Security and Privacy Implications

Edge computing enhances security by processing data locally on devices, reducing exposure to centralized cloud vulnerabilities and minimizing data transmission risks. Fog computing extends security controls closer to the network edge through distributed nodes, enabling real-time threat detection and improved privacy management across IoT ecosystems. Both paradigms demand robust encryption, authentication, and access control mechanisms to effectively address data integrity and confidentiality challenges in decentralized architectures.

Scalability and Flexibility: Comparing Edge and Fog

Edge computing offers high scalability by processing data near the source, reducing latency and bandwidth usage, which is essential for real-time applications. Fog computing extends this by providing a hierarchical layer between the cloud and edge devices, enhancing flexibility in resource allocation and management across diverse network environments. Both architectures support scalability, but fog computing excels in coordinating complex, distributed networks with dynamic resource demands.

Which to Choose: Edge Computing or Fog Computing?

Edge computing processes data directly on devices or local nodes, reducing latency and bandwidth use for real-time applications like autonomous vehicles or smart sensors. Fog computing extends cloud capabilities closer to the network edge by using intermediary nodes for data processing, enabling better scalability and security for complex IoT ecosystems. Choosing between edge and fog computing depends on factors such as required latency, data volume, network infrastructure, and the complexity of the deployed IoT environment.

edge computing vs fog computing Infographic

difterm.com

difterm.com