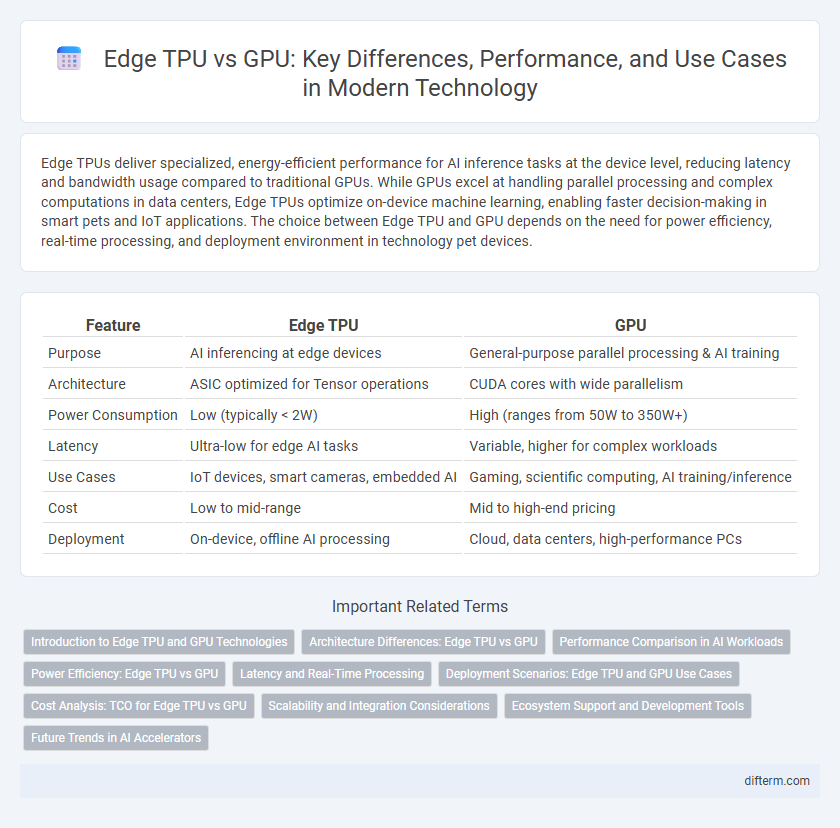

Edge TPUs deliver specialized, energy-efficient performance for AI inference tasks at the device level, reducing latency and bandwidth usage compared to traditional GPUs. While GPUs excel at handling parallel processing and complex computations in data centers, Edge TPUs optimize on-device machine learning, enabling faster decision-making in smart pets and IoT applications. The choice between Edge TPU and GPU depends on the need for power efficiency, real-time processing, and deployment environment in technology pet devices.

Table of Comparison

| Feature | Edge TPU | GPU |

|---|---|---|

| Purpose | AI inferencing at edge devices | General-purpose parallel processing & AI training |

| Architecture | ASIC optimized for Tensor operations | CUDA cores with wide parallelism |

| Power Consumption | Low (typically < 2W) | High (ranges from 50W to 350W+) |

| Latency | Ultra-low for edge AI tasks | Variable, higher for complex workloads |

| Use Cases | IoT devices, smart cameras, embedded AI | Gaming, scientific computing, AI training/inference |

| Cost | Low to mid-range | Mid to high-end pricing |

| Deployment | On-device, offline AI processing | Cloud, data centers, high-performance PCs |

Introduction to Edge TPU and GPU Technologies

Edge TPU is a purpose-built ASIC designed by Google to accelerate machine learning inference at the edge, offering low power consumption and high efficiency for real-time AI applications. GPUs, originally developed for rendering graphics, now serve as versatile parallel processors enabling high-throughput computations in deep learning training and inference. While GPUs excel in handling large-scale, complex AI workloads in data centers, Edge TPUs provide optimized performance for on-device processing in embedded and IoT devices, reducing latency and bandwidth usage.

Architecture Differences: Edge TPU vs GPU

Edge TPU architecture is specifically designed for low-power, high-efficiency neural network inference with a focus on fixed-point arithmetic and optimized matrix multiplication, enabling rapid AI processing on edge devices. GPU architecture leverages massively parallel floating-point cores to handle a wide range of computational tasks, including graphics rendering and large-scale neural network training, offering flexibility but at higher power consumption. The Edge TPU's specialized design contrasts with the GPU's general-purpose parallel processing, resulting in differing efficiencies for on-device AI workloads.

Performance Comparison in AI Workloads

Edge TPUs deliver optimized performance for low-power, real-time AI inference tasks by accelerating specific machine learning models with high efficiency and minimal latency. GPUs offer superior versatility and higher throughput across a broad range of AI workloads, including complex deep learning training, due to their massively parallel architectures. The choice between Edge TPU and GPU depends on application requirements such as power consumption, latency constraints, and the complexity of neural network models.

Power Efficiency: Edge TPU vs GPU

Edge TPUs demonstrate superior power efficiency by performing high-speed machine learning inference tasks using as little as 1 watt of power, compared to GPUs which often require tens to hundreds of watts for similar workloads. Designed specifically for edge devices, Edge TPUs optimize performance-per-watt by integrating specialized neural network accelerators. This efficiency enables deployment of AI applications in power-constrained environments, making Edge TPUs ideal for IoT devices and mobile applications where energy consumption is critical.

Latency and Real-Time Processing

Edge TPU offers significantly lower latency compared to traditional GPUs by processing AI tasks locally on edge devices, minimizing data transfer delays. This on-device computation enables real-time processing essential for applications like autonomous vehicles and IoT sensors. GPUs excel in parallel processing power but often introduce higher latency due to centralized data handling and network communication overhead.

Deployment Scenarios: Edge TPU and GPU Use Cases

Edge TPU excels in low-power, real-time inference tasks on edge devices such as IoT sensors, smart cameras, and mobile robots, enabling efficient processing without cloud dependency. GPUs are preferred for high-throughput training and complex neural network models in data centers and cloud environments, providing extensive parallel processing capabilities. Deployment scenarios often combine Edge TPU for on-device AI acceleration with GPUs handling intensive training and batch inference, optimizing resource use and latency needs.

Cost Analysis: TCO for Edge TPU vs GPU

Edge TPU devices offer significantly lower total cost of ownership (TCO) compared to GPUs due to reduced power consumption and minimal cooling requirements, making them ideal for energy-efficient inference tasks at the edge. GPUs, while providing superior raw processing power, entail higher initial capital expenditure and ongoing operational costs such as electricity and maintenance. For large-scale deployments requiring continuous, low-latency AI processing, Edge TPU's cost efficiency substantially outweighs the investment in GPU infrastructure.

Scalability and Integration Considerations

Edge TPU offers superior scalability for deploying AI models in distributed environments due to its low power consumption and compact form factor, enabling seamless integration into edge devices. GPUs, while powerful for large-scale, parallel processing tasks, often require significant infrastructure and energy resources, limiting their scalability in constrained settings. Integration considerations favor Edge TPU for real-time, on-device inference with minimal latency, whereas GPUs are preferred for complex, high-throughput workloads in centralized data centers.

Ecosystem Support and Development Tools

Edge TPU offers a specialized ecosystem optimized for AI inference on edge devices, featuring streamlined development tools like Coral's Edge TPU Compiler and TensorFlow Lite integration for efficient model deployment. GPUs, supported by mature ecosystems such as CUDA and cuDNN, provide extensive development environments compatible with a wide range of AI frameworks including PyTorch and TensorFlow, enabling versatile model training and inference. While Edge TPU excels in low-power, real-time AI applications at the edge, GPUs deliver broader support for complex model development and high-throughput processing in cloud and data center environments.

Future Trends in AI Accelerators

Edge TPUs provide specialized, low-power AI inference capabilities optimized for on-device processing, while GPUs excel at high-throughput parallel computations for deep learning training. Future trends indicate increasing integration of Edge TPUs in IoT devices for real-time AI applications, alongside the evolution of hybrid systems combining TPU and GPU architectures to optimize both training and inference workloads. Advancements in AI accelerators emphasize energy efficiency, scalability, and support for emerging AI models such as transformers and edge-based reinforcement learning.

Edge TPU vs GPU Infographic

difterm.com

difterm.com