Deep learning is a subset of machine learning that uses neural networks with multiple hidden layers, enabling models to learn complex patterns from large datasets. Neural networks are the foundational architecture: interconnected nodes loosely inspired by the structure of the human brain, which in shallower, simpler forms suit a wide variety of tasks. Deep learning extends neural networks with greater depth and more computational power, significantly improving performance in image recognition, natural language processing, and other advanced applications.

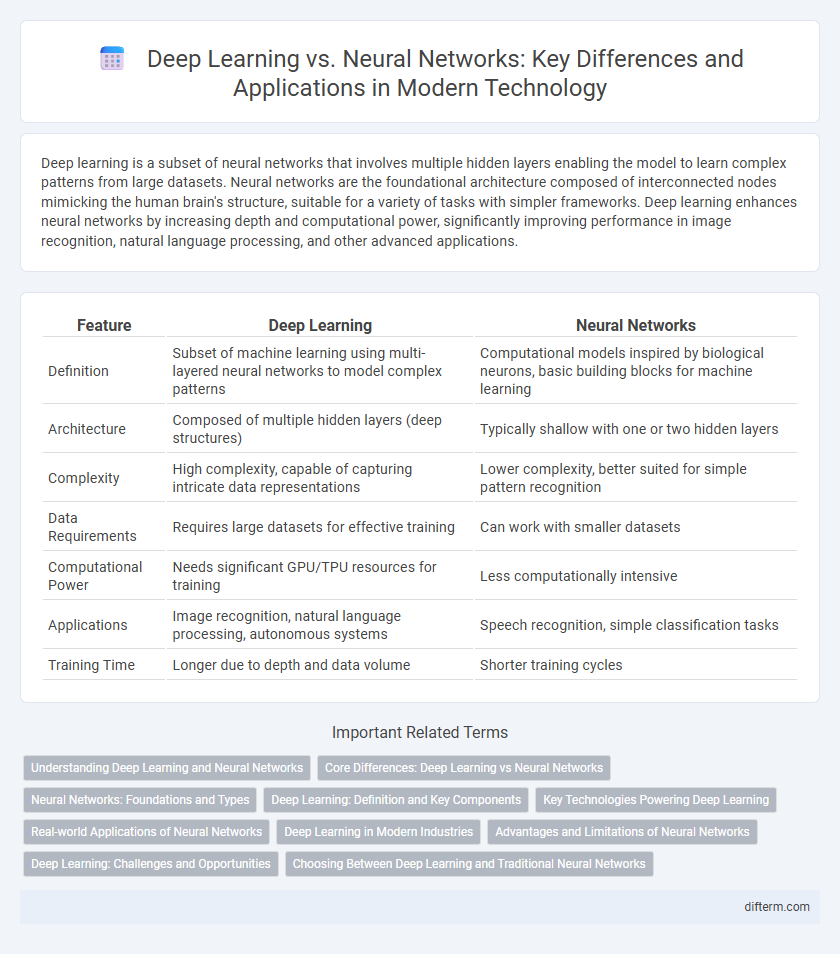

Table of Comparison

| Feature | Deep Learning | Neural Networks |
|---|---|---|
| Definition | Subset of machine learning that uses multi-layered neural networks to model complex patterns | Computational models loosely inspired by biological neurons; a foundational building block of machine learning |
| Architecture | Multiple hidden layers (deep structures) | Typically shallow, with one or two hidden layers |
| Complexity | High; capable of capturing intricate data representations | Lower; better suited to simple pattern recognition |
| Data Requirements | Requires large datasets for effective training | Can work with smaller datasets |
| Computational Power | Needs significant GPU/TPU resources for training | Less computationally intensive |
| Applications | Image recognition, natural language processing, autonomous systems | Simple classification and regression tasks, early speech recognition systems |
| Training Time | Longer, due to depth and data volume | Shorter training cycles |

Understanding Deep Learning and Neural Networks

Deep learning is a subset of machine learning that uses neural networks with multiple layers to model complex patterns in large datasets. Neural networks consist of interconnected nodes (neurons), loosely inspired by biological neurons, that learn useful features and representations directly from data. Training involves two passes: forward propagation computes a prediction layer by layer, and backward propagation (backpropagation) computes how much each weight contributed to the error so the weights can be adjusted, gradually improving accuracy in tasks like image recognition and natural language processing.
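The forward and backward passes can be sketched for a tiny network in plain Python. This is a minimal illustration, assuming one hidden layer of two sigmoid units, a single sigmoid output, a squared-error loss, and hand-picked example weights (all hypothetical). Backpropagation applies the chain rule from the loss back to each weight; the finite-difference check at the end compares the analytic gradients against a numerical estimate.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(x, params):
    """Forward propagation: inputs -> hidden layer -> output, layer by layer."""
    W1, b1, w2, b2 = params
    # Hidden layer: weighted sum of inputs plus bias, then sigmoid activation.
    h = [sigmoid(sum(W1[j][i] * x[i] for i in range(len(x))) + b1[j])
         for j in range(len(b1))]
    # Output unit: weighted sum of hidden activations plus bias, then sigmoid.
    y_hat = sigmoid(sum(w2[j] * h[j] for j in range(len(h))) + b2)
    return h, y_hat


def loss(y_hat, y):
    """Squared-error loss for a single example."""
    return 0.5 * (y_hat - y) ** 2


def backward(x, y, params):
    """Backward propagation: chain rule from the loss back to every weight."""
    W1, b1, w2, b2 = params
    h, y_hat = forward(x, params)
    # Error signal at the output pre-activation; sigmoid'(z) = s * (1 - s).
    d_out = (y_hat - y) * y_hat * (1 - y_hat)
    grad_w2 = [d_out * h_j for h_j in h]
    grad_b2 = d_out
    # Send the error back through w2 and each hidden unit's sigmoid.
    d_hidden = [d_out * w2[j] * h[j] * (1 - h[j]) for j in range(len(h))]
    grad_W1 = [[d_hidden[j] * x_i for x_i in x] for j in range(len(h))]
    grad_b1 = d_hidden
    return grad_W1, grad_b1, grad_w2, grad_b2


def numeric_grad_W1(x, y, params, j, i, eps=1e-6):
    """Central finite-difference estimate of dLoss/dW1[j][i]."""
    W1, b1, w2, b2 = params

    def loss_at(value):
        W = [row[:] for row in W1]
        W[j][i] = value
        return loss(forward(x, (W, b1, w2, b2))[1], y)

    return (loss_at(W1[j][i] + eps) - loss_at(W1[j][i] - eps)) / (2 * eps)


# Hypothetical single training example and starting weights.
x, y = [0.5, -1.0], 1.0
params = ([[0.1, -0.2], [0.4, 0.3]], [0.0, 0.1], [0.7, -0.5], 0.2)
grad_W1 = backward(x, y, params)[0]
max_err = max(abs(grad_W1[j][i] - numeric_grad_W1(x, y, params, j, i))
              for j in range(2) for i in range(2))
print(f"largest gradient mismatch: {max_err:.2e}")
```

A deep network repeats the hidden-layer step of `backward` once per layer, passing the error signal further back each time.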

Core Differences: Deep Learning vs Neural Networks

Deep learning refers to neural networks with multiple hidden layers, which enable automatic feature extraction and complex pattern recognition. In comparisons like this one, "neural networks" usually means traditional, shallower architectures with one or two hidden layers, typically applied to basic tasks like classification or regression. The core difference is hierarchical learning: each additional layer builds more abstract features from the output of the layer before it (for images, edges, then shapes, then objects), which lets deep models scale to large datasets with higher accuracy than traditional neural networks.
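One concrete consequence of adding hidden layers is growth in the number of weights that must be learned and stored. The sketch below counts the parameters (weights plus biases) of fully connected networks; the layer widths are hypothetical, chosen to resemble a classifier for 28×28-pixel images with 10 classes. Depth is not the only factor, since width matters too: a deep but narrow network can have fewer parameters than a wide shallow one.

```python
def count_parameters(layer_sizes):
    """Weights plus biases of a fully connected network with the given layer widths."""
    total = 0
    for fan_in, fan_out in zip(layer_sizes, layer_sizes[1:]):
        total += fan_in * fan_out + fan_out  # weight matrix + one bias per output unit
    return total


def hidden_layers(layer_sizes):
    """Every layer except the input and output layers is a hidden layer."""
    return len(layer_sizes) - 2


shallow = [784, 32, 10]          # hypothetical: one hidden layer
deep = [784, 128, 64, 32, 10]    # hypothetical: three hidden layers

for name, sizes in [("shallow", shallow), ("deep", deep)]:
    print(name, hidden_layers(sizes), count_parameters(sizes))
# shallow 1 25450
# deep 3 111146
```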

Neural Networks: Foundations and Types

Neural networks are computational models inspired by the human brain's structure, consisting of interconnected nodes called neurons that process data through layers. Foundational types include feedforward neural networks, which pass data in one direction from input to output, and recurrent neural networks (RNNs), which handle sequence data through feedback loops that carry a hidden state from one step to the next. Convolutional neural networks (CNNs), another key type, slide small learned filters across images and other grid-structured data, making them essential in computer vision tasks.
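The difference between a feedforward unit and a recurrent one can be shown with scalar weights. In this minimal sketch, the weights `w`, `w_x`, `w_h` and bias `b` are hypothetical values, and real RNNs use weight matrices and vectors instead of scalars. The feedforward unit maps each input independently, while the recurrent unit feeds its previous hidden state back into the next step, so an early input still influences later outputs.

```python
import math


def feedforward(xs, w=0.8, b=0.0):
    """Feedforward unit: each input is transformed independently; nothing is remembered."""
    return [math.tanh(w * x + b) for x in xs]


def rnn(xs, w_x=0.8, w_h=0.5, b=0.0):
    """Minimal recurrent unit: the hidden state loops back into the next step."""
    h = 0.0
    states = []
    for x in xs:
        # h_t depends on the current input x_t and the previous state h_{t-1}.
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states


seq = [1.0, 0.0, 0.0]
print(feedforward(seq))  # the trailing zeros map to 0.0: the first input is forgotten
print(rnn(seq))          # the trailing states stay non-zero: the first input persists
print(rnn([0.0, 0.0, 1.0])[-1] != rnn(seq)[-1])  # True: order matters to the RNN
```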

Deep Learning: Definition and Key Components

Deep learning is a subset of machine learning that uses neural networks with multiple layers, known as deep neural networks, to model complex patterns in large datasets. Its key components are artificial neurons, layers (input, hidden, and output), activation functions, a loss function that measures prediction error, and optimization algorithms such as gradient descent that adjust the weights to reduce that loss. Together these enable automatic feature extraction and hierarchical representation learning, which underpin applications like image recognition, natural language processing, and autonomous systems.
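These components fit together in a short training loop. This is a minimal sketch, assuming a single artificial neuron with a sigmoid activation, a binary cross-entropy loss, and full-batch gradient descent on a hypothetical toy dataset (logical AND). A deep network stacks many such neurons into layers and obtains its gradients with backpropagation rather than the one-line formula used here.

```python
import math


def sigmoid(z):
    """Activation function squashing a weighted sum into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


# Toy dataset (hypothetical): the logical AND of two binary inputs.
X = [(0, 0), (0, 1), (1, 0), (1, 1)]
Y = [0, 0, 0, 1]


def predict(w, b, x):
    """One artificial neuron: weighted sum of inputs plus bias, then activation."""
    return sigmoid(w[0] * x[0] + w[1] * x[1] + b)


def mean_loss(w, b):
    """Mean binary cross-entropy between predictions and targets."""
    total = 0.0
    for x, y in zip(X, Y):
        p = predict(w, b, x)
        total -= y * math.log(p) + (1 - y) * math.log(1 - p)
    return total / len(X)


def train(lr=1.0, epochs=2000):
    """Full-batch gradient descent on the weights and bias."""
    w, b = [0.0, 0.0], 0.0
    n = len(X)
    for _ in range(epochs):
        gw, gb = [0.0, 0.0], 0.0
        for x, y in zip(X, Y):
            # For sigmoid + cross-entropy, dLoss/dz simplifies to (p - y).
            err = predict(w, b, x) - y
            gw[0] += err * x[0]
            gw[1] += err * x[1]
            gb += err
        # Step each parameter against its averaged gradient.
        w = [w[0] - lr * gw[0] / n, w[1] - lr * gw[1] / n]
        b -= lr * gb / n
    return w, b


w, b = train()
print(f"loss before: {mean_loss([0.0, 0.0], 0.0):.3f}, after: {mean_loss(w, b):.3f}")
print([round(predict(w, b, x)) for x in X])  # [0, 0, 0, 1]
```

The learning rate `lr` and epoch count are illustrative choices; too large a learning rate can make gradient descent overshoot instead of converging.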

Key Technologies Powering Deep Learning

Deep learning is built on deep neural networks (DNNs), whose multiple hidden layers extract features hierarchically from large datasets. Key architectures include convolutional neural networks (CNNs) for image processing, recurrent neural networks (RNNs) for sequence data, and transformers for natural language understanding; all are trained with backpropagation and gradient-descent-based optimizers. Hardware accelerators such as GPUs and TPUs speed up the large matrix computations these models rely on, improving training efficiency and making larger models practical.
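The core operation of a CNN layer can be sketched in plain Python. Deep learning libraries typically implement cross-correlation (the kernel is not flipped) under the name convolution, and this sketch does the same, with no padding and a stride of 1. The 1×2 kernel `[[-1, 1]]` is a hypothetical hand-made vertical-edge detector; in a trained CNN, kernel values are learned by backpropagation.

```python
def conv2d(image, kernel):
    """'Valid' 2D cross-correlation (stride 1, no padding), as computed by CNN layers."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            # Dot product between the kernel and the image patch beneath it.
            row.append(sum(kernel[i][j] * image[r + i][c + j]
                           for i in range(kh) for j in range(kw)))
        out.append(row)
    return out


# Hypothetical 4x4 image: dark left half (0), bright right half (1).
image = [[0, 0, 1, 1] for _ in range(4)]
edge_kernel = [[-1, 1]]  # responds where brightness increases left to right

for row in conv2d(image, edge_kernel):
    print(row)  # [0, 1, 0] on every row: the edge sits between columns 1 and 2
```

The output is non-zero only where dark pixels meet bright ones, the kind of low-level feature that early CNN layers commonly pick up before deeper layers combine them into shapes.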

Real-world Applications of Neural Networks

Neural networks power many real-world applications, including image and speech recognition, natural language processing, and autonomous driving, because they can model complex patterns and relationships in data. Deep learning, which uses networks with many hidden layers, improves accuracy in these applications and enables advanced capabilities such as real-time translation and medical diagnosis. Industries such as healthcare, finance, and automotive use these technologies to automate tasks, detect anomalies, and support decision-making.

Deep Learning in Modern Industries

Deep learning powers advanced applications across modern industries by enabling machines to learn from vast datasets with little manual feature engineering. Its ability to extract complex features automatically has transformed sectors such as healthcare, finance, and autonomous driving. Because deep learning models scale well with data and can achieve high accuracy, they are widely used for real-time decision-making and predictive analytics.

Advantages and Limitations of Neural Networks

Neural networks excel at modeling complex, non-linear relationships, which makes them effective for tasks like image recognition and natural language processing. Their ability to learn from large amounts of data improves accuracy and lets them adapt to diverse applications. However, larger networks in particular often require significant computational resources and large labeled datasets, which can limit their use in environments with constrained hardware or scarce data.
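XOR is the classic example of a non-linear relationship: no single straight line separates the inputs that should output 1 from those that should output 0. The sketch below uses step activations and hand-chosen weights rather than trained ones (both assumptions for illustration). It first searches a grid of weights for a single threshold unit that computes XOR and finds none, then shows that one hidden layer of two units solves it. The grid search only checks finitely many weights; the general impossibility follows from XOR not being linearly separable.

```python
from itertools import product


def step(z):
    return 1 if z > 0 else 0


INPUTS = [(0, 0), (0, 1), (1, 0), (1, 1)]
XOR = [0, 1, 1, 0]


def single_unit(w1, w2, b, x1, x2):
    # One linear threshold unit: it can only draw one straight decision boundary.
    return step(w1 * x1 + w2 * x2 + b)


# Exhaustive search over a grid of weights for one unit that reproduces XOR.
grid = [v / 2 for v in range(-6, 7)]  # -3.0 to 3.0 in steps of 0.5
single_solutions = [
    (w1, w2, b) for w1, w2, b in product(grid, repeat=3)
    if [single_unit(w1, w2, b, *x) for x in INPUTS] == XOR
]


def two_layer(x1, x2):
    # Hidden layer (hand-chosen weights): h1 fires for OR, h2 fires for AND.
    h1 = step(x1 + x2 - 0.5)
    h2 = step(x1 + x2 - 1.5)
    # Output unit: "OR but not AND" is exactly XOR.
    return step(h1 - h2 - 0.5)


print(len(single_solutions))            # 0
print([two_layer(*x) for x in INPUTS])  # [0, 1, 1, 0]
```

The hidden layer re-represents the inputs so that the output unit's single straight boundary becomes sufficient, which is the same mechanism deeper networks apply repeatedly.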

Deep Learning: Challenges and Opportunities

Deep learning faces significant challenges, including the need for large labeled datasets, high computational costs, and a risk of overfitting in complex models. Despite these obstacles, it offers opportunities to advance artificial intelligence through improved image recognition, natural language processing, and autonomous systems. Advances in hardware acceleration, transfer learning (reusing a model pretrained on one task as the starting point for another), and unsupervised techniques are improving scalability and efficiency.

Choosing Between Deep Learning and Traditional Neural Networks

Choosing between deep learning and traditional neural networks depends on the complexity of the task and the data available. Deep learning models, with their multiple hidden layers, excel at handling large-scale datasets and extracting high-level features from unstructured data such as images and speech. Traditional neural networks, with fewer layers and simpler architectures, are better suited to smaller datasets and to tasks where faster training and lower computational cost matter.

Deep Learning vs Neural Networks Infographic

difterm.com