Tensor Processing Units (TPUs) are specialized hardware accelerators designed specifically for machine learning tasks, delivering superior performance and efficiency in neural network computations compared to Graphics Processing Units (GPUs). While GPUs excel in parallel processing for a wide range of applications including graphics rendering and general-purpose computing, TPUs optimize matrix operations and low-precision arithmetic crucial for deep learning models. Choosing between TPUs and GPUs depends on the specific workload requirements, with TPUs providing faster training and inference speeds for large-scale AI deployments.

Table of Comparison

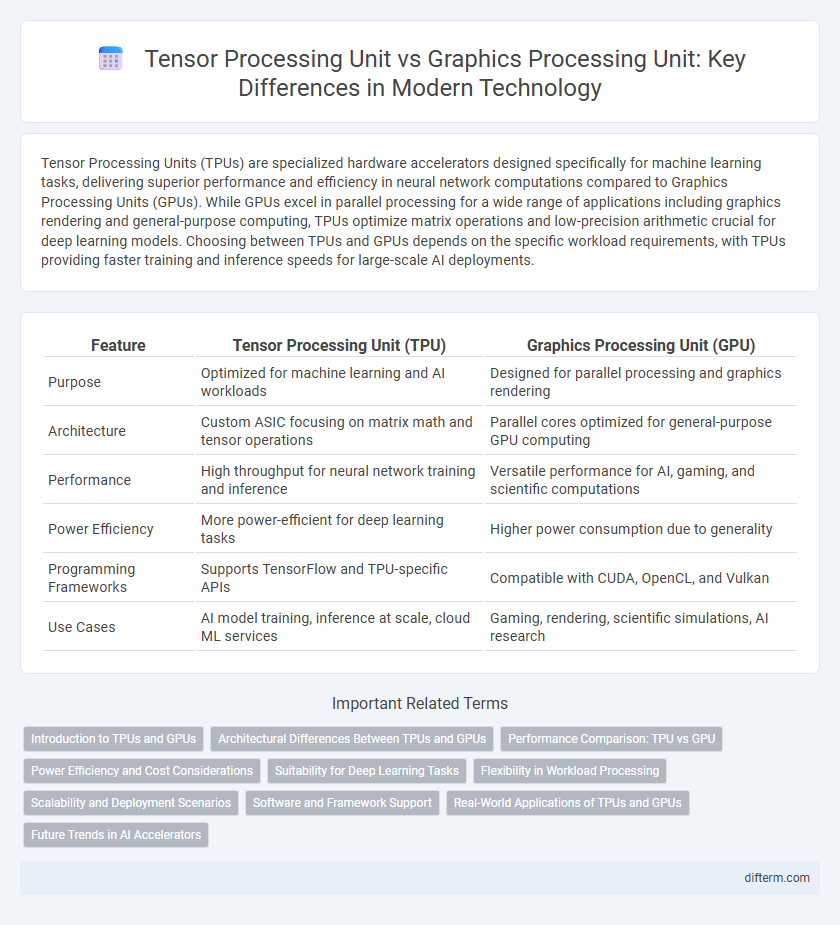

| Feature | Tensor Processing Unit (TPU) | Graphics Processing Unit (GPU) |

|---|---|---|

| Purpose | Optimized for machine learning and AI workloads | Designed for parallel processing and graphics rendering |

| Architecture | Custom ASIC focusing on matrix math and tensor operations | Parallel cores optimized for general-purpose GPU computing |

| Performance | High throughput for neural network training and inference | Versatile performance for AI, gaming, and scientific computations |

| Power Efficiency | More power-efficient for deep learning tasks | Higher power consumption due to generality |

| Programming Frameworks | Supports TensorFlow and TPU-specific APIs | Compatible with CUDA, OpenCL, and Vulkan |

| Use Cases | AI model training, inference at scale, cloud ML services | Gaming, rendering, scientific simulations, AI research |

Introduction to TPUs and GPUs

Tensor Processing Units (TPUs) are specialized hardware accelerators designed by Google specifically for accelerating machine learning workloads, particularly deep learning tasks involving tensor computations. Graphics Processing Units (GPUs), originally created for rendering graphics, have evolved into versatile parallel processors widely used for training and inference in neural networks due to their high throughput and extensive CUDA programming ecosystem. TPUs offer optimized matrix operations and energy efficiency for large-scale AI models, while GPUs provide flexible general-purpose parallel processing capabilities across diverse computing applications.

Architectural Differences Between TPUs and GPUs

Tensor Processing Units (TPUs) are specialized hardware accelerators designed specifically for machine learning workloads, featuring a systolic array architecture optimized for high-throughput matrix multiplications essential in neural network training and inference. Graphics Processing Units (GPUs) use a massively parallel architecture with thousands of smaller cores optimized for a wide range of tasks, including graphics rendering and general-purpose computation, offering flexibility but less specialized efficiency for tensor operations. TPUs incorporate on-chip memory and streamlined data paths to minimize latency and maximize throughput for deep learning tasks, whereas GPUs rely on shared memory hierarchies and more complex control logic to handle diverse computational workloads.

Performance Comparison: TPU vs GPU

Tensor Processing Units (TPUs) outperform Graphics Processing Units (GPUs) in specialized machine learning tasks due to their architecture optimized for high-throughput matrix operations and low-precision arithmetic. GPUs offer broader versatility and excel in parallel processing for graphics rendering and general-purpose AI workloads, but TPUs deliver superior efficiency and speed in training and inference of deep neural networks. Benchmark tests demonstrate TPUs achieving up to 15x faster execution in large-scale AI models compared to the latest GPU implementations.

Power Efficiency and Cost Considerations

Tensor Processing Units (TPUs) offer superior power efficiency compared to Graphics Processing Units (GPUs) by optimizing matrix operations for AI workloads, resulting in lower energy consumption per inference. Cost considerations heavily favor TPUs in large-scale machine learning deployments due to their specialized architecture that reduces operational expenses and total cost of ownership (TCO). While GPUs provide versatility across diverse applications, TPUs deliver optimal power-to-performance ratios, making them economically advantageous for targeted AI acceleration.

Suitability for Deep Learning Tasks

Tensor Processing Units (TPUs) are specifically designed for accelerating deep learning workloads, offering optimized matrix multiplication and high throughput for training and inference of neural networks. Graphics Processing Units (GPUs) provide versatility across various parallel computing tasks and are widely used for deep learning due to their ability to handle large-scale matrix operations efficiently. TPUs outperform GPUs in large-scale machine learning models with their architecture tailored for AI workloads, while GPUs maintain an edge in flexibility and broad software support.

Flexibility in Workload Processing

Tensor Processing Units (TPUs) excel in accelerating specific machine learning tasks due to their highly optimized architecture for deep learning operations. Graphics Processing Units (GPUs) offer greater flexibility in workload processing, efficiently handling a diverse range of parallel computing tasks beyond neural networks, including graphics rendering and scientific simulations. The adaptability of GPUs makes them suitable for various computing environments, while TPUs deliver superior performance in specialized AI model training and inference.

Scalability and Deployment Scenarios

Tensor Processing Units (TPUs) excel in scalability for large-scale machine learning workloads due to their architecture optimized for high-throughput matrix operations, enabling efficient deployment in cloud environments and data centers. Graphics Processing Units (GPUs) offer more flexibility across diverse deployment scenarios, including edge devices and on-premises servers, but may face scalability limits in distributed training compared to TPUs. Enterprises leverage TPUs for training massive neural networks at scale, while GPUs remain preferred for heterogeneous AI workloads requiring broader compatibility and development ecosystem support.

Software and Framework Support

Tensor Processing Units (TPUs) are specifically optimized for deep learning workloads and have seamless integration with TensorFlow, providing efficient acceleration for neural network training and inference. Graphics Processing Units (GPUs) support a broader range of machine learning frameworks such as PyTorch, Caffe, and MXNet, offering greater flexibility for diverse AI applications. Software ecosystems for GPUs are more mature and widely adopted, making them suitable for both research and production environments, while TPUs excel in scenarios tightly coupled with Google Cloud services.

Real-World Applications of TPUs and GPUs

Tensor Processing Units (TPUs) excel in accelerating machine learning tasks, particularly in deep learning applications like natural language processing, image recognition, and recommendation systems deployed by tech giants such as Google. Graphics Processing Units (GPUs) remain essential for rendering graphics in gaming, video editing, and parallel computing tasks, while also supporting AI workloads with broader compatibility across frameworks like TensorFlow and PyTorch. Real-world deployment of TPUs often targets data centers optimizing neural network inference and training, whereas GPUs find extensive use in both consumer devices and high-performance computing environments.

Future Trends in AI Accelerators

Tensor Processing Units (TPUs) are expected to dominate future AI accelerators due to their superior efficiency in handling large-scale machine learning models and lower power consumption compared to Graphics Processing Units (GPUs). Advances in TPU architectures aim to accelerate training and inference for deep neural networks, pushing AI capabilities in edge computing and data centers. Meanwhile, GPUs continue evolving with enhanced parallelism and versatility, maintaining relevance in broader AI workloads and graphics rendering applications.

tensor processing unit vs graphics processing unit Infographic

difterm.com

difterm.com