Asynchronous I/O allows programs to initiate input/output operations without waiting for them to complete, improving efficiency by letting other tasks run concurrently. Synchronous I/O makes the calling thread wait until each operation finishes, which can cause delays and reduce throughput. In software applications, choosing asynchronous I/O can improve responsiveness and user experience by minimizing blocking and latency.

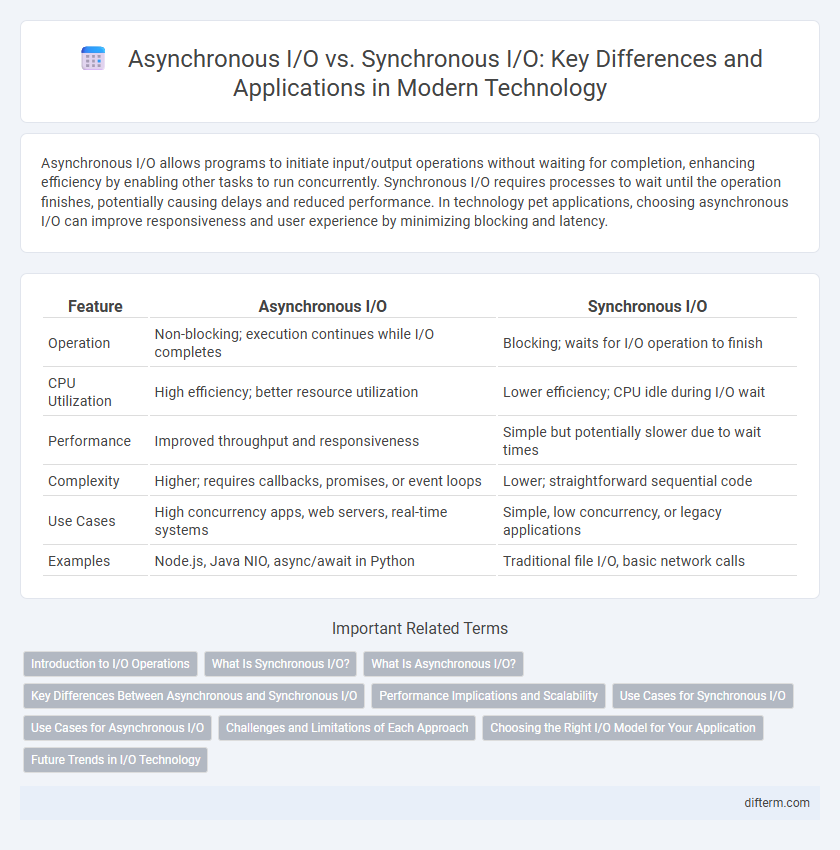

Table of Comparison

| Feature | Asynchronous I/O | Synchronous I/O |
|---|---|---|
| Operation | Non-blocking; execution continues while I/O completes | Blocking; the calling thread waits for the I/O operation to finish |
| CPU Utilization | Higher; the thread can do other work while I/O is pending | Lower; the calling thread sits idle during the I/O wait |
| Performance | Improved throughput and responsiveness | Simpler, but potentially slower due to wait times |
| Complexity | Higher; requires callbacks, promises, or event loops | Lower; straightforward sequential code |
| Use Cases | High-concurrency apps, web servers, real-time systems | Simple, low-concurrency, or legacy applications |
| Examples | Node.js, Java NIO, async/await in Python | Traditional file I/O, basic network calls |

Introduction to I/O Operations

I/O operations involve the transfer of data between a computer's central processing unit (CPU) and peripheral devices such as disks, networks, or user interfaces. Synchronous I/O makes the calling thread wait for the operation to complete before continuing, which can leave that thread idle for long periods. Asynchronous I/O lets a program initiate an operation and proceed without waiting, improving resource utilization and responsiveness by handling multiple I/O requests concurrently.

What Is Synchronous I/O?

Synchronous I/O is a method where input/output operations block the executing thread until they complete, so the thread cannot proceed until the data transfer finishes. This approach simplifies programming by ensuring tasks complete sequentially, but it can be inefficient in high-latency environments or when many I/O requests must be handled concurrently. Systems relying on synchronous I/O often achieve lower throughput than asynchronous models, which allow other processing to continue during I/O operations.
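As a minimal sketch of the blocking pattern, the Python snippet below uses `time.sleep` as a stand-in for a slow device; the function `blocking_read` and the delay values are hypothetical. Because each call blocks, the total time is roughly the sum of the individual waits.

```python
import time

def blocking_read(name: str, delay: float) -> str:
    """Simulate a blocking I/O call (hypothetical helper)."""
    time.sleep(delay)  # stands in for waiting on a disk or network device
    return f"data from {name}"

requests = [("disk", 0.2), ("network", 0.3), ("database", 0.1)]

start = time.perf_counter()
results = []
for name, delay in requests:
    # Each call blocks: the next request cannot start until this one returns.
    results.append(blocking_read(name, delay))
elapsed = time.perf_counter() - start

print(results)
print(f"elapsed: {elapsed:.2f}s")  # roughly the sum of the delays
```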

What Is Asynchronous I/O?

Asynchronous I/O allows a program to initiate an input/output operation and continue executing other tasks without waiting for the operation to complete, improving overall system efficiency. It uses non-blocking system calls and event-driven programming to handle multiple I/O operations concurrently, reducing latency and enhancing responsiveness. This approach is widely used in high-performance servers, real-time applications, and systems requiring scalable multitasking.
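The non-blocking, event-driven mechanics can be sketched with Python's standard `selectors` module. This is an illustration under simplifying assumptions: a local `socket.socketpair()` stands in for a real network connection, and the loop dispatches to a single hypothetical callback, `on_readable`.

```python
import selectors
import socket

sel = selectors.DefaultSelector()

# A connected pair of sockets stands in for a network connection.
reader, writer = socket.socketpair()
reader.setblocking(False)  # recv() now raises instead of waiting

received = []

def on_readable(sock: socket.socket) -> None:
    """Callback run by the event loop when the socket has data to read."""
    received.append(sock.recv(1024))

# Register interest in read readiness; the callback is stored as the key's data.
sel.register(reader, selectors.EVENT_READ, on_readable)

# With no data available, a non-blocking recv() returns control immediately.
try:
    reader.recv(1024)
except BlockingIOError:
    print("no data yet; the program is free to do other work")

writer.sendall(b"hello")

# Minimal event loop: wait for readiness, then dispatch to the callback.
while not received:
    for key, _mask in sel.select(timeout=1.0):
        callback = key.data
        callback(key.fileobj)

print(received)

sel.unregister(reader)
sel.close()
reader.close()
writer.close()
```

Production event loops (such as the one inside `asyncio`) follow the same register, wait, dispatch cycle, but manage many sockets and timers at once.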

Key Differences Between Asynchronous and Synchronous I/O

Asynchronous I/O allows operations to proceed without waiting for completion, enhancing system responsiveness and resource utilization by enabling other tasks to run concurrently. Synchronous I/O, in contrast, requires the process to wait until the current I/O operation finishes, potentially causing blocking and reduced efficiency in high-latency environments. Key differences include non-blocking behavior in asynchronous I/O versus blocking in synchronous I/O, event-driven notifications versus direct return, and better scalability in asynchronous models for I/O-intensive applications.
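The difference between a direct return and an event-driven notification can be illustrated with `asyncio`. In this sketch, the functions `sync_fetch`, `async_fetch`, and `on_done` are hypothetical and `asyncio.sleep` simulates I/O latency: the synchronous result is simply returned, while the asynchronous result is delivered to a callback registered with `Task.add_done_callback`.

```python
import asyncio

def sync_fetch() -> str:
    # Synchronous style: the caller receives the result as the return value.
    return "payload"

async def async_fetch() -> str:
    await asyncio.sleep(0.05)  # simulated I/O latency
    return "payload"

notifications = []

def on_done(task: asyncio.Task) -> None:
    # Asynchronous style: the event loop notifies us when the operation finishes.
    notifications.append(task.result())

async def main() -> None:
    task = asyncio.create_task(async_fetch())
    task.add_done_callback(on_done)
    # The caller keeps running; the task has not even started yet.
    print("task finished yet?", task.done())
    await task
    await asyncio.sleep(0)  # give the loop a turn to run any scheduled callbacks

direct = sync_fetch()
asyncio.run(main())
print(direct, notifications)
```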

Performance Implications and Scalability

Asynchronous I/O improves performance by allowing multiple operations to proceed without waiting for each to complete, reducing idle time and increasing throughput in high-concurrency environments. Synchronous I/O blocks the calling thread until each operation completes, which can create bottlenecks and limit scalability under heavy workloads, especially in network- or disk-intensive applications. Systems built on asynchronous I/O can handle more concurrent tasks efficiently, which makes them well suited to scalable cloud services and real-time data processing platforms.
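To make the throughput claim concrete, this sketch (Python 3.9+) starts 100 simulated requests with `asyncio.gather`, each waiting 0.1 s via `asyncio.sleep`. Run sequentially, the waits would add up to about 10 s; here they overlap, so the total should be close to a single delay plus scheduling overhead. The simulated delays and the helper `simulated_request` are assumptions; real network or disk latency will vary.

```python
import asyncio
import time

async def simulated_request(i: int, delay: float = 0.1) -> int:
    # Non-blocking wait: the event loop serves other tasks in the meantime.
    await asyncio.sleep(delay)
    return i

async def main(n: int) -> list[int]:
    # Start all n operations at once, then wait for every one to finish.
    return await asyncio.gather(*(simulated_request(i) for i in range(n)))

start = time.perf_counter()
results = asyncio.run(main(100))
elapsed = time.perf_counter() - start

print(len(results), f"{elapsed:.2f}s")
```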

Use Cases for Synchronous I/O

Synchronous I/O is well suited to applications that need a simple, predictable execution flow, such as command-line tools and batch processing systems where operations complete sequentially. It also fits scenarios with low concurrency demands, like embedded systems or single-user applications, where it avoids the complexity of callbacks and event loops. Some real-time systems favor synchronous I/O as well, because its sequential, blocking behavior makes timing and resource use easier to reason about.
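A batch job that processes files one after another is a typical fit for plain blocking I/O. The sketch below creates a few small, hypothetical input files in a temporary directory and counts their lines in a fixed, predictable order; `count_lines` is an illustrative helper, not part of any library.

```python
import os
import tempfile

def count_lines(path: str) -> int:
    # Plain blocking file I/O: each read returns only when the data is available.
    with open(path, encoding="utf-8") as f:
        return sum(1 for _ in f)

with tempfile.TemporaryDirectory() as tmpdir:
    # Create hypothetical input files for the batch job.
    paths = []
    for i, n in enumerate([3, 5, 2]):
        path = os.path.join(tmpdir, f"input_{i}.txt")
        with open(path, "w", encoding="utf-8") as f:
            f.write("line\n" * n)
        paths.append(path)

    # Batch step: process files strictly one after another.
    counts = {os.path.basename(p): count_lines(p) for p in paths}

print(counts)  # {'input_0.txt': 3, 'input_1.txt': 5, 'input_2.txt': 2}
```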

Use Cases for Asynchronous I/O

Asynchronous I/O is ideal for high-performance web servers, real-time gaming applications, and data streaming services where low latency and non-blocking operations are critical. It enables efficient resource utilization by allowing multiple I/O operations to proceed simultaneously without waiting for each to complete. This model excels in scenarios involving network communications, database queries, and file system access that require high concurrency and scalability.
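The web-server use case can be sketched with `asyncio` streams (Python 3.9+): one thread serves several client connections concurrently, because each handler yields to the event loop while it awaits I/O. The handler here simply uppercases a line of text; the names `handle_client` and `client` and the toy protocol are assumptions for illustration, not a production server.

```python
import asyncio

async def handle_client(reader: asyncio.StreamReader, writer: asyncio.StreamWriter) -> None:
    # Each connection runs as its own coroutine; awaiting I/O yields to other clients.
    data = await reader.readline()
    writer.write(data.upper())
    await writer.drain()
    writer.close()
    await writer.wait_closed()

async def client(port: int, message: str) -> str:
    reader, writer = await asyncio.open_connection("127.0.0.1", port)
    writer.write(message.encode() + b"\n")
    await writer.drain()
    reply = await reader.readline()
    writer.close()
    await writer.wait_closed()
    return reply.decode().strip()

async def main() -> list[str]:
    # Port 0 asks the operating system for any free port.
    server = await asyncio.start_server(handle_client, "127.0.0.1", 0)
    port = server.sockets[0].getsockname()[1]
    async with server:
        # Several clients are served concurrently by a single thread.
        return await asyncio.gather(*(client(port, f"hello {i}") for i in range(5)))

replies = asyncio.run(main())
print(replies)  # ['HELLO 0', 'HELLO 1', 'HELLO 2', 'HELLO 3', 'HELLO 4']
```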

Challenges and Limitations of Each Approach

Synchronous I/O operations often lead to thread blocking, causing inefficiency and reduced responsiveness in high-concurrency environments. Asynchronous I/O mitigates blocking through non-blocking calls, but it complicates error handling and callback management and makes debugging harder. Both approaches face resource-management challenges: synchronous I/O struggles under heavy load, while asynchronous I/O requires careful concurrency control to avoid race conditions and deadlocks.
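Two of these challenges, error handling and race conditions, can be illustrated in `asyncio`. In this sketch, `fetch` and `increment` are hypothetical: one simulated request fails, and `return_exceptions=True` collects the failure as a value instead of raising it to the caller; an `asyncio.Lock` protects a read-modify-write that spans an `await`, where another task could otherwise interleave and an update would be lost.

```python
import asyncio

counter = 0

async def fetch(i: int) -> int:
    await asyncio.sleep(0.01)  # simulated I/O
    if i == 2:
        raise ConnectionError(f"request {i} failed")  # simulated I/O failure
    return i

async def increment(lock: asyncio.Lock) -> None:
    global counter
    # Without the lock, another task could run at the await point between
    # reading and writing counter, and that task's update would be overwritten.
    async with lock:
        current = counter
        await asyncio.sleep(0)  # a suspension point inside the read-modify-write
        counter = current + 1

async def main() -> list:
    # Failures come back as exception objects in the result list.
    results = await asyncio.gather(*(fetch(i) for i in range(4)), return_exceptions=True)
    lock = asyncio.Lock()
    await asyncio.gather(*(increment(lock) for _ in range(100)))
    return results

results = asyncio.run(main())
errors = [r for r in results if isinstance(r, Exception)]
print(results, len(errors), counter)
```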

Choosing the Right I/O Model for Your Application

Choosing the right I/O model depends on your application's performance requirements and resource constraints. Asynchronous I/O enables non-blocking operations, improving scalability and responsiveness in high-concurrency environments, while synchronous I/O offers simpler code and a predictable execution flow, which suits sequential, low-concurrency workloads. Evaluating factors such as CPU utilization, latency tolerance, and expected concurrency helps guide the choice between asynchronous and synchronous I/O.
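The two models can also be mixed. When an asynchronous application must call existing synchronous code, one common option in Python (3.9+) is `asyncio.to_thread`, which runs the blocking function in a worker thread so the event loop keeps serving other tasks. The function `legacy_blocking_call`, its 0.2 s delay, and the `heartbeat` task are hypothetical stand-ins used only for this sketch.

```python
import asyncio
import time

def legacy_blocking_call(x: int) -> int:
    # Hypothetical synchronous library function (e.g. a blocking driver call).
    time.sleep(0.2)
    return x * 2

async def heartbeat(ticks: list) -> None:
    # Keeps ticking only if the event loop itself is not blocked.
    for _ in range(3):
        ticks.append(time.perf_counter())
        await asyncio.sleep(0.05)

async def main() -> tuple:
    ticks: list = []
    # Run the blocking call in a worker thread while the heartbeat continues.
    result, _ = await asyncio.gather(
        asyncio.to_thread(legacy_blocking_call, 21),
        heartbeat(ticks),
    )
    return result, ticks

result, ticks = asyncio.run(main())
print(result, len(ticks))  # 42 3
```

Calling `legacy_blocking_call` directly inside a coroutine would instead stall the whole event loop for its full duration.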

Future Trends in I/O Technology

Future trends in I/O technology point toward wider adoption of asynchronous I/O because of its efficiency in handling many simultaneous data streams without blocking. Advances in hardware interfaces, such as NVMe over Fabrics and persistent memory, are expected to further improve asynchronous I/O performance by reducing latency and increasing throughput. Machine learning for predictive I/O scheduling may also help optimize resource allocation, making asynchronous I/O more adaptive and scalable in cloud-native and edge computing environments.

asynchronous I/O vs synchronous I/O Infographic

difterm.com
