Serverless functions offer automatic scaling and cost efficiency by charging only for actual execution time, making them ideal for event-driven applications and microservices. Containerized services provide greater control over the runtime environment, enabling consistent deployment across multiple platforms with the ability to manage dependencies and configurations explicitly. Choosing between serverless functions and containerized services depends on specific workload requirements, performance needs, and operational preferences.

Comparison Table

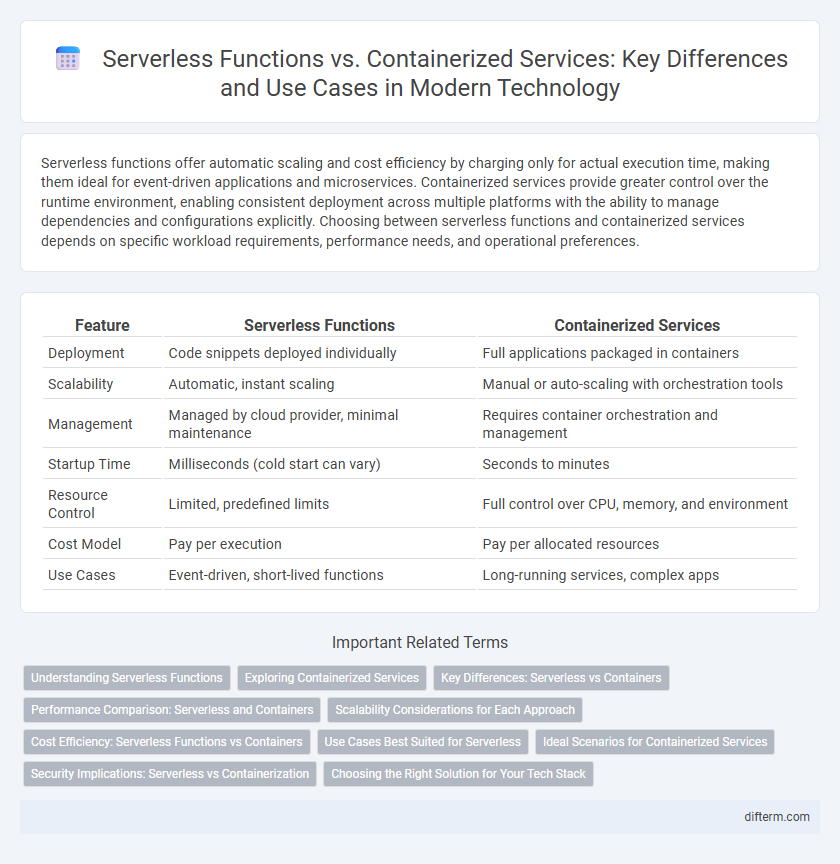

| Feature | Serverless Functions | Containerized Services |
|---|---|---|
| Deployment | Individual functions deployed independently | Full applications packaged as container images |
| Scalability | Automatic, provider-managed scaling (including scale to zero) | Manual scaling, or autoscaling through an orchestrator such as Kubernetes |
| Management | Managed by the cloud provider; minimal maintenance | Requires container orchestration and ongoing management |
| Startup Time | Milliseconds when warm; cold starts add latency | Seconds to minutes to start, with no per-request startup once running |
| Resource Control | Limited to provider-defined memory, CPU, and timeout limits | Full control over CPU, memory, and the runtime environment |
| Cost Model | Pay per invocation and execution time | Pay for allocated resources, including idle time |
| Use Cases | Event-driven, short-lived tasks | Long-running services, complex applications |

Understanding Serverless Functions

Serverless functions enable developers to run code without managing infrastructure, automatically scaling based on demand and charging only for execution time, which optimizes cost and resource efficiency. Unlike containerized services that require managing deployment environments and scaling, serverless abstracts server management by executing discrete functions triggered by events. This approach accelerates development cycles and simplifies maintenance, making it ideal for applications with unpredictable workloads and event-driven processes.
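To make "discrete functions triggered by events" concrete, here is a minimal sketch of a function handler. It assumes an AWS Lambda-style Python entry point (`lambda_handler(event, context)`) invoked with an API Gateway-style proxy event; the `name` field in the request body is hypothetical. The platform calls the handler once per event and handles routing, scaling, and server management.

```python
import json


def lambda_handler(event, context):
    """Handle a single event; the platform invokes this once per request.

    Assumes an API Gateway-style proxy event whose JSON body may carry a
    hypothetical "name" field. `context` holds runtime metadata (unused here).
    """
    # "body" may be absent or None, so fall back to an empty JSON object.
    body = json.loads(event.get("body") or "{}")
    name = body.get("name", "world")
    # Return an HTTP-style response; no server process is managed by this code.
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }
```

Locally, calling `lambda_handler({"body": json.dumps({"name": "Ada"})}, None)` returns a 200 response whose body greets "Ada".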

Exploring Containerized Services

Containerized services offer greater control over application environments by encapsulating software and its dependencies within isolated containers, enabling consistent deployment across multiple platforms. They scale efficiently when paired with orchestration tools like Kubernetes, which automate container scheduling, scaling, and load balancing. Containerization also supports microservices architectures and fits naturally into continuous integration and continuous deployment (CI/CD) pipelines, which accelerate development cycles, while the isolation between containers improves fault isolation.
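By contrast with a function handler, a containerized service is typically a long-running process that the image starts and the orchestrator keeps alive. The sketch below uses only the Python standard library; the `PORT` environment variable, its default of 8080, and the `/healthz` path are assumed conventions, not requirements of any particular platform. An orchestrator such as Kubernetes could probe the health endpoint to decide whether to restart the container.

```python
import json
import os
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


class ServiceHandler(BaseHTTPRequestHandler):
    """Minimal HTTP service of the kind one would package in a container."""

    def do_GET(self):
        if self.path == "/healthz":
            # Assumed liveness endpoint for an orchestrator to probe.
            payload, status = {"status": "ok"}, 200
        else:
            payload, status = {"error": "not found"}, 404
        data = json.dumps(payload).encode()
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)

    def log_message(self, format, *args):
        pass  # silence the default per-request stderr logging in this sketch


def make_server(port=None):
    """Build the server; configuration comes from the environment by default."""
    if port is None:
        # PORT is an assumed variable name; 8080 is an arbitrary default.
        port = int(os.environ.get("PORT", "8080"))
    # Bind to all interfaces so the container's published port is reachable.
    return ThreadingHTTPServer(("0.0.0.0", port), ServiceHandler)
```

As the container's entry point, `make_server().serve_forever()` keeps the process running between requests, which is the persistent-resource model the comparison above describes.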

Key Differences: Serverless vs Containers

Serverless functions execute code in response to events without managing infrastructure, offering automatic scaling and cost-efficiency based on execution time. Containerized services run applications in isolated environments with persistent resources, providing greater control over runtime, dependencies, and scaling configurations. The key differences lie in operational management, where serverless abstracts infrastructure while containers require orchestration tools like Kubernetes for deployment and scaling.

Performance Comparison: Serverless and Containers

Serverless functions scale out rapidly and perform well for short-duration tasks, but a cold start (initializing a new execution environment) adds latency to the first request a new instance handles. Containerized services, once running, keep instances warm and resources allocated, which avoids per-request startup delays and suits long-running processes and complex workloads. The choice depends on workload characteristics: serverless excels at bursty, stateless executions, while containers deliver sustained performance under heavy, steady loads.
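A common way to soften cold starts is to do expensive setup once per execution environment and reuse it across warm invocations. The sketch below simulates that pattern: module-level state persists for as long as the (simulated) instance lives, so only the first call pays the initialization cost. `_expensive_init` is a hypothetical stand-in for loading configuration, SDK clients, or models, and the 50 ms sleep is an arbitrary simulated delay.

```python
import time

# Module-level state lives as long as the execution environment does.
# On typical FaaS platforms, a reused ("warm") instance keeps these values.
_client = None
_init_count = 0


def _expensive_init():
    """Hypothetical stand-in for loading config, SDK clients, or models."""
    global _init_count
    _init_count += 1
    time.sleep(0.05)  # simulated initialization cost
    return {"ready": True}


def handler(event, context=None):
    global _client
    cold = _client is None
    if cold:
        _client = _expensive_init()  # paid only on a cold start
    return {"cold_start": cold, "inits_so_far": _init_count}
```

Calling `handler({})` twice reports a cold start only on the first call, while the initialization counter stays at 1. A container that stays running gets the same effect for every request after startup.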

Scalability Considerations for Each Approach

Serverless functions automatically scale based on incoming request volume, enabling rapid and cost-efficient handling of variable workloads without manual intervention. Containerized services require explicit orchestration through platforms like Kubernetes to scale instances, providing more control over resource allocation but demanding additional management and configuration. Both approaches address scalability differently, with serverless optimizing for on-demand elasticity and containers excelling in predictable, long-running applications requiring consistent performance.
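For containers, "explicit orchestration" usually means a rule such as the one used by the Kubernetes Horizontal Pod Autoscaler: desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue), with no change when the ratio is within a tolerance of 1.0 (0.1 by default). The sketch below implements that core rule and clamps the result to a replica range. It deliberately omits stabilization windows, pod readiness handling, and scaling policies, and the default `min_replicas`/`max_replicas` values are hypothetical.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Simplified HPA-style replica calculation.

    desired = ceil(current_replicas * current_metric / target_metric),
    skipped when the ratio is within `tolerance` of 1.0, then clamped
    to [min_replicas, max_replicas].
    """
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas  # close enough to target: leave as-is
    else:
        desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))
```

For example, 3 replicas averaging 90% CPU against a 60% target give ceil(3 * 1.5) = 5 replicas, while a reading of 63% falls within the tolerance and leaves the count at 3. Serverless platforms make the equivalent decision internally, per request.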

Cost Efficiency: Serverless Functions vs Containers

Serverless functions offer cost efficiency by charging only for actual execution time and scaling automatically, which eliminates both routine server management and the cost of idle capacity. Containerized services typically incur ongoing costs for reserved infrastructure and fixed resource allocations, even during periods of low usage. For workloads with unpredictable or intermittent demand, serverless architectures minimize expenses by tracking traffic without over-provisioning; for steady, high-volume traffic, however, per-invocation charges can exceed the cost of always-on containers.
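Whether pay-per-use is cheaper depends on volume, and a quick break-even calculation makes the trade-off visible. The prices below are hypothetical round numbers chosen for easy arithmetic, not any provider's published rates, and the model ignores free tiers, data transfer, operational labor, and whether a single container could actually serve the load.

```python
# Hypothetical round-number prices for illustration only; substitute your
# provider's published rates before drawing conclusions.
PRICE_PER_REQUEST = 0.0000002   # USD per invocation
PRICE_PER_GB_SECOND = 0.00002   # USD per GB-second of execution
CONTAINER_MONTHLY_COST = 30.0   # USD for one always-on container instance


def serverless_monthly_cost(requests, avg_duration_s, memory_gb):
    """Pay-per-use: a per-request fee plus compute billed in GB-seconds."""
    compute_gb_s = requests * avg_duration_s * memory_gb
    return requests * PRICE_PER_REQUEST + compute_gb_s * PRICE_PER_GB_SECOND


def break_even_requests(avg_duration_s, memory_gb):
    """Monthly request volume at which serverless costs as much as the container."""
    cost_per_request = (PRICE_PER_REQUEST
                        + avg_duration_s * memory_gb * PRICE_PER_GB_SECOND)
    return CONTAINER_MONTHLY_COST / cost_per_request
```

With these placeholder prices, 1 million requests per month at 0.2 s and 0.5 GB cost about 2.20 USD on the serverless side versus 30 USD for the container; the two break even at roughly 13.6 million requests per month, above which the always-on container is cheaper in this toy model.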

Use Cases Best Suited for Serverless

Serverless functions excel in event-driven applications such as real-time data processing, API backends, and automated workflows where rapid scaling and minimal infrastructure management are crucial. They are ideal for intermittent workloads with unpredictable traffic patterns, allowing cost-efficient execution without the overhead of provisioning servers. Use cases like IoT data ingestion, chatbots, and lightweight microservices benefit from serverless architecture due to its fine-grained resource allocation and automatic scaling capabilities.
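IoT data ingestion is a typical fit: devices publish readings to a queue, and the platform invokes a function with batches of messages. The sketch below assumes an Amazon SQS-style event (`{"Records": [{"messageId": ..., "body": ...}]}`) and hypothetical `device_id` and `temp_c` fields in each message body. It returns the `batchItemFailures` partial-batch response so that only malformed messages are retried; that response is honored only when partial batch responses (`ReportBatchItemFailures`) are enabled on the trigger.

```python
import json


def parse_readings(event):
    """Split an SQS-style batch into parsed readings and failed message IDs."""
    accepted, failures = [], []
    for record in event.get("Records", []):
        try:
            reading = json.loads(record["body"])
            accepted.append((reading["device_id"], float(reading["temp_c"])))
        except (KeyError, TypeError, ValueError):
            # Malformed message: report just this one so the rest is not retried.
            failures.append({"itemIdentifier": record.get("messageId")})
    return accepted, failures


def ingest_handler(event, context=None):
    accepted, failures = parse_readings(event)
    # Hypothetical: a real function would persist `accepted` to a data store here.
    return {"batchItemFailures": failures}
```

Each invocation handles one small batch and exits, so capacity follows the queue depth without any servers to provision.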

Ideal Scenarios for Containerized Services

Containerized services are ideal for complex applications that need consistent environments across development, testing, and production, and they scale predictably under orchestrators such as Kubernetes or Docker Swarm. They support microservices architectures, allowing each service to be deployed and managed independently with fine-grained resource allocation and, where needed, persistent storage. Enterprises benefit most from containerization when running stateful applications, long-running processes, and workloads that demand extensive customization, security hardening, and control over the runtime environment.
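Long-running containerized processes must also handle their own lifecycle. When a container is stopped, Docker and Kubernetes send SIGTERM and force-kill the process if it has not exited within a grace period, so a well-behaved worker finishes its current unit of work and shuts down cleanly. The sketch below shows that pattern with the standard `signal` module; the `Worker` class and its job loop are hypothetical stand-ins for real processing.

```python
import signal
import threading


class Worker:
    """Long-running worker that stops cleanly when the container is stopped."""

    def __init__(self):
        self._stop = threading.Event()
        self.processed = 0

    def install_signal_handlers(self):
        # Python only allows installing signal handlers from the main thread.
        signal.signal(signal.SIGTERM, self._on_sigterm)

    def _on_sigterm(self, signum, frame):
        self._stop.set()  # request shutdown after the current job

    def run(self, jobs):
        for job in jobs:
            if self._stop.is_set():
                break  # stop taking new work once SIGTERM has arrived
            self.processed += 1  # hypothetical stand-in for real processing
        # A real worker would flush state and close connections here.
        return self.processed
```

A serverless function rarely needs this logic because the platform owns the process lifecycle; in a container, it is the application's responsibility.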

Security Implications: Serverless vs Containerization

Serverless functions run in isolated, provider-managed execution environments, which shrinks the attack surface by abstracting away the underlying infrastructure. Containerized services offer broader control but require diligent security management across the OS, runtime, and application layers, including rigorous patching, vulnerability scanning, and network segmentation to mitigate risks such as container escape and privilege escalation. Serverless platforms shift responsibility for infrastructure security to the cloud provider, yet functions remain susceptible to application-level threats such as injection attacks and excessive permissions. Evaluating security therefore means weighing the reduced operational overhead of serverless models against the granular security controls available in containerized deployments.
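Excessive permissions are one of the most common function-level risks, and a simple check can catch the worst cases. The sketch below assumes an AWS IAM-style policy document (a `Statement` list whose entries carry `Effect`, `Action`, and `Resource`, each either a string or a list) and flags `Allow` statements that use wildcard actions or resources. It is a rough heuristic, not a complete policy analyzer; for example, it ignores `NotAction`, `NotResource`, and conditions.

```python
def overly_broad_statements(policy):
    """Return Allow statements that grant wildcard actions or resources."""

    def as_list(value):
        # IAM fields may hold a single string or a list of strings.
        return value if isinstance(value, list) else [value]

    flagged = []
    for stmt in as_list(policy.get("Statement", [])):
        if stmt.get("Effect") != "Allow":
            continue
        actions = as_list(stmt.get("Action", []))
        resources = as_list(stmt.get("Resource", []))
        # "*" or "service:*" actions, or a "*" resource, exceed least privilege.
        if any(a == "*" or a.endswith(":*") for a in actions) or "*" in resources:
            flagged.append(stmt)
    return flagged
```

Scoping each function's role to the specific actions and resources it uses limits the damage an injection flaw in that function can cause.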

Choosing the Right Solution for Your Tech Stack

Serverless functions offer scalability and cost-efficiency by executing code on demand without managing infrastructure, making them ideal for event-driven applications and microservices. Containerized services provide greater control over application environments and dependencies, supporting complex workloads and consistent performance across development, testing, and production stages. Assessing factors like operational overhead, workload complexity, and scalability requirements is crucial when selecting between serverless functions and containerized services to optimize your technology stack.

Serverless functions vs Containerized services Infographic

difterm.com